This is the second post in a series I'm doing about whether I can trade faster strategies than I currently do, without being destroyed by high trading costs. The series is motivated in the first post, here.

In this post, I see if it's possible to 'smuggle in' high cost trading strategies, due to the many layers of position sizing, buffering and optimisation that sit between the underlying forecast and the final trades that are done. Of course, it's also possible that the layering completely removes the effect of the high cost strategy!

Why might we want to do this? Well, fast trend following strategies in particular have some nice properties, as discussed in this piece by my former employers AHL. And fast mean reversion strategies, of the type I discuss in part four of my forthcoming book, are extremely diversifying versus medium and slow speed trend following.

It's a nice piece, but I'm a bit cross they have taken another of the possible 'speed/fast' cultural references that I planned to use in this series.

Full series of posts:

- In the first post, I explored the relationship between instrument cost and momentum performance.

- This is the second post

My two trading strategies

It's worth briefly reviewing how my two trading strategies actually work (the one I traded until about a year ago, and the one I currently trade).

Both strategies start off the same way; I have a pool of trading rule variations that create forecasts for a given instrument. What is a trading rule variation? Well a trading rule would be something like a breakout rule with an N day lookback. A variation of that rule is a specific parameter value for N. How do we decide which instruments should use which trading rule variations? Primarily, that decision is based around costs. A variation that trades quickly - has a higher forecast turnover - like a breakout rule with a small value for N, wouldn't be suitable for an instrument with a high risk adjusted cost per trade.

Once I have a set of trading rule variations for a given instrument, I take a weighted average of their forecast values, which gives me a combined forecast. Note that I use equal weights to keep things simple. That forecast will change less for expensive instruments. I then convert those forecasts into idealised positions in numbers of contracts. At this stage these idealised numbers are unrounded. During that process there will be some additional turnover introduced by the effect of scaling positions for volatility, changes in price, movements in FX rates and changes in capital.

For my original trading system (as described in some detail in my first and third books), I then use a cost reduction technique known as buffering (or position inertia in my first book Systematic Trading). Essentially this resolves the unrounded position to a rounded position, but I only trade if my current position is outside of a buffer around the idealised position. So if the idealised position moves a small amount, we don't bother trading.

Importantly, the buffering width I use is the same for all instruments (10% of an average position); actually in theory it should be wider for expensive instruments and narrower for cheaper instruments.
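To make the buffering idea concrete, here is a minimal sketch in Python. It is not my production code: the function names are hypothetical, the buffer width is passed in directly in contracts, and I'm assuming that when we do trade, we trade to the nearest edge of the buffer rather than all the way to the idealised position.

```python
def buffered_position(ideal, current, buffer_width):
    """Return the rounded position to hold, given an unrounded ideal position.

    Assumption: if the current position is outside the buffer we trade to the
    nearest (rounded) edge of the buffer; otherwise we do nothing.
    """
    lower = round(ideal - buffer_width)
    upper = round(ideal + buffer_width)
    if current < lower:
        return lower
    if current > upper:
        return upper
    return current  # inside the buffer: no trade


def apply_buffer(ideal_positions, buffer_width, start=0):
    """Walk through a series of ideal positions, holding the buffered position."""
    held = start
    path = []
    for ideal in ideal_positions:
        held = buffered_position(ideal, held, buffer_width)
        path.append(held)
    return path


# Illustrative numbers only: small moves in the ideal position produce no trade.
example_path = apply_buffer([10.3, 10.6, 9.8, 12.4], buffer_width=1.0)
```

In this toy example the move in the ideal position from 10.6 to 9.8 doesn't generate a trade, because the held position is still inside the buffer.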

My new trading system uses a technique called dynamic optimisation ('DO'), which tries to trade the portfolio of integer positions that most closely match the idealised position, bearing in mind I have woefully insufficient capital to trade an idealised portfolio with over 100 instruments. You can read about this in the new book, or for cheapskates there is a series of blogposts you can read for free.

As far as slowing down trading goes, there are two stages here. The first is that when we optimise our positions, we consider the trades required, and penalise expensive trades. I use the actual trading cost here, so we'll be less likely to trade in more expensive instruments. The second stage involves something similar to the buffering technique mentioned above, except that it is applied to the entire set of trades. More here. In common with the buffer on my original trading strategy, the width of the buffer is effectively the same for every instrument.

Finally for both strategies, there will be additional trading from rolling to new futures contracts.

To summarise then, the following will determine the trading frequency for a given instrument:

- The set of trading rule variations we have selected, using per instrument trading costs.

- The effect of rolling, scaling positions for volatility, changes in price, movements in FX rates (and in production, but not my constant capital backtests, changes in capital).

- In my original system, a buffer that's applied to each instrument position, with a width that is invariant to per instrument trading cost.

- In my new DO system, a cost penalty on trading which is calculated using per instrument trading cost.

- In my new DO system, a buffer that's applied to all trades in one go, with a width that is invariant to per instrument trading cost.

(There are some slight simplifications here; I'm missing out some of the extra bits in my strategy such as vol attenuation and a risk overlay which may also contribute to turnover)

There are some consequences of this. One is that even if you have a constant forecast (the so-called 'asset allocating investor' in Systematic Trading), you will still do some trading because of the effects listed under point 2. Another is that if you are trading very quickly, it's plausible that quite a lot of that trading will get 'soaked up' by stage 3, or stages 4 and 5 if you're running DO.

It's this latter effect we're going to explore in this post. My thesis is that we might be able to include a faster trading rule variation alongside slower variations, as we'll get the following behaviour: most of the time the faster rule will be 'damped out' by stages 3 to 5, and we'll effectively only be trading the slower trading rule variations. However when it has a particularly large effect on our forecasts, then it will contribute to our positions, giving us a little extra alpha. That's the idea anyway.

Rolling the pitch

Before doing any kind of back-testing around trading costs, it's important to make sure we're using accurate numbers. This is particularly important for me, as I've recently added another few instruments to my database, and I now have over 200 (206 to be precise!), although without duplicates like micro/mini futures the figure comes down to 176.

First I double checked that I had the right level of commissions in my configuration file, by going through my brokerage trade report (sadly this is a manual process right now). It turns out my broker has been inflating commissions a bit since I last checked, and there were also some errors and omissions.

Next I checked I had realistic levels for trading spreads. For this I have a report and a semi-automated process that updates my configuration using information from both trades and regular price samples.

Since I was in spring cleaning mode (OK, it's autumn in the UK, but I guess it's spring in the southern hemisphere?) I also took the opportunity to update my list of 'bad markets' that are too illiquid or costly to trade, and also my list of duplicate markets where I have the choice of trading e.g. the mini or micro future for a given instrument. Turns out quite a few of the recently added instruments are decently liquid micro futures, which I can trade instead of the full fat alternatives.

At some point I will want to change my instrument weights to reflect these changes, but I'm going to hold fire until after I've finished this research. It will also make more sense to do this in April, when I do my usual end of year review. If I wait until then, it will make it easier to compare backtested and live results for the last 12 months.

Changes in turnover

To get some intuition about the impact of these various effects, I'm going to start off testing one of my current trading rules: exponentially weighted moving average crossover (EWMAC). There are 6 variations of this rule that I trade, ranging from EWMAC4,16 (which is very fast), up to EWMAC64,256 (slow).

To start with, let's measure the different turnover of forecasts and positions for each of these trading rules as we move through the following stages:

- Trading rule forecast

- Raw position before buffering

- Buffered position

I will use the S&P 500 as my arbitrary instrument here, but in practice it won't make much difference - I could even use random data to get a sensible answer here.

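| EWMAC speed | forecast | raw_position | buffered_position |
|---|---|---|---|
| 4 | 61.80 | 52.63 | 49.81 |
| 8 | 31.13 | 27.80 | 24.98 |
| 16 | 16.32 | 16.23 | 13.88 |
| 32 | 9.69 | 11.44 | 8.92 |
| 64 | 7.46 | 10.16 | 7.04 |
| Long | 0.00 | 2.53 | 2.14 |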

Obviously, the turnover of the forecast slows as we increase the span of the EWMAC in the first column. The final row shows a constant forecast rule, which obviously has a turnover of zero. In the next column is the turnover of the raw position. For very slow forecasts, this is higher than for the underlying forecast, as we trade for the reasons outlined above (the effect of rolling, scaling positions for volatility, changes in price and movement in FX rates). As the final row shows, this imposes a lower bound on turnover no matter how slow your forecasts are. However for very fast forecasts, the position turnover is actually a little lower than the forecast turnover. This is a hint that 'smuggling in' may have some promise.

Now consider the buffered position. Obviously this has a lower turnover than the raw position. The reduction is proportionally higher for slower trading rules: it's about a 5% reduction for ewmac4 and more like 30% for the very slowest momentum rule. Curiously, the buffering has less of an effect on the long only constant forecast rule than on ewmac64.

All of this means that something we think has a turnover of over 60 (ewmac4) will actually end up with a turnover of more like 50 after buffering. That is a 17% reduction.

Don't get too excited yet, because turnover will be higher in a multi instrument portfolio, because of the effect of instrument diversification: turnover will be roughly equal to the IDM multiplied by the turnover for a single instrument, and the IDM for my highly diversified portfolio here is around 2.0.
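For anyone who wants to play with forecast turnover themselves, here is a rough sketch in pure Python using random data. It is heavily simplified compared with what I actually run: the crossover isn't divided by volatility, and there is no forecast scaling or capping. The turnover definition (annualised average absolute daily change, divided by the average absolute level, with 256 business days in a year) and all the function names are assumptions made for this illustration.

```python
import random


def ewma(values, span):
    """Exponentially weighted moving average with alpha = 2 / (span + 1)."""
    alpha = 2.0 / (span + 1)
    smoothed = []
    prev = values[0]
    for v in values:
        prev = alpha * v + (1 - alpha) * prev
        smoothed.append(prev)
    return smoothed


def raw_ewmac(prices, fast_span):
    """Fast EWMA minus slow EWMA, with the slow span set to 4x the fast span."""
    fast = ewma(prices, fast_span)
    slow = ewma(prices, 4 * fast_span)
    return [f - s for f, s in zip(fast, slow)]


def annual_turnover(series, days_per_year=256):
    """Annualised average absolute daily change, relative to the average absolute level."""
    changes = [abs(b - a) for a, b in zip(series, series[1:])]
    avg_abs_level = sum(abs(x) for x in series) / len(series)
    if avg_abs_level == 0:
        return 0.0
    return days_per_year * (sum(changes) / len(changes)) / avg_abs_level


# Random walk 'prices'; the seed and length are arbitrary.
rng = random.Random(42)
prices = [100.0]
for _ in range(10000):
    prices.append(prices[-1] + rng.gauss(0, 1))

turnovers = {span: annual_turnover(raw_ewmac(prices, span)) for span in (4, 8, 16, 32, 64)}
```

The numbers won't match the table exactly, given the simplifications, but the forecast turnover should fall as the span increases.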

Now, what about the effects of dynamic optimisation? Because dynamic optimisation only makes sense across instruments, I'm going to do this exercise for 50 or so randomly selected instruments (50 rather than 200 to save time running backtests - it won't affect the results much).

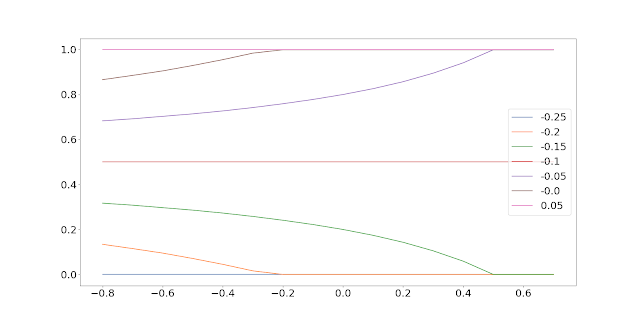

The y-axis shows the turnover, with each line representing a different trading speed.

The x-axis labels are as follows:

- The total turnover of the strategy before any dynamic optimisation takes place; this is analogous to the raw position in the table above. Again this is higher than the figures for the S&P 500 above because of the effect of instrument diversification.

- The total turnover of the strategy after dynamic optimisation, but without any cost penalty or buffering.

- The total turnover of the strategy after dynamic optimisation, with a cost penalty, but with no buffering.

- The total turnover of the strategy after dynamic optimisation, without a cost penalty, but with buffering.

- The total turnover of the strategy after dynamic optimisation, with a cost penalty and buffering.

Interestingly, the optimisation adds a 'fixed cost' of extra turnover per year to the strategy, although this does not happen with the fastest rule. Both buffering and the trading cost penalty reduce the turnover, although the cost penalty has the larger standalone effect. Taken together, costs and buffering reduce turnover significantly, by between around a third and a half.

What does this all mean? Well it means we probably have a little more headroom than we think when considering whether a particular trading rule is viable, since it's likely the net effect of position sizing plus buffering will slow things down. This isn't true for the very slowest trading rules with dynamic optimisation, which can't quite overcome the turnover increase from position sizing, but this is unlikely to be an issue for the cheaper instruments where we'd consider adding a faster trading rule.

Changes in costs (dynamic optimisation)

You might expect higher turnover to always linearly lead to higher costs. That's certainly the case for the simple one instrument, S&P 500 only, setup above. But this is not automatically the case for dynamic optimisation. Indeed, we can think of some pathological examples where the turnover is much higher for a given strategy, but costs are lower, because the DO has chosen to trade instrument(s) with lower costs.

In fact the picture here is quite similar to turnover, so the point still stands. We can knock off about 1/3 of the costs of trading the very fastest EWMAC through the use of dynamic optimisation with a cost penalty (and buffering also helps). Even with the slowest of our EWMAC we still see a 25% reduction in costs.

Forecast combination

Now let us move from a simple world in which we are selecting a single momentum rule, and foolishly trading it on every instrument we own regardless of costs, to one in which we trade multiple momentum rules.

There is another effect at work in a fully fledged trading strategy, that won't be obvious from the isolated research we've done so far, and that is forecast combination. If we introduce a new fast trading rule, we're unlikely to give it 100% of the forecast weights. This means that its effect on the overall turnover of the strategy will be limited.

To take a simple example, suppose we're trading a strategy with a forecast turnover of 15, leading to a likely final turnover of ~13.3 after buffering and what not (as explained above). Now we introduce a new trading rule with a 10% allocation, that has a turnover of 25. If the trading rule has zero correlation with the other rules, then our forecast turnover will increase to (0.9 * 15) + (0.1 * 25) = 16. After buffering and what not the final turnover will be around 14.0. A very modest increase really.

This is too simplified. If a forecast really is uncorrelated, then adding it will increase the forecast diversification multiplier (FDM), which will increase the turnover of the final combined forecast. But if the forecast is highly correlated, then the raw turnover will increase by more than we expect. In both of these cases we get slightly more turnover; so things will be a little worse than we expect.
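The simple weighted average calculation from the example can be written as a couple of lines of Python. This is only the naive version: it deliberately ignores the FDM and correlation effects just described, and the function name is made up for illustration.

```python
def blended_forecast_turnover(weights, turnovers):
    """Naive forecast turnover of a blend: weighted average of rule turnovers.

    Ignores the forecast diversification multiplier and any correlation
    between rules, so in practice the real figure will be a little higher.
    """
    if abs(sum(weights) - 1.0) > 1e-9:
        raise ValueError("Forecast weights should sum to one")
    return sum(w * t for w, t in zip(weights, turnovers))


# 90% in the existing strategy (turnover 15), 10% in the new rule (turnover 25)
naive_turnover = blended_forecast_turnover([0.9, 0.1], [15, 25])
```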

Implications for the speed limit

A reminder: I have a trading speed limit concept which states that I don't want to allocate more than a third of my expected pre-cost Sharpe Ratio towards trading costs. For an individual trading rule on a single instrument, that equates to a maximum of around 0.13 or 0.10 SR annual units to be spent on costs, depending on which of my books you are reading (consistency is for the hoi polloi). The logic is that the realistic median performance for an individual instrument is unlikely to be more than 0.40 or 0.30 SR.

(At a portfolio level we get higher costs because of additional leverage from the instrument diversification multiplier, but as long as the realised improvement in Sharpe Ratio is at least as good as that we'll end up paying the same or a lower proportion in expected costs).

How does that calculation work in practice? Suppose you are trading an instrument which rolls quarterly, and you have a cost of 0.005 SR units per trade. The maximum turnover for a forecast to meet my speed limit, and thus be included in the forecast combination for a given instrument, assuming a speed limit of 0.13 SR units is:

Annual cost, SR units = (forecast turnover + rolls per year) * cost per trade

Maximum annual cost, SR units = (maximum forecast turnover + rolls per year) * cost per trade

Maximum forecast turnover = (Maximum annual cost / cost per trade) - rolls per year

Maximum forecast turnover = (0.13 / 0.005) - 4 = 22
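Here is the same calculation as a short Python sketch, which makes it easy to try other cost levels or roll frequencies. The function names are hypothetical, and the default speed limit of 0.13 SR units is just the figure used in the example above.

```python
def annual_cost_sr(forecast_turnover, rolls_per_year, cost_per_trade_sr):
    """Annual cost in SR units = (forecast turnover + rolls per year) * cost per trade."""
    return (forecast_turnover + rolls_per_year) * cost_per_trade_sr


def max_forecast_turnover(max_annual_cost_sr, rolls_per_year, cost_per_trade_sr):
    """Highest forecast turnover that keeps annual costs at or below the speed limit."""
    return max_annual_cost_sr / cost_per_trade_sr - rolls_per_year


def within_speed_limit(forecast_turnover, rolls_per_year, cost_per_trade_sr,
                       max_annual_cost_sr=0.13):
    """True if a rule with this forecast turnover is cheap enough for the instrument."""
    cost = annual_cost_sr(forecast_turnover, rolls_per_year, cost_per_trade_sr)
    return cost <= max_annual_cost_sr


# Quarterly roll, 0.005 SR units per trade, speed limit of 0.13 SR units
limit = max_forecast_turnover(0.13, rolls_per_year=4, cost_per_trade_sr=0.005)
```

With these inputs a forecast turnover of around 16 would pass the test for this instrument, whilst one of over 60 would fail it comfortably.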

However that ignores the effect of everything we've discussed so far:

- forecast combination

- the FDM (adds leverage, makes things worse)

- other sources of position turnover, mainly vol scaling (makes things better for very fast rules)

- the IDM multiplier (adds leverage, makes things worse)

- buffering (static system) - makes things better

- buffering and cost penalty (DO) - makes things better

Of course it's better, all other things being equal, to trade more slowly and spend less on costs but all of this suggests we probably do have room to make a modest allocation to a relatively fast trading rule without it absolutely killing us on trading costs.

An experiment with combined forecasts

Let's set up the following experiment. I'm interested in four different setups:

- Allocating only to the very slowest three momentum speeds (regardless of instrument cost, equally weighted)

- Allocating only to the very fastest three momentum speeds (regardless of instrument cost, equally weighted)

- Allocating conditionally to momentum speeds depending on the costs of an instrument and the turnover of the trading rule, ensuring I remain below the 'speed limit'. This is what I do now. Note that this will imply that some instruments are excluded.

- Allocating to all six momentum speeds in every instrument (regardless of instrument cost, equally weighted)

1. is a 'slow' system (it's not that slow!), whilst 2. is a fast system. In the absence of costs, we would probably want to trade them both, given the likely diversification and other benefits. Options 3 and 4 explore two different ways of doing that. Option 3 involves throwing away trading rules that are too quick for a given instrument, whilst option 4 ploughs on hoping everything will be okay.

How should we evaluate these? Naturally, we're probably most interested in the turnover and costs of options 3 and 4. It will be interesting to see if the costs of option 4 are a hell of a lot higher, or if we are managing to 'smuggle in'.

What about performance? Pure Sharpe ratio is one way, but may give us a mixed picture. In particular, the pre-cost SR of the faster rules has historically been worse than the slower rules. The fourth option will produce a 50:50 split between the two, which is likely to be sub-optimal. Really what we are interested in here is the 'character' of the strategies. Hence a better way is to run regressions of 3 and 4 versus 1 and 2. This will tell us the implicit proportion of fast trading that has survived the various layers between forecast and position.

Nerdy note: Correlations between 1 and 2 are likely to be reasonably high (around 0.80), but not enough to cause problems with co-linearity in the regression.
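For the curious, the regression itself is nothing fancy. Below is a minimal pure Python sketch of a two factor regression with an intercept, run on made up data rather than my actual backtest returns. The function name, the synthetic return series and the 'true' betas of 0.6 and 0.4 are all arbitrary assumptions for the purposes of illustration.

```python
import random


def two_factor_betas(y, x1, x2):
    """OLS betas of y on x1 and x2 (with an intercept), via demeaned normal equations."""
    n = len(y)
    mean_y, mean_1, mean_2 = sum(y) / n, sum(x1) / n, sum(x2) / n
    dy = [v - mean_y for v in y]
    d1 = [v - mean_1 for v in x1]
    d2 = [v - mean_2 for v in x2]
    s11 = sum(a * a for a in d1)
    s22 = sum(a * a for a in d2)
    s12 = sum(a * b for a, b in zip(d1, d2))
    s1y = sum(a * b for a, b in zip(d1, dy))
    s2y = sum(a * b for a, b in zip(d2, dy))
    det = s11 * s22 - s12 * s12  # close to zero if the regressors are co-linear
    beta_1 = (s22 * s1y - s12 * s2y) / det
    beta_2 = (s11 * s2y - s12 * s1y) / det
    return beta_1, beta_2


# Synthetic monthly returns: 'fast' and 'slow' share a common component,
# so they are positively correlated but not co-linear.
rng = random.Random(1)
common = [rng.gauss(0, 0.04) for _ in range(240)]
fast_returns = [c + rng.gauss(0, 0.02) for c in common]
slow_returns = [c + rng.gauss(0, 0.02) for c in common]

# A made up combined strategy with known exposures, used to check the regression.
combined_returns = [0.6 * f + 0.4 * s for f, s in zip(fast_returns, slow_returns)]

beta_fast, beta_slow = two_factor_betas(combined_returns, fast_returns, slow_returns)
```

Because the combined series here is an exact mix of the two factors, the regression recovers the betas of 0.6 and 0.4; with real returns there will of course be noise around the estimates.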

To do this exercise I'm going to shift to a series of slightly different portfolio setups. Firstly, I will use the full 102 instruments in my 'jumbo portfolio'. Each of these has met a cutoff for SR costs per transaction. I will see how this does for both the static set of instruments (using a notional $50 million to avoid rounding errors), but also for the dynamic optimisation (using $500K).

However I'm also going to run my full list of 176 instruments only for dynamic optimisation, which will include many instruments that are far too expensive to meet my SR cost cutoff or are otherwise too illiquid (you can see a list of them in this report; there are about 70 or so at the time of writing; there is no point doing this for static optimisation as the costs would be absolutely penal for option 4). I will consider two sub options here: forming forecasts for these instruments but not trading them (which is my current approach), and allowing them to trade (if they can survive the cost penalty, which I will still be applying).

Note that I'm going to fit instrument weights (naturally in a robust, 'handcrafted' setup using only correlations). Otherwise I'd have an unbalanced portfolio, since there are more equities in my data set than other instruments.

To summarise then we have the following four scenarios in which to test the four options:

- Static system with 102 instruments ($50 million capital)

- Dynamic optimisation with 102 instruments ($500k)

- Dynamic optimisation with 176 instruments, constraining around 70 expensive or illiquid instruments from trading ($500k)

- Dynamic optimisation with 176 instruments, allowing expensive instruments to trade (but still applying a cost penalty) ($500k)

Results

Let's begin as before by looking at the total turnover and costs. Each line on the graph shows a different scenario:

- (Static) Static system with 102 instruments ($50 million capital)

- (DO_cheap) Dynamic optimisation with 102 instruments ($500k), which excludes expensive and illiquid instruments

- (DO_constrain) Dynamic optimisation with 176 instruments, constraining around 70 expensive or illiquid instruments from trading ($500k)

- (DO_unconstrain) Dynamic optimisation with 176 instruments, allowing expensive instruments to trade (but still applying a cost penalty) ($500k)

The x-axis shows the different options:

- (slow) Allocating only to the very slowest three momentum speeds (regardless of instrument cost, equally weighted)

- (fast) Allocating only to the very fastest three momentum speeds (regardless of instrument cost, equally weighted)

- (condition) Allocating conditionally to momentum speeds depending on the costs of an instrument and the turnover of the trading rule, ensuring I remain below the 'speed limit'. This is what I do now. Note that this will imply that some instruments are excluded completely.

- (all) Allocating to all six momentum speeds in every instrument (regardless of instrument cost, equally weighted)

First the turnovers

Now the costs (in SR units):

These show a similar pattern, but the difference between lines is more marked for costs. Generally speaking the static system is the most expensive way to trade anything. This is despite the fact that it does not have any super expensive instruments, since these have already been weeded out. Introducing DO with a full set of instruments, including many that are too expensive to trade, and allowing all of them to trade still reduces costs by around 20% when trading the three fastest rules or all six rules together.

Preventing the expensive instruments from trading (DO_constrain) lowers the costs even further, by around 30% [Reminder: This is what I currently do]. Completely removing expensive instruments provides a further reduction, but it is negligible.

Conditionally trading fast rules, as I do now, allows us to trade pretty much at the same cost level as a slow system: it's only 1 basis point of SR more expensive. But trading all trading rules for all instruments is a little more pricey.

Now how about considering the 'character' of returns? For each of options 3 and 4, I am going to regress their returns on the returns of option 1 and option 2. The following tables show the results. Each row is a scenario, and the columns show the betas on 'fast' (option 2) and 'slow' (option 1) respectively. I've resampled returns to a monthly frequency to reduce the noise.

First let's regress the returns from a strategy that uses *all* the trading rules for every instrument.

| Scenario | fast | slow |
|---|---|---|
| static | 0.590 | 0.557 |
| DO_cheap | 0.564 | 0.557 |
| DO_constrain | 0.550 | 0.561 |
| DO_unconstrain | 0.535 | 0.559 |

Each individual instrument is 50% fast, 50% slow, so this is exactly what we would expect with about half the returns of the combined strategy coming from exposure to the fast strategy, and about half from the slow (note there is no constraint for the Betas to add up to one, and no reason why they would do so exactly).

Now let's regress the returns from a conditional strategy on the fast and slow strategies in each scenario:

| Scenario | fast | slow |
|---|---|---|
| static | 0.762 | 0.337 |
| DO_cheap | 0.743 | 0.313 |
| DO_constrain | 0.805 | 0.288 |
| DO_unconstrain | 0.786 | 0.271 |

This is.... surprising! About 75% of the returns of the conditional strategy come from exposure to the fast trading rules, and 25% from the slow ones. By only letting the cheapest instruments trade the fast strategy, we've actually made the overall strategy look more like a fast strategy.

Conclusions

This has been a long post! Let me briefly summarise the implications.

- Buffering in a static system reduces turnover, and thus costs, by 17% on a very fast strategy giving us a little more headroom on the 'speed limit' that we think we have.

- Dynamic optimisation has the same effect, but is more efficient, reducing costs by around a third, because unlike static buffering the cost penalty is instrument specific.

- It's worth preventing expensive instruments from trading in DO, as the cost penalty doesn't seem to be 100% efficient in preventing them from trading. But there isn't any benefit in completely excluding these expensive instruments from the forecast construction stage.

- Surprisingly, allowing expensive instruments to trade quicker trading rules actually makes a strategy less correlated to a faster trading strategy. It also increases costs by around 50% versus the conditional approach (where only cheap instruments can trade quick rules).

Good news: all of this is a confirmation that what I'm currently doing* is probably pretty optimal!

* running DO with expensive instruments, but not allowing them to trade, and preventing expensive instruments from using quicker trading rules.

Bad news: it does seem that my original idea of just trading more fast momentum, in the hope of 'smuggling in' some more diversifying trading rules, is a little dead in the water.

In the next post, I will consider an alternative way of 'smuggling in' faster trading strategies - by using them as an execution overlay on a slower system.