There has been a very interesting discussion on Twitter relating to some things said by Sam Bankman-Fried (SBF), who at the time of writing has just vaporized billions of dollars in record time via the medium of his crypto exchange FTX, and provided future schoolchildren with a useful example of the meaning of the phrase nominative determinism*.

* Sam, Bank Man: Fried. Geddit?

Read the whole thread from the top:

https://twitter.com/breakingthemark/status/1591114381508558849

TL;DR: the views of SBF can be summarised as follows:

- Kelly criterion maximises log utility

- I don't have a log utility function. It's probably closer to linear.

- Therefore I should bet at higher than Kelly. Up to 5x would be just fine.

* It's unclear whether this is specifically what brought SBF down. At the time of writing he appears to have taken money from his exchange to prop up his hedge fund, so maybe the hedge fund was using >>> Kelly leverage, and this really is the case.

I've discussed parts of this subject briefly before, but you don't need to read the previous post.

Scope and assumptions

To keep it tight, and relevant to finance, this post will ignore arguments seen on Twitter related to one-off bets, and whether you should bet differently if you are considering your contribution to society as a whole. These are mostly philosophical discussions which are hard to solve with pictures. So the setup we have is:

- There is an arbitrary investment strategy, which I assume consists of a data generating process (DGP) producing Gaussian returns with a known mean and standard deviation (this ignores parameter uncertainty, which I've banged on about often enough, but effectively would result in even lower bet sizing).

- We make a decision as to how much of our capital we allocate to this strategy for an investment horizon of some arbitrary number of years, let's say ten.

- We're optimising L, the leverage factor, where L = 1 would be full investment, 2 would be 100% leverage, 0.5 would be 50% in cash and 50% in the strategy, and so on.

- We're interested in maximising the expectation of f(terminal wealth) after ten years, where f is our utility function.

- Because we're measuring expectations, we generate a series of possible future outcomes based on the DGP and take the expectation over those.

Specific parameters

import pandas as pd
import numpy as np
from math import log

# Annualised parameters of the Gaussian data generating process
ann_return = 0.1
ann_std_dev = 0.2

BUSINESS_DAYS_IN_YEAR = 256

# Scale to daily: the mean scales with time, the standard deviation with its square root
daily_return = ann_return / BUSINESS_DAYS_IN_YEAR
daily_std_dev = ann_std_dev / (BUSINESS_DAYS_IN_YEAR**.5)

years = 10
number_days = years * BUSINESS_DAYS_IN_YEAR


def get_series_of_final_account_values(monte_return_streams,
                                       leverage_factor = 1):
    # Terminal account value of each simulated path at the given leverage
    account_values = [account_value_from_returns(returns,
                                                 leverage_factor=leverage_factor)
                      for returns in monte_return_streams]

    return account_values


def get_monte_return_streams():
    # 10,000 independent ten-year paths of daily returns
    monte_return_streams = [get_return_stream() for __ in range(10000)]

    return monte_return_streams


def get_return_stream():
    return np.random.normal(daily_return,
                            daily_std_dev,
                            number_days)


def account_value_from_returns(returns, leverage_factor: float = 1.0):
    # Compound the leveraged daily returns, starting from wealth of 1
    one_plus_return = np.array(
        [1+(return_item*leverage_factor)
         for return_item in returns])
    cum_return = one_plus_return.cumprod()

    return cum_return[-1]


monte_return_streams = get_monte_return_streams()

Utility function: Expected log(wealth) [Kelly]

def expected_log_value(monte_return_streams,
                       leverage_factor = 1):
    series_of_account_values = get_series_of_final_account_values(
        monte_return_streams = monte_return_streams,
        leverage_factor = leverage_factor)
    # Log utility: average the log of terminal wealth across paths
    log_values_over_account_values = [log(account_value) for account_value in series_of_account_values]

    return np.mean(log_values_over_account_values)

And let's plot the results:

def plot_over_leverage(monte_return_streams, value_function):
    # Evaluate value_function at each leverage on a grid from 1.5 up to about 5
    leverage_ratios = np.arange(1.5, 5.1, 0.1)
    values = []
    for leverage in leverage_ratios:
        print(leverage)  # progress indicator; each evaluation is slow
        values.append(
            value_function(monte_return_streams, leverage_factor=leverage)
        )

    leverage_to_plot = pd.Series(
        values, index = leverage_ratios
    )

    return leverage_to_plot


leverage_to_plot = plot_over_leverage(monte_return_streams,
                                      expected_log_value)
leverage_to_plot.plot()

In this plot, and nearly all of those to come, the x-axis shows the leverage L and the y-axis shows the value of the expected utility. To find the optimal L we look to see where the highest point of the utility curve is.

As we'd expect:

- Max expected log(wealth) is at L=2.5. This is the optimal Kelly leverage factor.

- At twice optimal we expect to have log wealth of zero, equivalent to making no money at all (since starting wealth is 1).

- Not plotted here, but at SBF leverage (12.5) we'd have expected log(wealth) of <undefined> and have lost pretty much all of our money.
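These points can be sanity-checked without simulation. A minimal sketch, assuming the standard continuous-time approximation in which annual expected log growth at leverage L is Lμ − L²σ²/2 (the function names are my own, not part of the code above, and the daily-compounded simulation will only match this approximately):

```python
import numpy as np
import pandas as pd

ANN_RETURN = 0.1   # same annual mean as the simulation
ANN_STD_DEV = 0.2  # same annual standard deviation


def approx_log_growth(leverage, mu=ANN_RETURN, sigma=ANN_STD_DEV):
    # Continuous-time approximation to annual expected log growth at a given leverage
    return leverage * mu - 0.5 * (leverage * sigma) ** 2


def kelly_leverage(mu=ANN_RETURN, sigma=ANN_STD_DEV):
    # Leverage that maximises approx_log_growth: mean divided by variance
    return mu / sigma ** 2


# Same leverage grid as the plots, rounded to tidy up floating point noise
leverage_grid = np.round(np.arange(1.5, 5.1, 0.1), 1)
growth_curve = pd.Series(approx_log_growth(leverage_grid), index=leverage_grid)

print(kelly_leverage())                         # Kelly optimum, mean over variance
print(growth_curve.idxmax())                    # grid point with the highest growth
print(approx_log_growth(2 * kelly_leverage()))  # growth at twice Kelly
print(approx_log_growth(12.5))                  # growth at five times Kelly
```

Under this approximation the hump sits at mean over variance, growth falls back to zero at twice that leverage, and is negative beyond it.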

Utility function: Expected (wealth) [SBF?]

Now let's look at a linear utility function, since SBF noted that his utility was 'roughly close to linear'. Here our utility is just equal to our terminal wealth, so it's purely linear.

def expected_value(monte_return_streams,
                   leverage_factor = 1):
    series_of_account_values = get_series_of_final_account_values(
        monte_return_streams = monte_return_streams,
        leverage_factor = leverage_factor)
    # Linear utility: average terminal wealth across paths
    return np.mean(series_of_account_values)


leverage_to_plot = plot_over_leverage(monte_return_streams,
                                      expected_value)
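With linear utility there is a shortcut that avoids simulation. A rough sketch, assuming returns are independent across days as in the DGP above, so that the expectation of the compounded product is the product of the daily expectations (the function name is my own):

```python
BUSINESS_DAYS_IN_YEAR = 256
DAILY_RETURN = 0.1 / BUSINESS_DAYS_IN_YEAR  # daily mean used in the simulation
NUMBER_DAYS = 10 * BUSINESS_DAYS_IN_YEAR


def expected_terminal_wealth(leverage, daily_mean=DAILY_RETURN, n_days=NUMBER_DAYS):
    # E[prod(1 + L * r_t)] = (1 + L * mean)^n when the r_t are independent
    return (1 + leverage * daily_mean) ** n_days


for leverage in [1.0, 2.5, 5.0, 12.5]:
    print(leverage, expected_terminal_wealth(leverage))
```

Since the daily mean is positive, this expression rises with every increase in L, and the volatility does not enter it at all. Mean wealth on its own therefore never produces a hump in the curve.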

Utility function: Median(wealth)

- An investment that lost $1,000 99 times out of 100; and paid out $1,000,000 1% of the time

- An investment that is guaranteed to gain $9,000

def median_value(monte_return_streams,
                 leverage_factor = 1):
    series_of_account_values = get_series_of_final_account_values(
        monte_return_streams = monte_return_streams,
        leverage_factor = leverage_factor)
    # Linear utility again, but using the median across paths instead of the mean
    return np.median(series_of_account_values)

The spooky result here is that the optimal leverage is now 2.5, the same as the Kelly criterion.

Even with linear utility, if we use the median expectation, Kelly is the optimal strategy.

The reason why people prefer to use mean(log(wealth)) rather than median(wealth), even though they are equivalent, is that the former is more computationally attractive.
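One way to see the link: log is monotonic, so the median of log(wealth) is just the log of median(wealth), and when log(wealth) is roughly symmetric its mean sits close to its median. A small illustration on hypothetical lognormal wealth values (the sample and its parameters are made up for the demonstration, not taken from the simulation above):

```python
import numpy as np

rng = np.random.default_rng(42)
# Hypothetical terminal wealth: log(wealth) is Gaussian, so wealth itself is right skewed
wealth = np.exp(rng.normal(loc=1.0, scale=0.5, size=100_001))

median_of_log = np.median(np.log(wealth))
log_of_median = np.log(np.median(wealth))
mean_of_log = np.mean(np.log(wealth))
log_of_mean = np.log(np.mean(wealth))

print(median_of_log, log_of_median)  # the median commutes with log
print(mean_of_log)                   # close to both, because log(wealth) is symmetric
print(log_of_mean)                   # higher: the mean of wealth is pulled up by the right tail
```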

Note also the well known fact that Kelly also maximises the geometric return.

With Kelly we aren't really making any assumptions about the utility function: our assumption is effectively that the median is the correct expectations operator.

The entire discussion about utility is really a red herring. It's very hard to measure utility functions, and everyone probably does have a different one. I think it's much better to focus on expectations.

Utility function: Nth percentile(wealth)

Well, you might be thinking that SBF seems like a particularly optimistic kind of guy. He isn't interested in the median outcome (which is the 50th percentile). Surely there must be some percentile at which it makes sense to bet 5 times Kelly? Maybe he is interested in the 75th percentile outcome?

QUANTILE = .75


def value_quantile(monte_return_streams,
                   leverage_factor = 1):
    series_of_account_values = get_series_of_final_account_values(
        monte_return_streams = monte_return_streams,
        leverage_factor = leverage_factor)
    # Uses the module-level QUANTILE as the point of the distribution to evaluate
    return np.quantile(series_of_account_values, QUANTILE)

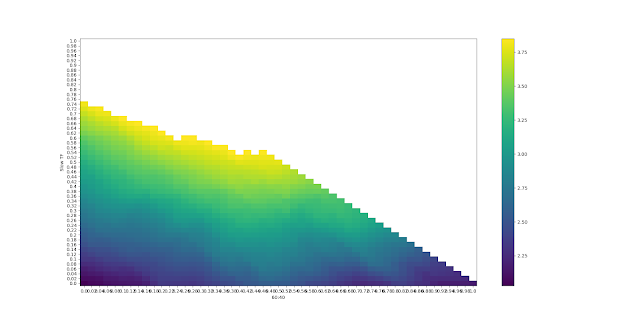

Now the optimal is around L=3.5. This is considerably higher than the Kelly max of L=2.5, but it is still nowhere near the SBF optimal L of 12.5.
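For a rough cross-check on the simulated optimum, here is a sketch under an assumption the simulation does not make: that wealth is lognormal with continuous rebalancing, so that log(wealth) after T years is Gaussian with mean (Lμ − L²σ²/2)T and standard deviation Lσ√T. Maximising the q-th quantile of that distribution over L gives L = μ/σ² + z_q/(σ√T), where z_q is the standard normal quantile. The function name is my own.

```python
from math import sqrt
from statistics import NormalDist

ANN_RETURN = 0.1
ANN_STD_DEV = 0.2
YEARS = 10


def approx_optimal_leverage(quantile, mu=ANN_RETURN, sigma=ANN_STD_DEV, years=YEARS):
    # Kelly leverage plus a tilt that grows with the quantile being targeted
    z_q = NormalDist().inv_cdf(quantile)
    return mu / sigma ** 2 + z_q / (sigma * sqrt(years))


for q in [0.5, 0.75, 0.9]:
    print(q, round(approx_optimal_leverage(q), 2))
```

Under this approximation the median gives back the Kelly leverage, and higher quantiles add a tilt that shrinks as the horizon lengthens. It is only an approximation to the daily-compounded simulation, so the figures will not match the plots exactly.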

Let's plot the utility curves for a bunch of different quantile points:

list_over_quantiles = []
quantile_ranges = np.arange(.4, 0.91, .1)
for QUANTILE in quantile_ranges:
    # value_quantile reads the module-level QUANTILE, which this loop rebinds
    leverage_to_plot = plot_over_leverage(monte_return_streams,
                                          value_quantile)
    list_over_quantiles.append(leverage_to_plot)

pd_list = pd.DataFrame(list_over_quantiles)
pd_list.index = quantile_ranges
pd_list.transpose().plot()

It's hard to see what's going on here, legend floating point representation notwithstanding, but you can hopefully see that the maximum L (the hump of each curve) gets higher as we go up the quantile scale, as do the curves themselves (as you would expect).

But at none of these quantiles are we anywhere near reaching an optimal L of 12.5. Even at the 90% quantile - evaluating something that only happens one in ten times - we have a maximum L of under 4.5.

Now there will be some quantile point at which L=12.5 is indeed optimal. Returning to my simple example:

- An investment that lost $1,000 99 times out of 100; and paid out $1,000,000 1% of the time

- An investment that is guaranteed to gain $9,000

... if we focus on outcomes that will happen less than one in a million times (the 99.9999% quantile and above) then yes sure, we'd prefer option 1.

So at what quantile point does a leverage factor of 12.5 become optimal? I couldn't find out exactly, since to look at extremely rare quantile points requires very large numbers of outcomes*. I actually broke my laptop before I could work out what the quantile point was.

* For example, if you want ten observations to accurately measure the quantile point, then for the 99.99% quantile you would need 10 * (1 / (1 - 0.9999)) = 100,000 outcomes.
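The arithmetic in that footnote generalises to any quantile. A small helper, with my own naming and the ten-observation target from the footnote as the default:

```python
def outcomes_needed(quantile, tail_observations=10):
    # Number of simulated outcomes so that, on average, tail_observations of them
    # fall beyond the given quantile; round() absorbs floating point error in 1 - quantile
    return round(tail_observations / (1 - quantile))


for q in [0.75, 0.99, 0.9999, 0.999999]:
    print(q, outcomes_needed(q))
```

Each extra nine in the quantile multiplies the number of paths by ten, and every path is ten years of daily returns, which is why the extreme quantiles get expensive so quickly.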

But even for a quantile of 99.99% (!), we still aren't at an optimal leverage of 12.5!

You can see that the optimal leverage is 8 (around 3.2 x Kelly), still way short of 12.5.

Summary

Note I am not saying I am smarter than SBF. On pure IQ, I am almost certainly much, much dumber. In fact, it's because I know I am not a genius that I'm not arrogant enough to completely follow or ignore the Kelly criterion without first truly understanding it.

Whilst this particular misunderstanding might not have brought down SBF's empire, it shows that really really smart people can be really dumb - particularly when they think that they are so smart they don't need to properly understand something before ignoring it*.

* Here is another example of him getting something completely wrong

Postscript (16th November 2022)

- 100% win $1

- 51% win $0 / 49% win $1'000'000

- An investment that lost $1,000 99 times out of 100; and paid out $1,000,000 1% of the time

- An investment that is guaranteed to gain $9,000

- An investment that lost $1 most of the time; and paid out $1,000,000 0.001% of the time

- An investment that is guaranteed to gain $5

- An investment that lost $5000 95.1% of the time, and makes $1 million or more 5% of the time.

- An investment that is guaranteed to gain $25,000
