This is part two in a series of posts about using optimisation to get the best possible portfolio given a relatively small amount of capital.

- Part one is here (where I discussed the idea of using dynamic optimisation to handle this problem). You should read that now, if you haven't already done so.

- In this post I show you and explain the code and methodology used for the backtesting of this idea, and look at the results.

- In the next post I look at a way to find the best static subset of markets given a particular account size. This turns out to be the best method.

- In the final post I try a heuristic ranking process.

The code is in my open source backtesting engine, pysystemtrade. However, even if you don't use that, I'll be showing you snippets of Python you can steal for your own trading systems.

TLDR: This doesn't work

The test case

As a test case I generated a portfolio with 20 instruments and a puny $25,000 in capital. This really isn't enough capital for that many instruments! As proof, here are the maximum positions taken in the original system without any optimisation (using only the period when I have data for all 20 instruments; much earlier for example when only Corn was trading it would have been able to take a position of 3 contracts):

system.risk.get_original_buffered_rounded_positions_df()[datetime.datetime(2015,1,1):].abs().max()

AUD 0.0

BUND 0.0

COPPER 0.0

CORN 0.0

CRUDE_W 0.0

EDOLLAR 1.0

EUROSTX 1.0

GAS_US 0.0

GBP 0.0

GOLD 0.0

LEANHOG 0.0

LIVECOW 0.0

MXP 1.0

OAT 0.0

SP500 0.0

US10 0.0

US2 2.0

V2X 3.0

VIX 0.0

WHEAT 0.0

You can see that we only have adequate discretisation of positions in a couple of contracts, and no position at all in many of them. I'd be unable to use the forecast mapping technique I discussed here to fix this problem.

Get a vector of desired portfolio weights

So the first step in the optimisation is to get the current vector of desired contract positions, expressed in portfolio weights. I've written the code such that you can do the optimisation for a specific date; if no date is given it uses the last row of positions. This is handy for the production implementation, which will only have to do a single optimisation every night (but that's in the next post).

system.expectedReturns.get_portfolio_weights_for_relevant_date()

{'AUD': 0.0893, 'BUND': 0.695, 'COPPER': 0.0472, 'CORN': 0.219

What do these numbers mean, and where do they come from? Let's take Corn as an example. The final optimal position for Corn is 0.23 contracts:

system.portfolio.get_notional_position("CORN").tail(1)

index

2021-03-08 0.2284

Of course we can't hold 0.23 contracts, but that is why we are here, with me writing and you reading this post.

Now what is a single futures contract for Corn worth? It's going to be the price ($481 at the end of this data series), times the value per price point (fixed at $50), multiplied by the FX rate ($1 = $1 here).

We can get this value directly:

system.expectedReturns.get_baseccy_value_per_contract("CORN").tail(1)

index

2021-03-08 24062.5

And as a proportion of our capital ($25,000) that's going to be just under 1:

system.expectedReturns.get_per_contract_value_as_proportion_of_capital("CORN").tail(1)

index

2021-03-08 0.9625

Since we want to hold 0.23 of a contract, our desired portfolio weight is going to be 0.23 * 0.96 = 0.22. This brings us back to where we started: the desired portfolio weight in Corn is (long) 0.22 units of our trading capital (a short position would show as a negative portfolio weight).
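The Corn arithmetic above can be reproduced in a few lines. This is only a sketch using the numbers quoted in this post; the variable names are mine and are not taken from pysystemtrade.

```python
# Figures quoted in the post for Corn at the end of the data series
price = 481.25              # last price
value_per_point = 50.0      # contract multiplier
fx_rate = 1.0               # USD instrument, USD account
capital = 25_000.0          # trading capital
desired_contracts = 0.2284  # unrounded optimal position

# Value of one contract in base currency
contract_value = price * value_per_point * fx_rate

# Portfolio weight represented by one whole contract
per_contract_weight = contract_value / capital

# Desired (fractional) position expressed as a portfolio weight
desired_weight = desired_contracts * per_contract_weight

print(contract_value, per_contract_weight, round(desired_weight, 4))
```

A short position would simply give a negative `desired_weight`.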

Estimate a covariance matrix

We now need a covariance matrix, which we construct from a correlation and a standard deviation.

In the last post I debated whether I should use the correlation of trading strategy (subsystem) returns or of underlying instrument returns. When I tested this, I found that the latter was much better at targeting the correct level of risk (I discuss this later in the post) and had a lower tracking error (which was the main criterion I was worried about), whilst not producing more unstable portfolios or higher trading costs (which might have been expected, given the shorter lookback I used for estimating the latter; more on that in a moment).

Also there were certain characteristics of the trading strategy subsystem correlation that I didn't like, and I would have had to re-estimate them completely (having already estimated them to use for calculating instrument weights and IDM), which seemed a bit dumb [those characteristics will become clear in a second].

Here's the configuration for the instrument return correlation estimation (some boilerplate removed):

# small system optimisation

small_system:

  instrument_returns_correlation:

    func: sysquant.estimators.correlation_over_time.correlation_over_time_for_returns

    frequency: "W"

    using_exponent: True

    ew_lookback: 25

    cleaning: True

    floor_at_zero: False

    forward_fill_price_index: True

    offdiag: 0.0

    clip: 0.90

    interval_frequency: 1M

Most of this is what I usually do, but note the relatively short exponential weight lookback ew_lookback (a half life of 25 weeks, i.e. about 6 months), compared to an effectively infinite lookback for subsystem correlations. In terms of predicting the correlation of instrument returns, a shorter lookback makes sense as they are more unstable (think about the stock / bond correlation and how it changes between inflationary and non inflationary environments).

We don't floor_at_zero. I want to know if correlations are negative, unlike for trading subsystems where a negative correlation would result in an inflated IDM and unstable instrument weights. Remember I won't allow portfolio weights to change sign in the forward optimisation, which means I'm less concerned about 'spreading' effects.

However we do clip at 0.90. This means any correlation above 0.9 or below -0.9 will be clipped. This makes it less likely the optimisation will do something crazy.

We use cleaning which means we replace any nans (handy since we only calculate correlations once a month (interval_frequency: 1M) in the backtest [note: will need to be done every day in production], so we can start trading instruments that have just entered the dataset in the last couple of weeks). However any missing items in the off diagonal are replaced with offdiag =0.0 rather than the default of 0.99 (the diagonal is all 1 of course). Using 0.99 where we didn't have an estimate makes sense if a high correlation will penalise you, as for instrument weighting (where it will result in a lower weight for new instruments). But here it will just cause crazy behaviour, so using 0.0 makes more sense.
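To make the cleaning and clipping concrete, here is a minimal sketch of the idea in plain numpy. It is not the pysystemtrade implementation: the function name and the order of operations (fill missing values, then clip, then reset the diagonal) are my assumptions.

```python
import numpy as np

def clean_and_clip_correlation(corr, offdiag=0.0, clip=0.90):
    """Replace missing correlations with `offdiag`, then clip to [-clip, clip]."""
    cleaned = np.array(corr, dtype=float)        # work on a copy
    cleaned[np.isnan(cleaned)] = offdiag         # missing estimates -> offdiag
    cleaned = np.clip(cleaned, -clip, clip)      # tame extreme correlations
    np.fill_diagonal(cleaned, 1.0)               # the diagonal is always 1
    return cleaned

# Toy matrix: one missing pair, one very high and one very negative correlation
raw = np.array([[1.00, 0.97, np.nan],
                [0.97, 1.00, -0.95],
                [np.nan, -0.95, 1.00]])

cleaned = clean_and_clip_correlation(raw)
print(cleaned)
```

With `offdiag=0.0` a brand new instrument is treated as uncorrelated with everything else until an estimate is available.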

Here's (some) of the final correlation matrix:

c = system.expectedReturns.get_correlation_matrix()

c.subset(c.columns[:10]).as_pd().round(2)

AUD BUND COPPER CORN CRUDE_W EDOLLAR EUROSTX GAS_US GBP GOLD

AUD 1.00 -0.22 0.59 0.26 0.50 -0.24 0.64 -0.13 0.65 0.39

BUND -0.22 1.00 -0.23 -0.09 -0.32 0.64 -0.41 -0.01 -0.22 0.16

COPPER 0.59 -0.23 1.00 0.13 0.54 -0.52 0.50 -0.21 0.40 0.06

CORN 0.26 -0.09 0.13 1.00 0.37 -0.02 0.27 0.10 0.21 -0.10

CRUDE_W 0.50 -0.32 0.54 0.37 1.00 -0.44 0.64 0.15 0.34 -0.14

EDOLLAR -0.24 0.64 -0.52 -0.02 -0.44 1.00 -0.38 -0.07 -0.17 0.36

EUROSTX 0.64 -0.41 0.50 0.27 0.64 -0.38 1.00 -0.15 0.45 0.03

GAS_US -0.13 -0.01 -0.21 0.10 0.15 -0.07 -0.15 1.00 0.02 -0.30

GBP 0.65 -0.22 0.40 0.21 0.34 -0.17 0.45 0.02 1.00 0.41

GOLD 0.39 0.16 0.06 -0.10 -0.14 0.36 0.03 -0.30 0.41 1.00

Standard deviation is easy, since I already calculate this, but I need to make sure the estimates are in annualised % space:

system.positionSize.calculate_daily_percentage_vol("SP500").tail(1)

index

2021-03-08 1.219869

# That means the vol is 1.22% a day

system.expectedReturns.annualised_percentage_vol("SP500").tail(1)

index

2021-03-08 0.195179

# This is 19.5% a year

# Here are some more:

system.expectedReturns.get_stdev_estimate()

{'AUD': 0.131, 'BUND': 0.0602, 'COPPER': 0.3313, 'CORN': 0.1492 ....
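The conversion from daily to annualised vol is a square-root-of-time scaling. The sketch below assumes 256 business days in a year (so a multiplier of 16), which reproduces the SP500 figure shown above; the constant name is mine.

```python
# Assumption: 256 business days a year, so the annualisation factor is 16
BUSINESS_DAYS_IN_YEAR = 256

daily_pct_vol = 1.219869   # SP500 daily vol in percent, from the output above

# Scale by the square root of time, then convert from percent to a fraction
annualised_vol = daily_pct_vol * BUSINESS_DAYS_IN_YEAR ** 0.5 / 100.0

print(round(annualised_vol, 6))
```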

Now we can get the covariance matrix

system.expectedReturns.get_covariance_matrix()

AUD BUND COPPER ....

AUD 0.017342 -0.001743 0.025895 ....
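For anyone not using pysystemtrade, a covariance matrix can be built from a correlation matrix and a vector of standard deviations as follows. This sketch uses the rounded AUD, BUND and COPPER figures printed above, so the results will only approximately match the full precision output.

```python
import numpy as np

# Rounded annualised standard deviations for AUD, BUND, COPPER (from above)
stdev = np.array([0.131, 0.0602, 0.3313])

# Rounded correlations for the same three instruments (from the matrix above)
corr = np.array([[1.00, -0.22, 0.59],
                 [-0.22, 1.00, -0.23],
                 [0.59, -0.23, 1.00]])

# covariance[i, j] = stdev[i] * stdev[j] * corr[i, j]
covariance = np.outer(stdev, stdev) * corr

print(covariance.round(6))
```

The diagonal holds the variances, so the AUD entry is simply 0.131 squared.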

The risk aversion coefficient is just a scaling factor that won't affect our results, so I used the default of economists everywhere, 2.0:

small_system:

risk_aversion_coefficient: 2.0

Calculate expected returns

We're now ready to run the optimisation backwards to derive expected returns:

system.expectedReturns.get_implied_expected_returns()

{'AUD': 0.0267, 'BUND': 0.0101, 'COPPER': 0.0473, 'CORN': 0.0114

What do these numbers mean? Well take Corn. What this is saying is that if we did a portfolio optimisation with the covariance matrix above, and a risk aversion of 2.0, with an expected return for Corn of 1.14% per year (plus all the other expected returns) we'd get the portfolio weight we started with earlier: 0.22 of our portfolio.

This backward optimisation function is very simple (stripping away some pysystemtrade gunk):

import numpy as np

def calculate_implied_expected_returns_given_np(aligned_weights_as_np: np.ndarray,

                                                covariance_as_np_array: np.ndarray,

                                                risk_aversion: float = 2.0):

    # Reverse optimisation: expected returns = risk aversion * (weights . covariance)

    expected_returns_as_np = risk_aversion*aligned_weights_as_np.dot(covariance_as_np_array)

    return expected_returns_as_np
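A quick way to convince yourself that the backward step does what is claimed is a round trip: derive the implied returns from some weights, then run an unconstrained mean-variance optimisation forward and check you get the same weights back. This is a sketch with my own function names and a three instrument covariance matrix built from the rounded numbers above; it ignores all of the constraints discussed later.

```python
import numpy as np

def implied_expected_returns(weights, covariance, risk_aversion=2.0):
    # Backward step: mu = lambda * Sigma . w
    return risk_aversion * covariance.dot(weights)

def unconstrained_optimal_weights(expected_returns, covariance, risk_aversion=2.0):
    # Forward step without constraints: w = Sigma^-1 . mu / lambda
    return np.linalg.solve(covariance, expected_returns) / risk_aversion

# Rounded inputs for AUD, BUND, COPPER taken from the post
stdev = np.array([0.131, 0.0602, 0.3313])
corr = np.array([[1.00, -0.22, 0.59],
                 [-0.22, 1.00, -0.23],
                 [0.59, -0.23, 1.00]])
covariance = np.outer(stdev, stdev) * corr
weights = np.array([0.0893, 0.6953, 0.0472])

mus = implied_expected_returns(weights, covariance)
recovered = unconstrained_optimal_weights(mus, covariance)

print(mus, recovered)
```

The recovered weights match the inputs, which is why the choice of risk aversion doesn't matter as long as the same value is used in both directions.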

Per contract values

Before we run the forward optimisation, which will take account of integer contract sizing and various other constraints, we need to do some preparatory work. First of all we need the value of a single contract in each future, expressed as a proportion of trading capital. Remember we already worked that out for Corn:

system.expectedReturns.get_per_contract_value_as_proportion_of_capital("CORN").tail(1)

index

2021-03-08 0.9625

This means we can only have a portfolio weight in Corn of 0, 0.9625, 2*0.9625 and so on representing 0,1, 2 ... whole contracts (and also on the short side). Here it is for some other instruments:

system.optimisedPositions.get_per_contract_value()

{'AUD': 3.0616, 'BUND': 8.147014864, 'COPPER': 4.072, 'CORN': 0.9625,

Maximum portfolio weights

This optimisation is going to be very slow, so we need to restrict the space we're working in as much as possible. Here are the maximum weights allowed (absolute, so these act as both long and short constraints):

system.optimisedPositions.get_maximum_portfolio_weight_at_date()

{'AUD': 1.456, 'BUND': 3.182, 'COPPER': 0.578, 'CORN': 1.285

This means in practice we can't have a long or short of more than one contract in Corn: each contract is worth 0.9625 in portfolio weight units, and 2*0.9625 = 1.925 > 1.285. But where do these numbers like 1.285 come from?

Well first of all, we need to work out what the portfolio weight would be if we had 100% of our risk capital in a given instrument. I call this the risk multiplier. And it's equal to the ratio risk target / instrument risk.

Take Corn for example, which we know from earlier has an annualised percentage risk of 14.92%. The risk target default for my system is 20%:

system.config.percentage_vol_target

20.0

So the risk multiplier is going to be 20/14.92, or to be precise:

system.optimisedPositions.get_risk_multiplier_series("CORN").tail(1)

index

2021-03-08 1.339752

Now of course we're unlikely to want to have 100% of our portfolio risk in a given instrument, especially if we have 20 instruments to pick from.

I think the maximum risk you would want to take for a single instrument is the product of:

- The ratio of maximum to average forecast (default 2.0)

- The IDM (capped at 2.5 at the end of this series)

- The current maximum instrument weight (9.6% at the end of the series)

- A 'risk shifting multiplier' (default 2.0) to reflect the fact you want to allow risk to shift between instruments as part of the optimisation

That comes to 96% of portfolio weight in this example.

Note that for very large portfolios of instruments, of the sort I'm planning to ultimately play with, this may result in numbers that are too small; since practically even if we have hundreds of instruments we might not be able to hold positions in all that many of them. For large numbers of instruments I would use a maximum risk which is the product of an arbitrary target (10%) and the ratio of maximum to average forecast (2.0), coming in at 20%.

Oh and you can configure this behaviour:

small_system:

max_risk_per_instrument:

risk_shifting_multiplier: 2.0

max_risk_per_instrument_for_large_instrument_count: 0.1

If we multiply 96% by the risk multiplier of 1.34 for Corn we get 1.28: the maximum portfolio weight allowed for Corn.
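Putting the Corn numbers together gives the following sketch. The variable names are mine, and because it uses the rounded inputs quoted in the post it lands very slightly away from the 1.285 printed earlier.

```python
# Inputs quoted in the post (rounded)
risk_target = 20.0               # percentage vol target
instrument_risk = 14.92          # Corn annualised percentage vol
max_to_average_forecast = 2.0
idm = 2.5                        # capped value at the end of the series
max_instrument_weight = 0.096    # 9.6% at the end of the series
risk_shifting_multiplier = 2.0
per_contract_weight = 0.9625     # one Corn contract as a portfolio weight

# Portfolio weight if 100% of risk capital were in Corn
risk_multiplier = risk_target / instrument_risk

# Maximum share of portfolio risk allowed in one instrument
max_risk = (max_to_average_forecast * idm
            * max_instrument_weight * risk_shifting_multiplier)

# Maximum absolute portfolio weight, and the whole contracts that allows
max_weight = max_risk * risk_multiplier
max_contracts = int(max_weight // per_contract_weight)

print(round(max_risk, 2), round(max_weight, 3), max_contracts)
```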

Original portfolio weights

We're going to want the original, unoptimised, portfolio weights since one of my constraints is that we can't change signs between the backward and forward optimisations. As we've already seen:

system.optimisedPositions.original_portfolio_weights_for_relevant_date()

{'AUD': 0.0893, 'BUND': 0.6953, 'COPPER': 0.0472, 'CORN': 0.2198 ....

Previous portfolio weights

I'm going to apply a cost penalty to the optimisation, which means I need to know the previous portfolio weights (the change in weights will result in trades, and hence costs, which I want to penalise for - this is a substitute for the buffering method I currently use). In production these will be derived from my actual set of current positions, but in the backtest we will run the backtest forward day by day, using the previous days optimised weights.

Of course in the very first run of the backtest there won't be any previous weights, so we just seed with all zeros.

Here's a snippet from the optimised positions stage (system.optimisedPositions):

def get_optimised_weights_df(self) -> pd.DataFrame:

    common_index = list(self.common_index())

    # Start with no positions

    previous_optimal_weights = portfolioWeights.allzeros(self.instrument_list())

    weights_list = []

    for relevant_date in common_index:

        # pass the previous weights in

        optimal_weights = self.get_optimal_weights_with_fixed_contract_values(relevant_date,

                                                                              previous_weights=previous_optimal_weights)

        weights_list.append(optimal_weights)

        previous_optimal_weights = copy(optimal_weights)

Cost calculation

We want to know the costs of adjusting our positions; and since we're working in portfolio weight space we want the costs in that space as well. First of all we want the $ cost of trading each contract:

self = system.optimisedPositions

instrument_code = "CORN"

raw_cost_data = self.get_raw_cost_data(instrument_code)

instrumentCosts slippage 0.125000 block_commission 2.900000 percentage cost 0.000000 per trade commission 0.000000

multiplier = self.get_contract_multiplier(instrument_code)

50.0

last_price = self.get_final_price(instrument_code)

481.25

fx_rate = self.get_last_fx_rate(instrument_code)

1.0

cost_in_instr_ccy = raw_cost_data.calculate_cost_instrument_currency(1.0, multiplier, last_price)

9.15

cost_in_base_ccy = fx_rate * cost_in_instr_ccy

9.15

Now we translate that to a proportion of capital and (optionally) apply a cost multiplier:

cost_per_contract = self.get_cost_per_contract_in_base_ccy(instrument_code)

trading_capital = self.get_trading_capital()

cost_multiplier = self.cost_multiplier()

cost_multiplier * cost_per_contract / trading_capital

0.000366

$9.15 is 0.0366% of $25000. And we can configure the multiplier in the backtest .yaml file:

small_system:

cost_multiplier: 1.0

We now need to translate that into the cost of adjusting our portfolio weight by 100%, which will depend on the size of the contract. Remember that a Corn contract is worth 0.9625 of our portfolio value. So to adjust our portfolio weight by 100% would cost 0.000366 / 0.9625 = 0.00038.

But we're going to be calculating the expected return in annualised terms and subtracting the cost of trading. So we need to annualise this figure. Our frequency of trading will vary but for simplicity I will assume I trade once a month (roughly the average holding period I use), so we multiply this figure by 12: 0.00038 * 12 = 0.00456.
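Here is the whole Corn cost calculation in one place. It is a sketch with my own variable names; in particular, composing the $9.15 from slippage times multiplier plus the block commission is my assumption about how that figure arises, although it does reproduce the number shown above.

```python
# Figures quoted in the post for Corn
slippage = 0.125             # in price points
block_commission = 2.90      # $ per contract
multiplier = 50.0
fx_rate = 1.0
trading_capital = 25_000.0
cost_multiplier = 1.0
per_contract_weight = 0.9625
trades_per_year = 12         # assumes roughly one trade a month

# $ cost of trading one contract (assumed composition, see above)
cost_per_contract = fx_rate * (slippage * multiplier + block_commission)

# Cost of trading one contract as a proportion of capital
cost_as_proportion = cost_multiplier * cost_per_contract / trading_capital

# Cost of changing the portfolio weight by 100%, then annualised
cost_per_unit_weight = cost_as_proportion / per_contract_weight
annualised_cost = cost_per_unit_weight * trades_per_year

print(cost_per_contract, cost_as_proportion, round(annualised_cost, 5))
```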

Maximum risk constraint

We're nearly there! In my current system I use an exogenous risk constraint to ensure my expected risk on any given day can never exceed twice my target risk (20%). Since I'm doing an optimisation, it seems to make sense to include this as well. Naturally this can be configured:

small_system:

max_risk_ceiling_as_fraction_normal_risk: 2.0

So with a target risk of 20%, the maximum risk can be 20%*2 = 40%. As I'll be using variance in my optimisation, it makes sense to precalculate this as a variance limit (which will be 0.4^2 = 0.16):

system.optimisedPositions.get_max_risk_as_variance()

0.16000000

Amongst other things, this will allow me to use a higher IDM in my production system without being quite as concerned about my peak risk.

The forward optimisation: Creating the grid

We now have everything we need! First we need to create the grid, as this will be a brute force optimisation. The code is here.

First we need to generate the constraints which will determine the limits of the grid.

The constraints we're using are:

- Maximum risk capital in a single market

- Positions can't change sign from the original, unoptimised weights.

There are a couple of other constraints I discussed last time that I won't be testing in this post, since they relate to the production system:

- A reduce only list of instruments (which could be instruments that are currently too illiquid or expensive to trade)

- A no trade list of instruments

Here are the constraints for the first few instruments (AUD, BUND, COPPER, CORN). These are all long markets, so the constraints are just a lower bound of zero, and an upper bound from the maximum risk per instrument:

[(0.0, 1.4564), (0.0, 3.1825), (0.0, 0.5788), (0.0, 1.2848),...

We can confirm the upper bounds are the same as the maximum risk weights:

system.optimisedPositions.get_maximum_portfolio_weight_at_date()

{'AUD': 1.456, 'BUND': 3.182, 'COPPER': 0.578, 'CORN': 1.285

We're now ready to generate the grid. We do this in jumps of per_contract_value (so 0.9625 for Corn) making sure we don't break the constraints.

Here's the grid points for the first few instruments (AUD, BUND, COPPER, CORN, CRUDE_W and EDOLLAR):

[[0.0], [0.0], [0.0], [0.0, 0.9625], [0.0], [0.0, 9.886, 19.772]....

(Note we haven't defined the grid completely yet, just the points on each dimension)

For Corn the grid points are [0.0, 0.9625] which correspond to being long 0 or 1 contracts, as we already noted above. For Eurodollar, we can be long 0, 1 or 2 contracts. Not shown, but we can also hold MXP (0 or 1), US 2 year (0 to 4 contracts), and V2X (short 0,1, or 2 contracts).

For AUD, BUND, COPPER, CRUDE_W and all the other markets: we can't take any positions. Even a single contract would exceed the maximum risk limits. These markets are just too big compared to our capital.

Now that's partly because I've deliberately starved this portfolio of capital to make it a more extreme example (it also speeds up the optimisation!). However I fully expect there to be instruments in my actual production portfolio which I never hold positions in. But this is fine. We've got an expected return for them, and that will be used to inform our positions in the markets we will trade. For example we want to be long US10 year bonds. All other things being equal, we'll go longer US2 year and Eurodollar futures.

To actually generate all the possible places on the grid we use itertools:

grid_possibles = itertools.product(*grid_points)
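The grid construction can be sketched as follows. This is not the pysystemtrade code: the helper name is mine, the AUD, COPPER and CORN bounds and contract values are the ones printed above, and the fourth instrument is a made-up short-only market (bounds and contract value are hypothetical) to show how a negative lower bound behaves.

```python
import itertools
import math

def grid_points_for_instrument(lower, upper, per_contract_value):
    """Portfolio weights for every whole number of contracts within the bounds."""
    min_contracts = math.ceil(lower / per_contract_value)
    max_contracts = math.floor(upper / per_contract_value)
    return [n * per_contract_value
            for n in range(min_contracts, max_contracts + 1)]

# (lower bound, upper bound) and per contract value for each instrument
bounds = [(0.0, 1.4564), (0.0, 0.5788), (0.0, 1.2848), (-0.25, 0.0)]
per_contract_values = [3.0616, 4.072, 0.9625, 0.116107]

grid_points = [grid_points_for_instrument(lower, upper, value)
               for (lower, upper), value in zip(bounds, per_contract_values)]

# Every combination of per-instrument points is one candidate portfolio
all_candidates = list(itertools.product(*grid_points))

print(grid_points)
print(len(all_candidates))
```

The number of candidates is the product of the number of points on each dimension, which is why the search slows down so quickly as capital or the number of instruments grows.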

The forward optimisation: The value function

Here's the final value function. We want to minimise this, so it returns a negative expected annual return, adjusted for a cost and variance penalty (there's no reason why we shouldn't maximise, it's just for consistency with my other optimisation functions).

def neg_return_with_risk_penalty_and_costs(weights: list,

                                           optimisation_parameters: optimisationParameters)\

        -> gridSearchResults:

    weights = np.array(weights)

    risk_aversion = optimisation_parameters.risk_aversion

    covariance_as_np = optimisation_parameters.covariance_as_np

    max_risk_as_variance = optimisation_parameters.max_risk_as_variance

    variance_estimate = float(variance(weights, covariance_as_np))

    if variance_estimate > max_risk_as_variance:

        return gridSearchResults(value = SUBOPTIMAL_PORTFOLIO_VALUE, weights=weights)

    risk_penalty = risk_aversion * variance_estimate /2.0

    mus = optimisation_parameters.mus

    estreturn = float(weights.dot(mus))

    cost_penalty = _calculate_cost_penalty(weights, optimisation_parameters)

    value_to_minimise = -(estreturn - risk_penalty - cost_penalty)

    result = gridSearchResults(value = value_to_minimise,

                               weights=weights)

    return result

def _calculate_cost_penalty(weights: np.array,

                            optimisation_parameters: optimisationParameters):

    cost_as_np_in_portfolio_weight_terms= optimisation_parameters.cost_as_np_in_portfolio_weight_terms

    previous_weights_as_np = optimisation_parameters.previous_weights_as_np

    if previous_weights_as_np is arg_not_supplied:

        cost_penalty = 0.0

    else:

        change_in_weights = weights - previous_weights_as_np

        trade_size = abs(change_in_weights)

        cost_penalty = np.nansum(cost_as_np_in_portfolio_weight_terms * trade_size)

    return cost_penalty

Note also that if the variance is higher than our maximum (0.16, derived from a standard deviation limit of 40%) we return a massively positive number to ensure this grid point isn't selected.

We return both the value and the weights: since itertools.product returns a lazy iterator, we won't know which weights generated the best (lowest) value unless the two are returned together.

The forward optimisation: to process Pool, or not process Pool

All we need to do now is apply the value function to all possible elements in the grid. We can do this using a parallel pool, or with just a vanilla map function:

if use_process_pool:

    with ProcessPoolExecutor(max_workers = 8) as pool:

        results = pool.map(

            neg_return_with_risk_penalty_and_costs,

            grid_possibles,

            itertools.repeat(optimisation_parameters),

        )

else:

    results = map(neg_return_with_risk_penalty_and_costs,

                  grid_possibles,

                  itertools.repeat(optimisation_parameters))

results = list(results)

list_of_values = [result.value for result in results]

optimal_value_index = list_of_values.index(min(list_of_values))

optimal_weights_as_list = results[optimal_value_index].weights

max_workers works best when set to the number of CPU cores you have (I have 8).

The optimal weights we return are just the grid points at which the value function is minimised.
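If you want to play with the idea without pysystemtrade, here is a self-contained toy version of the whole forward optimisation for two made-up instruments. Everything in it (the covariance matrix, desired weights, contract sizes, costs, and the stand-in constant for the rejected portfolio value) is hypothetical, and it uses a plain min() over the grid rather than a process pool.

```python
import itertools
import numpy as np

REJECTED_VALUE = 1e9   # stand-in for a "massively positive" value

def value_to_minimise(weights, mus, covariance, costs, previous_weights,
                      risk_aversion=2.0, max_variance=0.16):
    weights = np.array(weights)
    variance = float(weights.dot(covariance).dot(weights))
    if variance > max_variance:
        return REJECTED_VALUE                      # breaks the risk ceiling
    risk_penalty = risk_aversion * variance / 2.0
    cost_penalty = float(np.sum(costs * np.abs(weights - previous_weights)))
    return -(float(weights.dot(mus)) - risk_penalty - cost_penalty)

# Hypothetical two instrument problem
covariance = np.array([[0.04, 0.01],
                       [0.01, 0.09]])
desired_weights = np.array([0.3, 0.2])             # unrounded target
mus = 2.0 * covariance.dot(desired_weights)        # backward optimisation
costs = np.array([0.0005, 0.0005])                 # annualised, per unit weight
previous_weights = np.zeros(2)                     # first day: no positions

# Whole contracts only: 0.5 and 0.4 of capital per contract respectively
grid_points = [[0.0, 0.5, 1.0], [0.0, 0.4, 0.8]]

best_weights = min(
    itertools.product(*grid_points),
    key=lambda w: value_to_minimise(w, mus, covariance, costs, previous_weights),
)

print(best_weights)
```

Using min() with a key keeps only the best candidate in memory rather than a full list of results, which may matter once the grid gets large.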

Results of a single optimisation

Here's the result of our optimisation. The first column is the original portfolio weights, and the second is the optimised weights:

AUD 0.089396 0.000000

BUND 0.695372 0.000000

COPPER 0.047298 0.000000

CORN 0.219855 0.000000

CRUDE_W 0.080358 0.000000

EDOLLAR 2.128404 9.886000

EUROSTX 0.157784 0.000000

GAS_US -0.116683 0.000000

GBP 0.290223 0.000000

GOLD -0.097014 0.000000

LEANHOG -0.045693 0.000000

LIVECOW -0.155092 0.000000

MXP 0.210729 0.930000

OAT 0.778399 0.000000

SP500 0.076195 0.000000

US10 0.300379 0.000000

US2 4.941952 8.827813

V2X -0.030930 -0.116107

VIX -0.007675 0.000000

WHEAT 0.008440 0.000000

This might make more sense in contract space:

original optimised buffered

AUD 0.03 0.0 0.0

BUND 0.09 0.0 0.0

COPPER 0.01 0.0 0.0

CORN 0.23 0.0 0.0

CRUDE_W 0.03 0.0 0.0

EDOLLAR 0.22 1.0 0.0

EUROSTX 0.09 0.0 0.0

GAS_US -0.11 0.0 0.0

GBP 0.08 0.0 0.0

GOLD -0.01 0.0 0.0

LEANHOG -0.03 0.0 0.0

LIVECOW -0.08 0.0 0.0

MXP 0.23 1.0 0.0

OAT 0.10 0.0 0.0

SP500 0.01 0.0 0.0

US10 0.06 0.0 0.0

US2 0.56 1.0 1.0

V2X -0.27 0.0 0.0

VIX -0.01 -1.0 0.0

WHEAT 0.01 0.0 0.0

The first column is the original desired position in contract space, and the last column is that position rounded and buffered, i.e. what the system would hold without any optimisation: just a single US 2 year futures contract.

The second column is the optimised contract position.

Remember the instruments we could take positions in were: Corn, Eurodollar, MXP, US 2 year, and V2X. We haven't got a position in Corn, but our desired position is just 0.23 of a contract. We just aren't that confident in Corn. However we've taken a full contract position in Eurodollar even though it has a desired position of just 0.22, probably because some risk has been displaced from the other bond markets we can't hold anything in but would also want to be long. We're also shorter in V2X, again probably because the desired long stock and short VIX position have been displaced here. And this may also be true for MXP.

Position targeting

It's quite useful - I think - to look at a time series of positions (original unrounded, rounded & buffered, and optimised using a cost penalty). This will give us a feel for how well our positions are tracking the original positions, and for how the cost penalty compares with using the buffer.

instrument_code = "CORN"

x = system.portfolio.get_notional_position(instrument_code)

y = system.risk.get_original_buffered_rounded_position_for_instrument(instrument_code)

z = system.optimisedPositions.get_optimised_position_df()[instrument_code]

to_plot = pd.concat([x,y,z], axis=1)

to_plot.columns = ["original", "rounded", "optimal"]

to_plot.plot()

You can see that in the early days, when we have fewer markets, things are tracking pretty well for both the rounded and optimal position. Let's zoom in on the early 2000s:

The rounded position flips between 0 and -1 as the original position moves around -0.5, but the optimised position is more steadfast holding a constant short. And in 2000 it goes more dramatically short, partly reflecting a stronger signal, but also displacing some risk from other instruments.

More recently:

Here it's the optimisation that is trading more.

If we look at the annualised turnover for the rounded and optimised positions respectively we get the following:

list_of_instruments = system.get_instrument_list()

from syscore.pdutils import turnover

for instrument_code in list_of_instruments:

    natural_position = system.portfolio.get_notional_position(instrument_code)

    norm_pos = natural_position.abs().mean()

    rounded_position = system.risk.get_original_buffered_rounded_position_for_instrument(instrument_code)

    optimised_position = system.optimisedPositions.get_optimised_position_df()[instrument_code]

    rounded_position_turnover = turnover(rounded_position, norm_pos)

    optimised_position_turnover = turnover(optimised_position, norm_pos)

    print("%s Rounded %.1f optimised %.1f" % (instrument_code, rounded_position_turnover, optimised_position_turnover))

AUD Rounded 2.1 optimised 2.4

BUND Rounded 0.0 optimised 0.0

COPPER Rounded 1.4 optimised 0.0

CORN Rounded 3.9 optimised 4.9

CRUDE_W Rounded 5.1 optimised 1.3

EDOLLAR Rounded 4.6 optimised 9.4

EUROSTX Rounded 5.1 optimised 1.8

GAS_US Rounded 3.4 optimised 1.4

GBP Rounded 1.1 optimised 1.5

GOLD Rounded 6.0 optimised 3.0

LEANHOG Rounded 4.2 optimised 2.2

LIVECOW Rounded 5.2 optimised 3.0

MXP Rounded 5.6 optimised 2.8

OAT Rounded 0.0 optimised 0.0

SP500 Rounded 0.0 optimised 0.0

US10 Rounded 4.8 optimised 2.3

US2 Rounded 4.0 optimised 1.5

V2X Rounded 4.9 optimised 1.3

VIX Rounded 0.0 optimised 0.0

WHEAT Rounded 4.1 optimised 1.3

These turnover figures are all quite low, because of the 'lumpiness' of positions you will get with insufficient capital. But there is no evidence that the optimisation is systematically increasing turnover (and thus costs) compared to the simpler method of using buffering on rounded positions. If anything turnover is on average a little lower in the optimised positions.
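For readers without pysystemtrade, a turnover figure of this kind can be approximated as the average daily absolute change in position, normalised by a typical position size and annualised. The sketch below is my own simplified version and is an assumption about the general approach, not a copy of syscore.pdutils.turnover; the 256 day year and the toy position series are also assumptions.

```python
import pandas as pd

BUSINESS_DAYS_IN_YEAR = 256   # assumption

def annualised_turnover(position: pd.Series, normalising_position: float) -> float:
    # Daily trades, measured in units of the typical position size
    daily_trades = (position / normalising_position).diff().abs()
    return float(daily_trades.mean() * BUSINESS_DAYS_IN_YEAR)

# Toy example: a rounded position that opens and then closes one contract
index = pd.bdate_range("2021-01-04", periods=5)
rounded_position = pd.Series([0.0, 1.0, 1.0, 0.0, 0.0], index=index)

example_turnover = annualised_turnover(rounded_position, normalising_position=0.5)
print(example_turnover)
```

Normalising both the rounded and the optimised series by the same average unrounded position, as in the loop above, is what makes the two turnover numbers comparable.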

Risk targeting

Now let's see how well the optimisation targets risk. We know from my earlier posts that risk varies in this kind of system for a couple of reasons: because forecasts are varying in strength (which is good!) and because of this rather ugly thing called the "relative correlation factor", which exists because the vanilla trading system doesn't use current instrument return correlations or account for current positions in determining risk scaling. However the new optimisation code will do this.

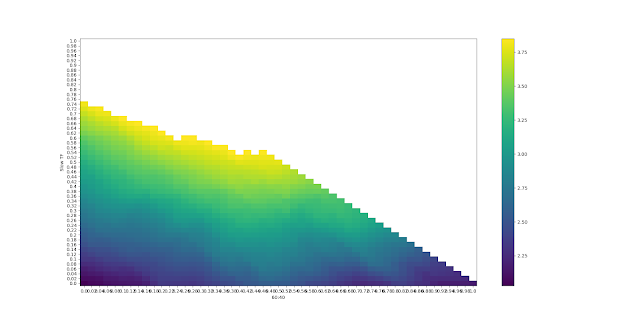

Let's plot the expected risk of our portfolio, with the original positions, rounded & buffered, and optimised.

x = system.risk.get_portfolio_risk_for_original_positions()

y = system.risk.get_portfolio_risk_for_original_positions_rounded_buffered()

z = system.risk.get_portfolio_risk_for_optimised_positions()

all_risk = pd.concat([x,y,z], axis=1)

all_risk.columns = ['original', 'rounded', 'optimised']

all_risk.plot()

That's quite noisy and hard to see. But in terms of summary statistics, the median value of expected risk is 20.9% in the original system, 14% in the rounded system, and 18.6% in the optimised system. The rounding means we sometimes have zero risk - as you can see from the orange line frequently hitting zero - which results in a structural undershooting.

Let's zoom into the more recent times:

You can see that the rounded positions are struggling to achieve very much. The optimised positions are doing better, and also are showing a better correlation with the original expected risk.

(The original required risk is much smoother, since it can continuously adjust its volatility every day; hence the only sources of variation are relatively slow forecasts and changes in correlations.)

Performance

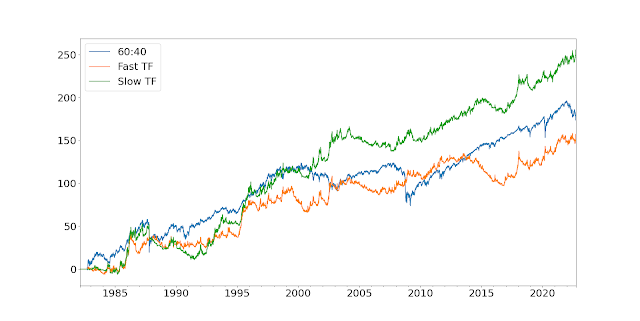

As regular readers know I don't place as much emphasis on using backtested performance as many other quant traders do. I like to look at performance last, to avoid any temptation to do some implicit fitting. Still, let's wheel out some account curves.

That doesn't look great, but bear in mind that the rounded portfolio has lower risk and things are even worse in terms of Sharpe Ratios: Original over 1.0, Rounded around 0.72, optimised just 0.56.

Ouch. Now this could be just bad luck, and down to the combination of instruments and cash I happen to be using. Really I should try this with a more serious test, such as the 40 odd instruments I currently trade and my current trading capital (~$500K).

But the fact is it takes a long time to backtest this thing. I've nearly killed a few computers trying to test 20 instruments with $100,000 in capital. And the more capital and the more instruments you have, the more possible points on the grid, and the slower the whole thing runs.

Conclusion

This was a cool idea! And I enjoyed writing the code, and learning a few things about doing more efficient grid searches in Python.

But it doesn't seem to add any value compared to the much simpler approach of just trading everything and rounding the positions. And for such a hugely complex additional process, it needed to add significant value to make it worth doing.

In the next post I'll try another approach: using a formal 'static' optimisation to select the best group of instruments to trade for a given amount of capital.