Do you remember this post? https://qoppac.blogspot.com/2022/06/vol-targeting-cagr-race.html

Here I introduced a performance metric: the best annualised compounding return at the optimal leverage level for that strategy. This is equivalent to finding the highest geometric return once a strategy is run at its Kelly optimal leverage.

I've since played with that idea a bit. For example, in this more recent post (amongst others) I considered the implications if we have different tolerances for uncertainty, using bootstrapped returns to optimise a stock and bond portfolio with leverage, whilst this post from last month does the same exercise for a bitcoin/equity portfolio without leverage.

In this post I return to the idea of focusing on this performance metric, the maximised geometric mean at optimal leverage, but now I'm going to take a more abstract view to try and get a feel for what sort of strategies are likely to be favoured in general when we use it. In particular I'd like to return to the theme of the original post, which is the effect that skew and other return moments have on the maximum achievable geometric mean.

Obvious implications here are in comparing different types of strategies, such as trend following versus convergent strategies like relative value or option selling; or even 'classic' trend following versus other kinds.

Some high school maths

(Note I use 'high school' in honor of my US readers, and 'maths' for my British fans)

To kick off let's make the very heroic assumption of Gaussian returns, and assume we're working at the 'maximum Kelly' point, which means we want to maximise the median of our final wealth distribution (the same as aiming for maximum geometric return) and are indifferent to how much risk such a portfolio might generate.

Let's start with the easy case where we assume the risk free rate is zero; which also implies we pay no interest for borrowing (apologies I've just copied and pasted in great gobs of LaTeX output since it's easier than inserting each formula manually):
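A sketch of the derivation, assuming the standard approximation that the geometric mean is the arithmetic mean less half the variance. The symbols (mean return \mu, standard deviation \sigma, leverage L, geometric mean g) are my own notation for this sketch and may not match the original LaTeX:

```latex
\begin{align*}
g(L) &\approx L\mu - \tfrac{1}{2}L^{2}\sigma^{2} \\
\frac{dg}{dL} &= \mu - L\sigma^{2} = 0
  \quad\Rightarrow\quad L^{*} = \frac{\mu}{\sigma^{2}} = \frac{SR}{\sigma} \\
g(L^{*}) &\approx \frac{\mu^{2}}{\sigma^{2}} - \frac{1}{2}\,\frac{\mu^{2}}{\sigma^{2}}
  = \tfrac{1}{2}\,SR^{2},
  \qquad SR = \frac{\mu}{\sigma}
\end{align*}
```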

Now that is a very nice intuitive result!

Trivially then if we can use as much leverage as we like, and we are happy to run at full Kelly, and our returns are Gaussian, then we should choose the strategy with the highest Sharpe Ratio. What's more if we can double our Sharpe Ratio, we will quadruple our Geometric mean!

Truly the Sharpe Ratio is the one ratio to rule them all!

Now let's throw in the risk free rate:
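Again as a sketch under the same mean-minus-half-variance approximation, and assuming we earn or pay the risk free rate r_f on cash and borrowing (notation \mu, \sigma, L, g, r_f is mine):

```latex
\begin{align*}
g(L) &\approx r_{f} + L(\mu - r_{f}) - \tfrac{1}{2}L^{2}\sigma^{2} \\
\frac{dg}{dL} &= (\mu - r_{f}) - L\sigma^{2} = 0
  \quad\Rightarrow\quad L^{*} = \frac{\mu - r_{f}}{\sigma^{2}} = \frac{SR}{\sigma} \\
g(L^{*}) &\approx \tfrac{1}{2}\,SR^{2} + r_{f},
  \qquad SR = \frac{\mu - r_{f}}{\sigma}
\end{align*}
```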

We have the original term from before (although the SR now deducts the risk free rate), plus the risk free rate itself: because the SR is measured on excess returns, we add the risk free rate back on to get our total return. Note that the higher SR = higher geometric mean at optimal leverage relationship is still true. Even with a positive risk free rate we still want to choose the strategy with the highest Sharpe Ratio!

Consider for example a classic CTA type strategy with 10% annualised return and 20% standard deviation, a miserly SR of 0.5 with no risk free rate; and contrast with a relative value fixed income strategy that earns 6.5% annualised return with 6% standard deviation, a SR of 1.0833.

Now if the risk free rate is zero we would prefer the second strategy as it has a higher SR, and indeed should return a much higher geometric mean (since the SR is more than double, it should be over four times higher). Let's check. The optimal leverages are 2.5 times and 18.1 (!) times respectively. At those leverages the arithmetic means are 25% and 117% respectively, and the geometric means using the approximation are 12.5% for the CTA and 58.7% for the RV strategy.

But what if the risk free rate was 5%? Our Sharpe ratios are now equal: both are 0.25. The optimal leverages are also lower, 1.25 and 4.17. The arithmetic means come in at 12.5% and 27.1%, with geometric means of 9.4% and 24%. However we have to include the cost of interest, which is just 1.25% for the CTA strategy (borrowing just a quarter of its capital at a cost of 5%, remember) but a massive 15.8% for the RV. Factoring those in, the net geometric means drop to 8.125% for both strategies. We should be indifferent between them, which makes sense as they have equal SR.
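The arithmetic in the CTA versus RV example can be checked with a few lines of Python. This is a minimal sketch under the Gaussian approximation used above; the function name `kelly_summary` and its return keys are made up for illustration.

```python
def kelly_summary(mean, stdev, risk_free=0.0):
    """Optimal leverage and geometric mean under the Gaussian approximation.

    All inputs are annualised and expressed as fractions (0.10 means 10%).
    """
    excess = mean - risk_free
    sharpe = excess / stdev
    leverage = excess / stdev**2                 # Kelly optimal leverage
    arithmetic = leverage * mean                 # gross arithmetic mean at that leverage
    gross_geometric = arithmetic - 0.5 * (leverage * stdev) ** 2
    # Interest paid on borrowed capital (negative, i.e. earned, if leverage < 1)
    interest = (leverage - 1.0) * risk_free
    return {
        "sharpe": sharpe,
        "leverage": leverage,
        "arithmetic": arithmetic,
        "gross_geometric": gross_geometric,
        "interest": interest,
        "net_geometric": gross_geometric - interest,
    }


strategies = {"CTA": (0.10, 0.20), "RV": (0.065, 0.06)}

for risk_free in (0.0, 0.05):
    for name, (mean, stdev) in strategies.items():
        s = kelly_summary(mean, stdev, risk_free)
        print(
            f"rf={risk_free:.0%} {name}: SR={s['sharpe']:.3f} "
            f"leverage={s['leverage']:.2f} "
            f"net geometric mean={s['net_geometric']:.3%}"
        )
```

The net geometric mean always collapses to 0.5SR^2 plus the risk free rate, which is why the two strategies tie once their Sharpe Ratios are equal.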

The horror of higher moments

Now there is a lot wrong with this analysis. We'll put aside the uncertainty around being able to measure exactly what the Sharpe Ratio of a strategy is likely to be (which I can deal with by drawing off more conservative points of the return distribution, as I have done in several previous posts), and the assumption that returns will be the same in the future. But that still leaves us with the big problem that returns are not Gaussian! In particular a CTA strategy is likely to have positive skew, whilst an RV variant is more likely to be a negatively skewed beast, both with fat tails in excess of what a Gaussian model would deliver. In truth in the stylised example above I'd much prefer to run the CTA strategy rather than a quadruple leveraged RV strategy with horrible left tail properties.

Strategies with big negative skew tend to have worse one day losses; or crap VAR if you prefer that particular measure. The downside of using high leverage is that a large one day loss gets multiplied by that leverage, which will significantly reduce our geometric mean.

There are known ways of modifying the geometric mean calculation to deal with higher moments like skew and kurtosis. But my aim in this post is to keep things simple; and I'd also rather not use the actual estimate of kurtosis from historic data since it has large sampling error and may underestimate the true horror of a bad day that can happen in the future (the so called 'peso problem'). I also don't find the figures for kurtosis particularly intuitive.

Instead let's turn to the tail ratio, which I defined in my latest book AFTS. A lower tail ratio of 1.0 means that the left tail is Gaussian in size, whilst a higher ratio implies a fatter left tail.
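As a rough illustration of what such a measure looks like in code, here is a sketch that assumes the lower tail ratio is the ratio of the 1st to the 30th percentile of demeaned returns, divided by the same ratio for a Gaussian. Those percentile choices are my assumption and should be checked against the definition in AFTS; the function name is hypothetical.

```python
from statistics import NormalDist

import numpy as np


def lower_tail_ratio(returns, extreme_pct=1.0, central_pct=30.0):
    """Relative size of the left tail; 1.0 means Gaussian sized.

    Assumption: ratio of two lower percentiles of the demeaned returns,
    normalised by the equivalent ratio for a normal distribution.
    """
    demeaned = np.asarray(returns, dtype=float) - np.mean(returns)
    empirical = np.percentile(demeaned, extreme_pct) / np.percentile(
        demeaned, central_pct
    )
    gaussian = NormalDist().inv_cdf(extreme_pct / 100.0) / NormalDist().inv_cdf(
        central_pct / 100.0
    )
    return empirical / gaussian


rng = np.random.default_rng(0)
gaussian_sample = rng.normal(0.0, 0.01, size=200_000)
fat_tailed_sample = rng.standard_t(3, size=200_000)

print(lower_tail_ratio(gaussian_sample))    # close to 1 for Gaussian data
print(lower_tail_ratio(fat_tailed_sample))  # above 1 for fat tails
```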

I'm going to struggle to rewrite the relatively simple 0.5SR^2 formula to include a skew and left tail term, which in any case would require me to make some distributional assumptions. Instead I'm going to use some bootstrapping to generate some distributions, measure the tail properties, find the optimal leverage, and then work out the geometric return at the optimal leverage point. We can then plot maximal geometric means against empirical tail ratios to get a feel for what sort of effect these have.
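The "find the optimal leverage" step can be done numerically on any return series, without distributional assumptions. The sketch below uses a simple grid search over leverage and the annualised mean log return as a stand-in for the geometric mean. The function names, the grid bounds and the 256 business days per year are my assumptions rather than anything taken from the linked code.

```python
import numpy as np


def geometric_mean_at_leverage(daily_returns, leverage, periods_per_year=256):
    """Annualised mean log return at a given leverage (-inf if wiped out)."""
    levered = 1.0 + leverage * np.asarray(daily_returns, dtype=float)
    if np.any(levered <= 0.0):
        return -np.inf  # a single day loses everything at this leverage
    return periods_per_year * np.mean(np.log(levered))


def optimal_leverage(daily_returns, max_leverage=20.0, step=0.05):
    """Grid search for the leverage with the highest geometric mean."""
    grid = np.arange(0.0, max_leverage + step, step)
    geo_means = np.array(
        [geometric_mean_at_leverage(daily_returns, lev) for lev in grid]
    )
    best = int(np.argmax(geo_means))
    return grid[best], geo_means[best]


rng = np.random.default_rng(42)
sample = rng.normal(0.0004, 0.01, size=2500)  # Gaussian, for illustration only
leverage, geo_mean = optimal_leverage(sample)
print(f"optimal leverage {leverage:.2f}, geometric mean {geo_mean:.2%}")
```

A grid search is crude but robust: the objective becomes minus infinity once leverage is high enough for the worst day to wipe out the account, which can upset gradient based optimisers.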

Setup

To generate distributions with different tail properties I will use a mixture of two Gaussian distributions; including one tail distribution with a different mean and standard deviation* which we draw from with some probability<0.5. It will then be straightforward to adjust the first two moments of the sample distribution of returns to equalise Sharpe Ratio so we are comparing like with like.

* you will recognise this as the 'normal/bliss' regime approach used in the paper I discussed in my prior post around optimal crypto allocation, although of course it will only be bliss if the tail is nicer which won't be the case half the time.

As a starting point then my main return distribution will have daily standard deviation 1% and mean 0.04% which gives me an annualised SR of 0.64, and will be 2500 observations (about 10 years) in length - running with different numbers won't affect things very much. For each sample I will draw the probability of a tail distribution from a uniform distribution between 1% and 20%, and the tail distribution daily mean from uniform [-5%, 5%], and for the tail standard deviation I will use 3% (three times the normal).

All this is just to give me a series of different return distributions with varying skew and tail properties. I can then ex-post adjust the first two moments so I'm hitting them dead on, so the mean, standard deviation and SR are identical for all my sample runs. The target standard deviation is 16% a year, and the target SR is 0.64, all of which means that if the returns are Gaussian we'd get an optimal leverage of 4.0 times (0.64 divided by 0.16).
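A minimal sketch of this setup, using the parameters described above. It is my own illustration and not the code linked from the post; the function names and the use of 256 business days a year for annualisation are assumptions.

```python
import numpy as np


def mixture_returns(rng, n_obs=2500, main_mean=0.0004, main_std=0.01,
                    tail_std=0.03):
    """Daily returns drawn from a mixture of a main and a tail Gaussian."""
    tail_prob = rng.uniform(0.01, 0.20)    # chance of drawing from the tail
    tail_mean = rng.uniform(-0.05, 0.05)   # tail can be nasty or nice
    from_tail = rng.random(n_obs) < tail_prob
    main = rng.normal(main_mean, main_std, n_obs)
    tail = rng.normal(tail_mean, tail_std, n_obs)
    return np.where(from_tail, tail, main)


def match_first_two_moments(returns, target_mean=0.0004, target_std=0.01):
    """Linearly rescale so the sample mean and standard deviation hit targets."""
    standardised = (returns - returns.mean()) / returns.std()
    return standardised * target_std + target_mean


def skew(returns):
    """Sample skew: third moment of the standardised returns."""
    standardised = (returns - returns.mean()) / returns.std()
    return np.mean(standardised**3)


rng = np.random.default_rng(7)
raw = mixture_returns(rng)
adjusted = match_first_two_moments(raw)

annual_sr = (adjusted.mean() * 256) / (adjusted.std() * 16)
print(f"annualised SR {annual_sr:.2f}, skew {skew(adjusted):.2f}")
```

Because the adjustment is a linear rescaling, it leaves skew and the relative shape of the tails unchanged, so every sample has an identical SR but different higher moments.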

As always with these things it's probably easier to look at code, which is here (just vanilla python; the only requirements are pandas/numpy).

Results

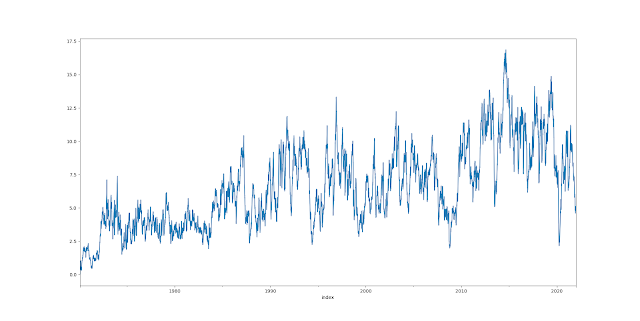

Let's start with looking at optimal leverage. Firstly, how does this vary with skew?
