Regular listeners to the podcast I occasionally co-host will know that I enjoy some light-hearted banter with some of my fellow podcasters, many of whom describe themselves as 'pure' trend followers, whilst I am an apostate who deserves to be cast into the outer darkness. My (main) sin? The use of 'vol targeting', an evil methodology not to be found in the original texts of trend following, or even in the apocrypha, and thus making me unworthy. In brief, vol targeting involves adjusting the size of a trade if volatility changes during the period that you are holding it. A real trend follower would maintain the position in the original size.

(But we agree that the initial position should be sized according to the risk when the trade is entered into)

I've briefly discussed this subject before, but I thought it might be worthwhile to have another look. In particular, the pure trendies generally object to my use of Sharpe Ratios (SR) as a method for evaluating trading performance. And you know what - they are right. It doesn't make much sense to use SR when two or more trading strategies have different return distributions. And it's well known that purer trend following has a more positive skew than the vol targeted alternative, although that effect isn't as large when you look at returns as you might expect. Also, for what it's worth, purer trend following has fatter tails, both left and right.

The reason I use Sharpe Ratio of course is that it's a risk adjusted return, which makes it invariant to the amount of leverage. So if we're not going to use risk adjusted returns, then what? I'm going to do the following: pick the strategy with the best annualised compounding return at the optimal leverage level for that strategy. Because positively skewed strategies can have higher leverage than those with less skew (see my first book Systematic Trading for some evidence), this will flatter the purer form of trend following.

** EDIT 29th June - added 3 slower strategies and turnover statistics **

The systems

What I'm trying to do here is to isolate the effect of vol targeting by making the trading systems as close as possible to each other.

Each of the alternatives will use a single kind of moving average crossover trading rule(s), but the way they are used will be rather different:

- 1E1S 'One entry, one stop' (binary, no vol targeting). We enter a trade with a full sized fixed position when the crossover goes long or short, and we exit when we hit a trailing stop loss. We reenter the trade when the crossover has changed signs. The size of the stop loss is calibrated to match the turnover of the entry rule (see here and here). The stop loss gap remains fixed throughout the life of the trade, as does the position size. This is basically the starter system in chapter six of Leveraged Trading.

- 1E1E 'One entry, one exit' (binary, no vol targeting). We enter a trade with a full sized fixed position when the crossover goes long or short, and we exit when the crossover has changed signs, immediately opening a new position in the opposite direction. The position size remains unchanged. This is the system in chapter nine of Leveraged Trading.

- BV 'Binary with vol targeting' (binary, vol targeting). We enter a trade with a full sized position when the crossover goes long or short, and we exit when the crossover has changed signs. Whilst holding the trade we adjust our positions according to changes in volatility, using a buffer to reduce trading costs.

- FV 'Forecasts and vol targeting' (continuous, vol targeting). We hold a position that is proportional to a forecast of risk adjusted returns (the size of the crossover divided by volatility), and is adjusted for current volatility, using a buffer to reduce trading costs. This is my standard trading model, which is in the final part of Leveraged Trading and also in Systematic Trading.

I've included both FV and BV to demonstrate that any difference isn't purely down to the use of forecasting, but is because of vol targeting. Similarly, I've included both 1E1E and 1E1S to show that it's the vol targeting that's creating any differences, not just the use of a particular exit methodology.

If you forget which is which then the things with numbers next to them are not vol targeted (1E1E, 1E1S), and the pure letter acronyms which include the letter V are vol targeted (FV, BV).
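To make the difference between 1E1E and BV concrete, here is a minimal sketch of how a binary vol targeted position might be resized while a trade is open. The function names, the 10% buffer width, the rule of trading to the edge of the buffer, and the per contract volatility figures are all assumptions for illustration, not the exact rules used in the backtest.

```python
def optimal_contracts(direction, capital, risk_target, contract_vol):
    """Contracts needed so the position's annualised cash vol hits the risk target.

    direction: +1 long, -1 short (the binary crossover signal)
    contract_vol: annualised cash volatility of one contract (hypothetical input)
    """
    return direction * capital * risk_target / contract_vol


def buffered_position(current, optimal, buffer_fraction=0.10):
    """Trade only when the current position leaves a band around the optimum.

    The 10% band, and trading to the edge of the band, are assumptions.
    """
    width = abs(optimal) * buffer_fraction
    lower, upper = optimal - width, optimal + width
    if current < lower:
        return round(lower)
    if current > upper:
        return round(upper)
    return current


capital, risk_target = 1_000_000, 0.20

# Position opened when the crossover goes long
held = round(optimal_contracts(+1, capital, risk_target, 10_000))

# Vol rises a little: the optimum moves, but we stay inside the buffer
small_move = buffered_position(held, optimal_contracts(+1, capital, risk_target, 10_500))

# Vol rises a lot: we cut the position back to the edge of the buffer
big_move = buffered_position(held, optimal_contracts(+1, capital, risk_target, 12_500))

print(held, small_move, big_move)
```

In this sketch a 1E1E trader would simply keep holding the opening position in all three cases.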

It's probably easier to visualise the above with a picture; here's a plot of the position held in S&P 500 using each of the different methods, all based on the EWMAC64,256 opening rule.

Notice how the 1E1S and 1E1E strategies hold their position in lots fixed in between trades; they don't match exactly since they are using different closing rules, eg in October 2021 the 1E1E goes short because the forecast switches sign, but the 1E1S remains long as the stop hasn't been hit.

The binary forecast (red BV) holds a steady long or short in risk terms, but adjusts this according to vol. Finally the continuous forecast (green FV) starts off with a small position which is then built up as the trend continues, and cut when the trend fades.

I will do this exercise for the following EWMAC trading rules: EWMAC8,32; EWMAC16,64; EWMAC32,128; EWMAC64,256. I'm excluding the fastest two rules I trade, since they can't be traded by a lot of instruments due to excessive costs. The skew is higher the faster you trade, so this will give us some interesting results. If I refer to EWMACN, that's my shorthand for the rule EWMACN,4N.
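For readers who haven't met the EWMACN,4N shorthand, the raw crossover can be sketched as below. This is a stdlib only illustration: the smoothing constant of 2/(span+1) is an assumed convention, the price series are made up, and the division by volatility that turns the crossover into a forecast is left out.

```python
def ewma(values, span):
    """Exponentially weighted moving average; alpha = 2 / (span + 1) is an assumed convention."""
    alpha = 2.0 / (span + 1)
    smoothed, level = [], values[0]
    for value in values:
        level = alpha * value + (1 - alpha) * level
        smoothed.append(level)
    return smoothed


def ewmac(prices, fast_span):
    """Raw crossover for EWMACN,4N: fast EWMA minus slow EWMA."""
    slow_span = 4 * fast_span
    fast, slow = ewma(prices, fast_span), ewma(prices, slow_span)
    return [f - s for f, s in zip(fast, slow)]


def binary_signal(crossover_value):
    """+1 when the crossover is positive, -1 when negative, 0 when flat."""
    return (crossover_value > 0) - (crossover_value < 0)


# Made up price paths, purely for illustration
uptrend = [100 + 0.5 * day for day in range(300)]
downtrend = [250 - 0.5 * day for day in range(300)]

print(binary_signal(ewmac(uptrend, 16)[-1]), binary_signal(ewmac(downtrend, 16)[-1]))
```

The binary systems (1E1S, 1E1E, BV) only care about the sign of the crossover; FV uses its size as well.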

I will also look at the joint performance of an equally weighted system with all of the above (EWMACX). In the case of the joint performance, I equally weight the individual forecasts, before forming a combined forecast and then follow the rules above.

For my set of instruments, I will use the Jumbo portfolio. This is discussed in my new book (still being written), but it's a set of 100 futures markets which I've chosen to be liquid and not too expensive to trade. For instrument weights, I use the handcrafted weights for my own trading system, excluding instruments that I have in my system which aren't in the Jumbo portfolio. The results won't be significantly affected by using a different set of instruments or instrument weights.

Notional capital is $100 million; this is to avoid any rounding errors on positions making a big difference; for example if capital was too small then BV would look an awful lot like 1E1E.
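A toy illustration of the rounding point, with made up numbers: the capital figures, risk target and per contract volatility are hypothetical, and a real portfolio would split capital across many instruments.

```python
def rounded_contracts(capital, risk_target, contract_vol):
    """Whole number of contracts for a given annualised cash vol per contract."""
    return round(capital * risk_target / contract_vol)


for capital in (100_000, 100_000_000):
    before = rounded_contracts(capital, 0.20, 10_000)
    after = rounded_contracts(capital, 0.20, 11_000)  # volatility rises by 10%
    print(capital, before, after)
```

With the small account the position rounds to the same number of contracts before and after the rise in volatility, so the vol targeted position never changes; with the large account the adjustment is visible.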

Basic statistics

Let's begin with some basic statistics; first realised standard deviation:

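| Rule | FV | BV | 1E1S | 1E1E |
|---|---|---|---|---|
| ewmac4 | 23.2 | 17.0 | 14.3 | 18.4 |
| ewmac8 | 23.3 | 16.8 | 13.9 | 19.2 |
| ewmac16 | 23.4 | 16.8 | 13.8 | 22.8 |
| ewmac32 | 23.3 | 16.8 | 13.0 | 26.8 |
| ewmac64 | 23.0 | 16.8 | 13.5 | 30.8 |
| ewmacx | 21.5 | 16.8 | 13.2 | 23.1 |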

I ran all these systems with a 20% standard deviation target. However, because that kind of target only makes sense with the 'FV' type of strategy (which ends up slightly overshooting, as it happens), the others are a bit all over the shop. Already we can see it's going to be unfair to compare these different strategy variations.

Next, the mean annual return:

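| Rule | FV | BV | 1E1S | 1E1E |
|---|---|---|---|---|
| ewmac4 | 20.2 | 13.8 | 11.9 | 14.3 |
| ewmac8 | 24.8 | 17.0 | 14.1 | 17.5 |
| ewmac16 | 26.0 | 18.5 | 11.9 | 20.4 |
| ewmac32 | 25.4 | 18.8 | 9.3 | 22.4 |
| ewmac64 | 23.2 | 16.7 | 10.3 | 19.3 |
| ewmacx | 25.3 | 19.4 | 11.6 | 20.3 |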

'FV' looks better than the alternatives, but again it also has the highest standard deviation so that isn't a huge surprise. To correct for that - although we know it's not ideal - let's look at risk adjusted returns using the much maligned Sharpe Ratio, with zero risk free rate (which as futures traders is appropriate):

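| Rule | FV | BV | 1E1S | 1E1E |
|---|---|---|---|---|
| ewmac4 | 0.87 | 0.81 | 0.83 | 0.78 |
| ewmac8 | 1.06 | 1.01 | 1.01 | 0.91 |
| ewmac16 | 1.11 | 1.10 | 0.86 | 0.89 |
| ewmac32 | 1.09 | 1.12 | 0.72 | 0.83 |
| ewmac64 | 1.00 | 0.99 | 0.76 | 0.62 |
| ewmacx | 1.18 | 1.15 | 0.88 | 0.88 |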

FV is a little better than BV especially for faster opening rules, but both are superior to the non vol targeted alternatives. Notice that as we speed up, the improvement generated by vol targeting shrinks. This makes sense as if you're holding a position for only a week or so then it won't make much difference if you adjust that position for volatility over such a short period of time.

(In the limit: a holding period of a single day with daily trading, both BV and 1E1E would be identical.)
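As a quick check on the arithmetic, the Sharpe Ratios for the combined system (ewmacx) can be recovered from the mean and standard deviation figures already shown, using a zero risk free rate. The dictionary layout and function name are just for this sketch.

```python
# ewmacx figures from the tables: (annual mean %, annual standard deviation %)
ewmacx = {
    "FV": (25.3, 21.5),
    "BV": (19.4, 16.8),
    "1E1S": (11.6, 13.2),
    "1E1E": (20.3, 23.1),
}


def sharpe_ratio(annual_mean, annual_stdev, risk_free=0.0):
    """Excess annual mean return divided by annual standard deviation."""
    return (annual_mean - risk_free) / annual_stdev


sharpes = {style: round(sharpe_ratio(mean, stdev), 2)
           for style, (mean, stdev) in ewmacx.items()}
print(sharpes)
```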

What about costs?

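| Rule | FV | BV | 1E1S | 1E1E |
|---|---|---|---|---|
| ewmac4 | -3.4 | -2.6 | -1.3 | -2.5 |
| ewmac8 | -1.9 | -1.6 | -0.6 | -1.4 |
| ewmac16 | -1.2 | -1.0 | -0.5 | -0.9 |
| ewmac32 | -0.9 | -0.7 | -0.4 | -0.6 |
| ewmac64 | -0.8 | -0.6 | -0.3 | -0.5 |
| ewmacx | -1.1 | -1.0 | -0.4 | -0.9 |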

Obviously FV and BV are a little more expensive, because they trade more often; and of course faster trading rules are always more expensive regardless of what you do with them.

How 'bout monthly skew?

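| Rule | FV | BV | 1E1S | 1E1E |
|---|---|---|---|---|
| ewmac4 | 2.14 | 1.27 | 1.54 | 1.67 |
| ewmac8 | 1.72 | 0.86 | 2.14 | 1.00 |
| ewmac16 | 1.26 | 0.70 | 1.84 | 1.00 |
| ewmac32 | 0.88 | 0.62 | 0.87 | -0.73 |
| ewmac64 | 0.71 | 0.47 | 0.87 | -3.21 |
| ewmacx | 1.41 | 0.78 | 1.98 | 0.47 |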

We already know from my previous research that return skew is a little better when you drop vol targeting, and that's certainly true of 1E1S. Something weird is going on with 1E1E; it turns out to be a couple of rogue days, and is an excellent example of why skew isn't a very robust statistic as it is badly affected by outliers.
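To see how fragile skew is, here is a small sketch with made up returns: a perfectly symmetric sample, and the same sample with one rogue observation added. The skew function is the plain moment based definition, which may differ in detail from the estimator used for the table.

```python
def skew(returns):
    """Moment based skew: third central moment divided by variance to the power 1.5."""
    n = len(returns)
    mean = sum(returns) / n
    variance = sum((r - mean) ** 2 for r in returns) / n
    third_moment = sum((r - mean) ** 3 for r in returns) / n
    return third_moment / variance ** 1.5


ordinary = [-1.0, 0.0, 1.0] * 40        # symmetric, so skew is zero
with_rogue_day = ordinary + [-30.0]     # one outlier out of 121 observations

print(skew(ordinary), skew(with_rogue_day))
```

A single observation is enough to drag the statistic from zero to a large negative number.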

Now consider the lower tail ratio. This is a statistic that I recently invented as an alternative to skew. It's more fully explained in my new book (coming out early 2023), but for now a figure of 1 means that the 5% left tail of the distribution is Gaussian. A higher number means the left tail is fatter than Gaussian, and the higher the number the less Gaussian it is. So lower is good.

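| Rule | FV | BV | 1E1S | 1E1E |
|---|---|---|---|---|
| ewmac4 | 1.79 | 1.46 | 1.50 | 1.58 |
| ewmac8 | 1.80 | 1.45 | 1.54 | 1.63 |
| ewmac16 | 1.83 | 1.46 | 1.60 | 1.97 |
| ewmac32 | 1.82 | 1.42 | 1.70 | 2.40 |
| ewmac64 | 1.81 | 1.49 | 1.83 | 2.71 |
| ewmacx | 1.95 | 1.47 | 1.62 | 2.01 |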

Again, 1E1S generally has a nicer left tail than FV, although interestingly BV is better than anything and 1E1E is worse, suggesting this might not be a vol targeting story.

Max drawdown anyone?

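| Rule | FV | BV | 1E1S | 1E1E |
|---|---|---|---|---|
| ewmac4 | -64.2 | -47.6 | -31.4 | -49.2 |
| ewmac8 | -45.7 | -40.5 | -33.5 | -46.0 |
| ewmac16 | -41.6 | -27.8 | -23.0 | -122.0 |
| ewmac32 | -75.6 | -44.5 | -38.8 | -191.7 |
| ewmac64 | -85.8 | -48.7 | -44.7 | -297.9 |
| ewmacx | -42.6 | -36.1 | -35.6 | -115.6 |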

Note that a max drawdown of over 100% is possible because these are non compounded returns. With compounded returns the numbers would be smaller, but the relative figures would be the same.
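A small sketch of why non compounded drawdowns can exceed 100%, using a made up sequence of percentage returns. The two functions are illustrative only: one sums returns, the other compounds them.

```python
def drawdown_additive(returns_pct):
    """Worst peak to trough fall of the cumulative SUM of percentage returns."""
    total = peak = worst = 0.0
    for r in returns_pct:
        total += r
        peak = max(peak, total)
        worst = min(worst, total - peak)
    return worst


def drawdown_compounded(returns_pct):
    """Worst peak to trough fall of compounded wealth, as a percentage of the peak."""
    wealth = peak = 1.0
    worst = 0.0
    for r in returns_pct:
        wealth *= 1.0 + r / 100.0
        peak = max(peak, wealth)
        worst = min(worst, wealth / peak - 1.0)
    return 100.0 * worst


returns_pct = [50, 50, 50, -40, -40, -40]   # made up figures
print(drawdown_additive(returns_pct), drawdown_compounded(returns_pct))
```

Summing the three losses gives a fall of 120 percentage points from the peak, whereas the compounded account can never lose more than 100% of its peak value.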

And finally, the figure we're focused on, the CAGR:

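| Rule | FV | BV | 1E1S | 1E1E |
|---|---|---|---|---|
| ewmac4 | 19.1 | 13.2 | 11.5 | 13.5 |
| ewmac8 | 24.7 | 16.9 | 14.0 | 17.0 |
| ewmac16 | 26.2 | 18.7 | 11.5 | 19.5 |
| ewmac32 | 25.5 | 19.0 | 8.8 | 20.6 |
| ewmac64 | 22.8 | 16.5 | 9.8 | 15.6 |
| ewmacx | 25.8 | 19.7 | 11.3 | 19.3 |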

The effect of relative leverage

On the face of it then we should go with the FV system, which handily is what I trade myself. The BV system isn't quite as good, but it is better than either of the non vol targeted systems. For pretty much every statistic we have looked at, with the exception of costs and skew, there is no reason you'd go for either 1E1S or 1E1E over the vol targeted alternatives.

But it is hard to compare these strategies with any single statistic. They have different characteristics. Different distributions of returns. Different standard deviations. Different skews. Different tails.

How can we try and do a fairer comparison? Well, we can very easily run these strategies with different relative leverage. This is a futures portfolio, so we are already using some degree of leverage, but within reason we can choose to multiply all our positions by some number N which will result in higher or lower leverage than we started with. Doing this will change most of the statistics above.

Let's think about how the statistics above change with changes to leverage. First of all, the dull ones:

- Sharpe ratio: Invariant to leverage

- Skew: Invariant to leverage

- Left tail ratio: Invariant to leverage

Then the easy ones:

- Annual mean: Linearly increases with leverage

- Annual standard deviation: Linearly increases with leverage

- Costs: Linearly increases with leverage

- Drawdown: Linearly increases with leverage

OK, so applying leverage won't change the relative rankings of those statistics, so let's consider the one that does:

- CAGR: Non linear; will increase with more leverage, then peak and start to fall.

WTF? Time for some theory.

Interlude: Leverage and CAGR and Kelly

CAGR, also known as annualised geometric returns, behaves differently to other kinds of statistics, and thus is sensitive to the distribution of returns. Arithmetic mean will scale with leverage*, as will standard deviation, which means that Sharpe Ratios are invariant. But geometric returns don't do that. They increase with leverage, but in a non linear way. And at some point, adding leverage actually reduces geometric returns. There is an optimal amount of relative leverage where we will maximise CAGR. Alternatively, this can be expressed as an optimal risk target, measured as an annualised standard deviation of returns.

* Strictly speaking it's excess mean returns that scale with leverage, but with futures we can assume the risk free rate is zero
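The behaviour described above can be sketched with a toy return series: the Sharpe Ratio is unchanged by leverage, while the geometric return rises, peaks and then falls. The returns are made up (alternating +2% and -1% periods), the figures are per period rather than annualised, and a wipe out is reported here as -100% rather than zero.

```python
import math


def sharpe(returns, leverage=1.0):
    """Per period mean over standard deviation of the leveraged returns."""
    levered = [leverage * r for r in returns]
    mean = sum(levered) / len(levered)
    variance = sum((r - mean) ** 2 for r in levered) / len(levered)
    return mean / math.sqrt(variance)


def geometric_return(returns, leverage=1.0):
    """Per period geometric mean return at a given relative leverage."""
    log_growth = 0.0
    for r in returns:
        growth = 1.0 + leverage * r
        if growth <= 0.0:
            return -1.0  # the account is wiped out in this period
        log_growth += math.log(growth)
    return math.exp(log_growth / len(returns)) - 1.0


returns = [0.02, -0.01] * 50          # made up return series
grid = range(1, 100)                  # candidate relative leverage levels
best = max(grid, key=lambda lev: geometric_return(returns, lev))

print(best, geometric_return(returns, best))
print(sharpe(returns, 1), sharpe(returns, best))
```

A simple grid search like this is enough to locate the peak of the curve for any return series; nothing here depends on the returns being Gaussian.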

If your returns are Gaussian, then the optimal annualised standard deviation will (neatly!) be equal to the Sharpe Ratio. This is another way of stating the famous Kelly criterion (discussed in several blog posts, including here, and also in all my books).
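One way to see where this result comes from is to use the standard approximation that the geometric mean is roughly the arithmetic mean minus half the variance. The symbols here (L for relative leverage, m for arithmetic mean, s for standard deviation, g for geometric mean) are notation chosen for this sketch, and the result only holds under the Gaussian assumption:

```latex
\begin{align*}
g(L) &\approx L m - \tfrac{1}{2} L^2 s^2 \\
\frac{dg}{dL} &= m - L s^2 = 0 \quad\Rightarrow\quad L^* = \frac{m}{s^2} \\
L^* s &= \frac{m}{s} = \mathrm{SR}
\end{align*}
```

So the standard deviation at the optimal leverage, L* times s, equals the Sharpe Ratio.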

But in a non Gaussian world, CAGR will change differently according to the character of the strategy, and this Kelly result will not hold. Consider a negatively skewed strategy, with mostly positive returns, and one massive negative return - a day when we lose 50%. It might have a sufficiently good SR that the optimal Gaussian leverage is 5. But applying leverage of 2 or more times to that strategy will put the CAGR at zero! Conversely, a positively skewed strategy which loses 1% every day and has one massive positive return will require leverage of 100 or more times to hit a zero CAGR.
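The two extreme cases can be checked with a couple of lines: the leverage at which a single period wipes out the account is just one divided by the size of the worst loss. The return series below are made up to match the description, and the function name is hypothetical.

```python
def ruin_leverage(returns):
    """Relative leverage at which the worst single period loses 100% of capital."""
    worst = min(returns)
    if worst >= 0:
        return float("inf")  # no losing periods, so no leverage level causes ruin
    return 1.0 / -worst


negative_skew = [0.01] * 99 + [-0.50]   # steady gains, one day losing 50%
positive_skew = [-0.01] * 99 + [2.00]   # steady 1% losses, one massive gain

print(ruin_leverage(negative_skew), ruin_leverage(positive_skew))
```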

What this means is that negatively skewed strategies can't be run at such a high relative leverage before they get to the point of their maximum CAGR, which is also their Kelly optimal level; and beyond that level they will see a sharper reduction in their CAGR. There is a graph in my first book, Systematic Trading, which shows this happening:

Y-axis is geometric return, X-axis is target annualised standard deviation. Each line shows a strategy with different skew. The optimum risk target is higher for positively skewed strategies.

Another way of thinking about this: to approximate the geometric mean for a normal distribution you can use:

m - 0.5s^2

Where m is the arithmetic mean, and s is the standard deviation. But this won't work for a negatively skewed strategy! That will have a lower geometric mean than what is given by the approximation. And a positively skewed strategy will have a higher GM (see here for details).

Consider then in general terms the following two alternatives:

A- Something with a better Sharpe Ratio, but worse skew

B- Something with a worse Sharpe Ratio, but better skew

It might help to think of a pair of concrete examples, not related to vol targeting. Option A could be an equity neutral strategy, which with its starting leverage has a very low standard deviation but nasty skew. Let's say it returns 5% arithmetic mean with 5% standard deviation. The CAGR will be a little below 4.9% (using the approximation above, knocking something off for negative skew).

Option B is some trend following strategy (with or without vol targeting - you choose!) with a 15% arithmetic mean and 20% standard deviation, but with positive skew. This is a worse Sharpe Ratio. But without applying any leverage it has the better CAGR. It will be a little above 13% (adding something on for positive skew).

What happens when we increase leverage? To begin with, option A will look better. It might be that we can apply double relative leverage, which will give us a CAGR just below 9.5%. With triple relative leverage, if the skew isn't too bad, we can get a CAGR of just under 14%, which will be better than option B.

However we can also increase the leverage on option B. With double leverage it would have a CAGR of just over 22%.

We can keep going down this path, and if both A and B were Gaussian normal with zero skew, then strategy A would achieve maximum CAGR with 20x leverage (!) and a standard deviation of 100% (!!), for a CAGR of 50%; whilst strategy B would have maximum CAGR with relative leverage of 3.75, and a standard deviation of 75%, for a CAGR of just over 28%.
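The arithmetic for options A and B can be reproduced with the Gaussian approximation (mean minus half the variance), ignoring any adjustment for skew. The function names are made up for this sketch.

```python
def approx_cagr(mean, stdev, leverage=1.0):
    """Gaussian approximation to geometric return at a given relative leverage."""
    return leverage * mean - 0.5 * (leverage * stdev) ** 2


def gaussian_optimal_leverage(mean, stdev):
    """Leverage that maximises approx_cagr: mean divided by variance."""
    return mean / stdev ** 2


option_a = (0.05, 0.05)   # 5% mean, 5% standard deviation
option_b = (0.15, 0.20)   # 15% mean, 20% standard deviation

for name, (mean, stdev) in (("A", option_a), ("B", option_b)):
    best = gaussian_optimal_leverage(mean, stdev)
    print(name,
          [round(approx_cagr(mean, stdev, lev), 5) for lev in (1, 2, 3)],
          round(best, 2),
          round(approx_cagr(mean, stdev, best), 5))
```

These are the zero skew figures; the text's "a little below" and "just over" reflect the skew adjustment, which this approximation does not capture.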

But they aren't Gaussian normal, so at some point before then it's quite likely that the negative skew of strategy A will start to cause serious problems. For example if the worst loss on strategy A was 10%, then it would hit zero CAGR for all relative leverage above x10, and its optimal leverage and maximum CAGR would be considerably less than the 20x in the Gaussian case. Conversely, strategy B is unlikely to hit the point of ruin until much later.

The upshot of this is: In a CAGR fight between two strategies, the winner will be the one whose skew & kurtosis / sharpe ratio trade off is optimal.

Now, in many cases it's unwise to run exactly at optimal leverage, or full Kelly, because we don't know exactly what the Sharpe or skew are in practice. But generally speaking if something has a higher CAGR at its optimal leverage, then that will be a safer strategy to run at a lower leverage since there is a lower risk of ruin. It will generally be true that for a given level of risk (standard deviation), you will want the strategy with the best CAGR, which will usually be the strategy with the highest optimal CAGR.

So the metric of maximum CAGR at optimal leverage is generally useful.

Optimal leverage and CAGR for vol targeting

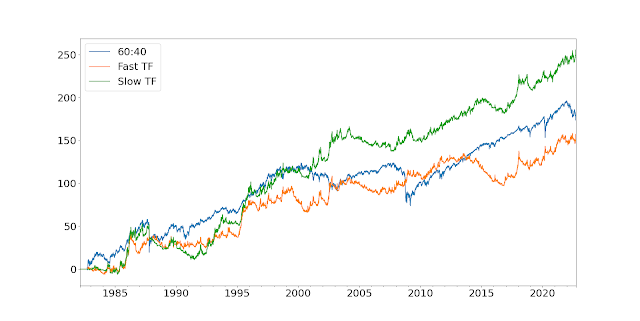

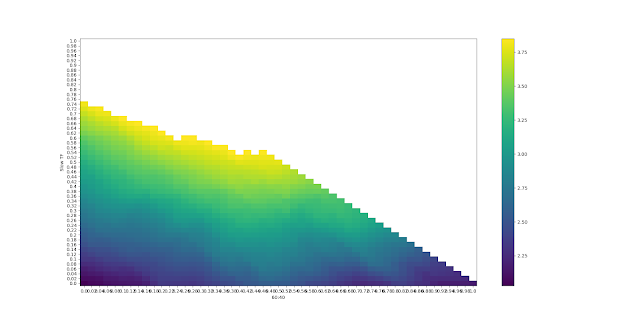

Back to the empirical results. Let's consider then how CAGR changes for the examples we are considering. Each of the following plots shows the CAGR (Y axis) for different styles of strategy (coloured lines) at different levels of relative leverage (X-axis), where relative leverage 1 is the original strategy.

Starting with EWMAC4:

The original relative leverage is 1, where we know that FV is the better option although the other strategies are fairly close - it's a short time period so changing vol doesn't affect things too much. The CAGR improves with more leverage, up to a point. For FV that's at about 3 times the original leverage. The other strategies can take a bit more leverage, mainly because they have lower starting standard deviation.

Incidentally for leverage of 6 the 1E1S strategy has at least one day when it loses 100% or more - hence the CAGR going to zero. A similar thing happens for FV, but not until much later. It will happen for the other two strategies, but not in the range of leverage shown.

The important thing here is that the FV strategy has a higher maximum CAGR than say 1E1S; but it's achieved at a lower leverage. We'd still want to use FV to maximise CAGR, even if we had a free hand on choosing leverage.

(The CAGR are pretty similar, and that's because this is a fast trading strategy)

I will quickly show the other forecasts - which show a similar picture - and then do more detailed analysis.

EWMAC8:

EWMAC64:

EWMACX (Everything):

Let's focus on the final plot, which shows the results from running all the different speeds of trend following together (EWMACX). The best leverage (highest CAGR) for FV is roughly around 5x, and for 1E1S around 6x; for 1E1E it's roughly 3x, and for BV approximately 7 times.

Let's quickly remind ourselves of the starting statistics for each strategy, with relative leverage of 1:

| Statistic | FV | BV | 1E1S | 1E1E |
|---|---|---|---|---|
| stdev | 21.5 | 16.8 | 13.2 | 23.1 |
| mean | 25.3 | 19.4 | 11.6 | 20.3 |
| costs | -1.1 | -1.0 | -0.4 | -0.9 |
| cagr | 25.8 | 19.7 | 11.3 | 19.3 |

OK, so what happens if we apply the optimal relative leverage ratios above? We get (the relative leverage numbers in the column headers):

| Statistic | 5xFV | 7xBV | 6x1E1S | 3x1E1E |
|---|---|---|---|---|
| stdev | 107.5 | 117.6 | 79.2 | 69.3 |
| mean | 126.5 | 135.8 | 69.6 | 60.9 |
| costs | -5.5 | -7.0 | -2.4 | -2.7 |
| cagr | 129.0 | 137.9 | 67.8 | 57.9 |

The vol targeted strategies achieve about double what the non vol targeted achieve.

But, that risk is kind of crazy, so how about if we run it at "Half Kelly", half the optimal leverage figures?

| Statistic | 2.5xFV | 3.5xBV | 3x1E1S | 1.5x1E1E |
|---|---|---|---|---|
| stdev | 53.8 | 58.8 | 39.6 | 34.7 |
| mean | 63.3 | 67.9 | 34.8 | 30.5 |
| costs | -2.8 | -3.5 | -1.2 | -1.4 |
| cagr | 64.5 | 69.0 | 33.9 | 29.0 |

Still not great for non vol targeting. OK....how about if we run everything at a 30% annualised standard deviation? That's pretty much the top end for an institutional fund.

| Statistic | 1.4xFV | 1.8xBV | 2.3x1E1S | 1.3x1E1E |
|---|---|---|---|---|
| stdev | 30.0 | 30.0 | 30.0 | 30.0 |
| mean | 35.3 | 34.6 | 26.4 | 26.4 |
| costs | -1.5 | -1.8 | -0.9 | -1.2 |
| cagr | 36.0 | 35.2 | 25.7 | 25.1 |

We still get a CAGR that's about 40% higher with the same annualised standard deviation.
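The 30% figures are consistent with scaling each starting statistic by 30 divided by the starting standard deviation, which can be sketched as follows. Scaling the CAGR row linearly is a simplification assumed here because it matches the numbers shown; the function name and dictionary layout are made up for this sketch.

```python
# Starting statistics at relative leverage of 1 (ewmacx)
starting = {
    "FV":   {"stdev": 21.5, "mean": 25.3, "costs": -1.1, "cagr": 25.8},
    "BV":   {"stdev": 16.8, "mean": 19.4, "costs": -1.0, "cagr": 19.7},
    "1E1S": {"stdev": 13.2, "mean": 11.6, "costs": -0.4, "cagr": 11.3},
    "1E1E": {"stdev": 23.1, "mean": 20.3, "costs": -0.9, "cagr": 19.3},
}


def scale_to_risk(row, target_stdev):
    """Relative leverage needed to hit the target, and the linearly scaled statistics."""
    leverage = target_stdev / row["stdev"]
    scaled = {name: round(value * leverage, 1) for name, value in row.items()}
    return leverage, scaled


results = {style: scale_to_risk(row, 30.0) for style, row in starting.items()}
for style, (leverage, scaled) in results.items():
    print(style, round(leverage, 1), scaled)

# Ratio of the better vol targeted CAGR to the better non vol targeted CAGR
advantage = results["FV"][1]["cagr"] / results["1E1S"][1]["cagr"]
print(round(advantage, 2))
```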

That's a series of straight wins for vol targeting, regardless of the relative leverage or resulting level of risk.

In fact, from the graph above, the only time when at least one of the non vol targeted strategies (1E1S) beats one of the vol targeted strategies (FV) is for a very high relative leverage, much higher than any sensible person would run at. To put it another way, we need an awful lot of risk before the less positive skew of FV becomes enough of a drag to overcome the Sharpe ratio advantage it has.

Finally, I know a lot of people will be interested in the ratio CAGR/maximum drawdown. So I quickly plotted that up as well. It tells the same story:

Conclusions

The backtest evidence shows that you can achieve a higher maximum CAGR with vol targeting, because it has a large Sharpe Ratio advantage that is only partly offset by its small skew disadvantage. For lower levels of relative leverage, at more sensible risk targets, vol targeting still has a substantially higher CAGR. The slightly worse skew of vol targeting does not become problematic enough to overcome the SR advantage, except at extremely high levels of risk; well beyond what any sensible person would run.

Now it's very hard to win these kinds of arguments because you run up against the inevitable straw man system effect. Whatever results I show here you can argue wouldn't hold with the particular system that you, as a purer trend follower reading this post, happen to be running. The other issue you could argue about is whether any back test is to be trusted; even one like this with 100 instruments and up to 50 years of data, and no fitting.

(At least any implicit fitting, in choosing the set of opening rules used, affects all the strategies to the same degree).

However, we can make the following general observations, irrespective of the backtest results.

It's certainly true and a fair criticism that evaluating strategies purely on Sharpe Ratio means that you will end up favouring negative skew strategies with low standard deviation, because it looks like they can be leveraged up to hit a higher CAGR. But they can't, because they will blow up! Equity market neutral, option selling, fixed income RV, and EM FX carry on quasi pegged currencies .... all fit into this category.

A lower Sharpe Ratio trend following type strategy, with positive skew and usually higher standard deviation, is actually a better bet. You can leverage it up a little more, if you wish, to achieve a higher maximum CAGR. But even at sensible risk target levels it will have a higher CAGR than the strategies with blow up risk.

But that's not what we have here! We have an alternative strategy (vol targeting) which has a significant Sharpe Ratio advantage, but which still has positive skew, just not quite as good as non vol targeting.

For my backtested results above to be wrong, it must be true that either

(i) vol targeting has some skeletons in the form of much nastier skew than the backtest suggests,

(ii) the true positive skew for non vol targeting is much higher than in the backtest,

(iii) the Sharpe ratio advantage of vol targeting is massively overstated,

or (iv) the costs of vol targeting are substantially higher than in the backtest.

The first point is a valid criticism of option selling or EM FX carry type strategies with insufficient back test data (the 'peso problem'), the archetype of negative skew, but I do not think it is likely that trend following vol targeting suffers from this backtesting bias. Ultimately we cut positions that move against us; in nearly all cases quicker than they are cut by non vol targeting. Of course this curtails right tail outliers, but it also means we are extremely unlikely to see large left tail outliers.

Nor do I think that it is plausible that the non vol targeted strategies have undiscovered additional positive skew that isn't present in the backtest. Even if there was, we'd need an astonishing amount of additional skew to overcome the Sharpe Ratio disadvantage at sensible levels of risk.

I also think it's highly plausible that vol targeting has a Sharpe Ratio advantage; it strives for more consistent expected risk (measured by standard deviation), so it's unsurprising it does better based on this metric. I've never met anyone who thinks that vol targeting has a lower SR than non vol targeting - all the traditional trend followers I know use this as a reason for pooh-poohing the Sharpe Ratio as a performance measure! Finally the costs of vol targeting would need to be EIGHT TIMES HIGHER than in the backtest for it to be suboptimal. Again this seems unlikely - and I've consistently achieved my backtested costs year after year with my vol targeted system.

In conclusion then:

It is perfectly valid to express a preference for positive skew above all else, and to select a non vol targeted strategy on that basis, but to do so because you think you will get a higher terminal wealth (equivalent to a higher maximum CAGR, or higher CAGR at some given level of risk) is incorrect and not supported by the evidence.

Postscript

I added three very slow rules to address a point made on twitter: EWMAC128,512; EWMAC256,1024; and EWMAC512,2048. For interest's sake, the average holding period of these rules is between one and two years.

Quick summary: As we slow down beyond EWMAC64 performance tends to degrade on all metrics regardless of whether you are vol targeting or not. This is because most assets tend to mean revert at that point. Not shown here, but the Beta of your strategies also increases - you get more correlated to long only portfolios, because historically at least most assets have gone up; and your alpha reduces.

The SR advantage of vol targeting improves, but the skew shows a mixed picture; overall at 'natural' leverage the CAGR of vol targeting improves further as we slow down. However there is a huge difference between 1E1S and 1E1E: the latter ends up with negative skew and, for the slowest rule, a negative CAGR; similarly BV looks better than FV as we slow down.

The maximum optimal CAGR is higher for vol targeting on these slower systems, and the advantage increases as we slow down.

I would also add a huge note of caution: with such slow trading systems, even with 100+ instruments and up to 50 years of data the number of unique data points is relatively small, so the results are unlikely to be statistically significant - which is why I usually don't go this slow for my back tests.

Standard deviation

| Rule | FV | BV | 1E1S | 1E1E |
|---|---|---|---|---|
| ewmac128 | 14.8 | 16.3 | 14.2 | 34.5 |
| ewmac256 | 17.2 | 16.1 | 16.0 | 37.4 |
| ewmac512 | 18.0 | 15.9 | 16.0 | 42.2 |

Mean

| Rule | FV | BV | 1E1S | 1E1E |
|---|---|---|---|---|
| ewmac128 | 11.5 | 12.8 | 9.0 | 13.2 |
| ewmac256 | 10.3 | 10.1 | 6.9 | 10.5 |
| ewmac512 | 8.5 | 9.2 | 4.8 | 7.6 |

Sharpe Ratio

| Rule | FV | BV | 1E1S | 1E1E |
|---|---|---|---|---|
| ewmac128 | 0.78 | 0.78 | 0.63 | 0.38 |
| ewmac256 | 0.59 | 0.63 | 0.43 | 0.28 |
| ewmac512 | 0.47 | 0.58 | 0.30 | 0.18 |

Skew

| Rule | FV | BV | 1E1S | 1E1E |
|---|---|---|---|---|
| ewmac128 | 0.48 | 0.39 | 2.80 | -2.35 |
| ewmac256 | 0.11 | 0.36 | 3.81 | -2.55 |
| ewmac512 | -0.27 | 0.29 | 2.13 | -4.11 |

Lower tail ratio

| Rule | FV | BV | 1E1S | 1E1E |
|---|---|---|---|---|
| ewmac128 | 1.90 | 1.51 | 1.81 | 3.46 |
| ewmac256 | 1.72 | 1.48 | 1.89 | 3.45 |
| ewmac512 | 1.60 | 1.48 | 2.11 | 3.71 |

CAGR

| Rule | FV | BV | 1E1S | 1E1E |
|---|---|---|---|---|
| ewmac128 | 11.02 | 12.10 | 8.32 | 7.43 |
| ewmac256 | 9.18 | 9.22 | 5.76 | 3.46 |
| ewmac512 | 7.12 | 8.24 | 3.61 | -1.43 |

Results of applying leverage: EWMAC128

EWMAC256

EWMAC512
