* It was me. "Systematic Trading" page 40

Skew, then, is an important concept, and one which I find myself thinking about a lot. So I've decided to write a series of posts about skew, of which this is the first.

In fact I've already written a substantive post on trend following and skew, so this post is sort of the prequel to that. This then is actually the second post in the series, but really it's the first, because you should read this one first. Don't tell me you're confused, I know for a fact everyone reading this is fine with the fact that the Star Wars films came out in the order 4,5,6,1,2,3,7,8,9.

In this post I'll talk about two things. Firstly I will (briefly) discuss the difficulties of measuring skew: yes, it's my old favourite subject, sampling variation. Secondly I'll talk (at great length) about how skew can predict expected returns by answering the following questions:

- Do futures with negative skew generally have higher returns than those with positive skew? (strategic allocation)

- Does this effect still hold when we adjust returns for risk (using standard deviations only)? (risk adjusted returns)

- Are these rewards because we're taking on more risk in the form of

- Does an asset that currently has negative skew outperform one that has positive skew? (time series and forecasting)

- Does an asset with lower skew than normal perform better than average (normalised time series)?

- Do these effects hold within asset classes? (relative value)

Some of these are well known results, others might be novel (I haven't checked - this isn't an academic paper!). In particular, this is the canonical paper on skew for futures, but it focuses on commodity futures. There has also been research on single equities that might be relevant (or it may not: "Aggregate stock market returns display negative skewness. Firm-level stock returns display positive skewness.", from here).

This is the sort of 'pre-backtest' analysis that you should do with a new trading strategy idea, to get a feel for it. What we need to be wary of is implicit fitting: deciding to pursue certain variations and not others, having seen the entire sample. I will touch on this again later.

I will assume you're already familiar with the basics of skew. If you're not, then you can (i) read "Systematic Trading" (again), (ii) read the first part of this post, or (iii) use the magical power of the internet, or if you're desperate (iv) read a book on statistics.

Variance in skew sample estimates

Really quick reminder: the variance in a sample estimate tells us how confident we can be in a particular estimate of some property. The degree of confidence depends on how much data we have (more data: more confident), the amount of variability in the data (e.g. for sample means, less volatility: more confident), and the estimator we are using (e.g. estimates of standard deviation have relatively low sampling variation).

We can estimate sampling distributions in two ways: using closed form formulae, or using a monte carlo estimator.

Closed form formulae are available for things like mean, standard deviation, Sharpe ratio and correlation estimates; but they usually assume i.i.d. Gaussian returns (normally distributed and serially uncorrelated). For example, the variance of the mean estimate is sigma^2 / N, where sigma^2 is the sample variance estimate and N is the number of data points (equivalently, the standard error of the mean is sigma / sqrt(N)).
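To make the closed form concrete, here is a small sketch on simulated i.i.d. Gaussian returns (not the futures data used below; the drift, volatility and sample length are arbitrary assumptions). It compares the closed form standard error of the mean, sigma / sqrt(N), with the spread of bootstrapped mean estimates. Under these assumptions the two should roughly agree, which is why Monte Carlo is a reasonable fallback when no usable closed form exists.

```python
import numpy as np

rng = np.random.default_rng(42)

# Assumption: simulated i.i.d. Gaussian daily returns, ~10 years at 1% daily vol
n_days = 2500
returns = rng.normal(loc=0.0003, scale=0.01, size=n_days)

# Closed form: Var(mean) = sigma^2 / N, so the standard error is sigma / sqrt(N)
closed_form_se = returns.std(ddof=1) / np.sqrt(n_days)

# Monte Carlo: resample with replacement and re-estimate the mean each time
monte_carlo_count = 1000
resample_index = rng.integers(0, n_days, size=(monte_carlo_count, n_days))
bootstrap_means = returns[resample_index].mean(axis=1)
bootstrap_se = bootstrap_means.std(ddof=1)

print(f"closed form standard error: {closed_form_se:.6f}")
print(f"bootstrap standard error:   {bootstrap_se:.6f}")
```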

What is the closed form formula for skew? Well, assuming Gaussian returns the formula is as follows:

Skew = 0

Obviously that isn't much use! To get a closed form we'd need to assume our returns had some other distribution. And the closed forms tend to be pretty horrific, and also the distributions aren't normally much use if there are outliers (something which, as we shall see, has a fair old effect on the variance). So let's stick to using monte carlo.

Obviously to do that, we're going to need some data. Let's turn to my sadly neglected open source project, pysystemtrade.

```python
import numpy as np
from systems.provided.futures_chapter15.estimatedsystem import *

system = futures_system()
del(system.config.instruments)  # so we can get results for everything
instrument_codes = system.get_instrument_list()

percentage_returns = dict()
for code in instrument_codes:
    denom_price = system.rawdata.daily_denominator_price(code)
    instr_prices = system.rawdata.get_daily_prices(code)
    num_returns = instr_prices.diff()
    perc_returns = num_returns / denom_price.ffill()

    # there are some false outliers in the data, let's remove them
    vol_norm_returns = system.rawdata.norm_returns(code)
    perc_returns[abs(vol_norm_returns) > 10] = np.nan

    percentage_returns[code] = perc_returns
```

We'll use this data throughout the rest of the post; if you want to analyse your own data then feel free to substitute it in here.

Pandas has a way of measuring skew:

```python
percentage_returns["VIX"].skew()
# returns 0.32896199946754984
```

We're ignoring for now the question of whether we should use daily, weekly or whatever returns to define skew.

However this doesn't capture the uncertainty in this estimate. Let's write a quick function to get that information:

```python
import random

def resampled_skew_estimator(data, monte_carlo_count=500):
    """
    Get a distribution of skew estimates

    :param data: some time series
    :param monte_carlo_count: number of goes we monte carlo for
    :return: list
    """
    skew_estimate_distribution = []
    for _notUsed in range(monte_carlo_count):
        # resample with replacement; .iloc because the index holds dates, not positions
        resample_index = [random.randrange(len(data)) for _alsoNotUsed in range(len(data))]
        resampled_data = data.iloc[resample_index]
        sample_skew_estimate = resampled_data.skew()
        skew_estimate_distribution.append(sample_skew_estimate)

    return skew_estimate_distribution
```

Now I can plot the distribution of the skew estimate for an arbitrary market:

```python
import matplotlib.pyplot as pyplot

data = percentage_returns['VIX']
x = resampled_skew_estimator(data, 1000)
pyplot.hist(x, bins=30)
```

Boy... that is quite a range. It's plausible that the skew of VIX (one of the most positively skewed assets in my dataset) could be zero. It's equally possible it could be around 0.6. Clearly we should be quite careful about interpreting small differences in skew as anything significant.
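Reading an interval off the bootstrapped distribution only takes a couple of percentiles. The sketch below is a vectorised variant of resampled_skew_estimator (a numpy index matrix instead of a Python loop), applied to simulated lognormal returns that are an assumption standing in for VIX; the 5th to 95th percentile range is one simple, fairly crude way to summarise the uncertainty.

```python
import numpy as np
import pandas as pd


def bootstrap_skew(data: pd.Series, monte_carlo_count: int = 1000, seed: int = 0) -> np.ndarray:
    """Skew of each of monte_carlo_count resamples (drawn with replacement) of data."""
    rng = np.random.default_rng(seed)
    values = data.dropna().to_numpy()
    idx = rng.integers(0, len(values), size=(monte_carlo_count, len(values)))
    # one resample per row; DataFrame.skew uses the same adjusted estimator as Series.skew
    return pd.DataFrame(values[idx]).skew(axis=1).to_numpy()


# Assumption: positively skewed returns from a demeaned lognormal, standing in for VIX
rng = np.random.default_rng(1)
raw = rng.lognormal(mean=0.0, sigma=0.5, size=2000)
returns = pd.Series((raw - raw.mean()) * 0.02)

skews = bootstrap_skew(returns)
point_estimate = returns.skew()
lower, upper = np.percentile(skews, [5, 95])
print(f"skew {point_estimate:.2f}, 90% bootstrap interval [{lower:.2f}, {upper:.2f}]")
```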

Let's look at the distribution across all of our different futures instruments:

```python
# do a boxplot for everything
import pandas as pd

df_skew_distribution = dict()
for code in instrument_codes:
    print(code)
    x = resampled_skew_estimator(percentage_returns[code], 1000)
    y = pd.Series(x)
    df_skew_distribution[code] = y

df_skew_distribution = pd.DataFrame(df_skew_distribution)
# sort columns by mean skew: most negative on the left
df_skew_distribution = df_skew_distribution.reindex(df_skew_distribution.mean().sort_values().index, axis=1)
df_skew_distribution.boxplot()
pyplot.xticks(rotation=90)
```

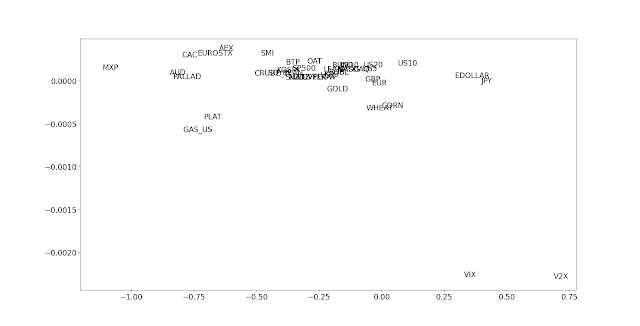

It looks like:

- Most assets are negatively skewed

- Positively skewed assets are kind of logical: V2X, VIX (vol), JPY (safe haven currency), US 10 year, Eurodollar (bank accounts backed by the US government).

- The most negatively skewed assets include stock markets and carry currencies (like MXP, AUD) but also some commodities

- Several pinches of salt should be used here, as very few assets have statistically significant skew in either direction.

- More negatively and positively skewed assets have wider confidence intervals

- Positively skewed assets have a positively skewed estimate for skew; and vice versa for negative skew

- There are some particularly fat tailed assets whose confidence intervals are especially wide: Corn, V2X, Eurodollar, US 2 year.

Bear in mind that not all instruments have the same length of data, and in particular many do not include 2008.
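One rough way to check whether an instrument's skew is statistically significant is to ask whether its bootstrap interval excludes zero. This is a sketch, not how the boxplot above was produced: the 90% two-sided percentile interval, the simulated data and the instrument names are all assumptions for illustration.

```python
import numpy as np
import pandas as pd


def skew_is_significant(data, monte_carlo_count=500, alpha=0.10, seed=0):
    """True if the (1 - alpha) bootstrap percentile interval for skew excludes zero."""
    rng = np.random.default_rng(seed)
    values = np.asarray(data, dtype=float)
    values = values[~np.isnan(values)]
    idx = rng.integers(0, len(values), size=(monte_carlo_count, len(values)))
    skews = pd.DataFrame(values[idx]).skew(axis=1)
    lower, upper = np.percentile(skews, [100 * alpha / 2, 100 * (1 - alpha / 2)])
    return bool(lower > 0 or upper < 0)


# Assumption: two hypothetical instruments, Gaussian and negated lognormal (crash-prone)
rng = np.random.default_rng(7)
instruments = {
    "GAUSSIAN": rng.normal(0.0, 0.01, 1500),
    "CRASHY": -(rng.lognormal(0.0, 0.75, 1500) - 1.0) * 0.01,
}
significance = {code: skew_is_significant(returns) for code, returns in instruments.items()}
print(significance)
```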

Do assets with negative skew generally have higher returns than those with positive skew?

Let's find out.

```python
# average return vs skew
avg_returns = [percentage_returns[code].mean() for code in instrument_codes]
skew_list = [percentage_returns[code].skew() for code in instrument_codes]

fig, ax = pyplot.subplots()
ax.scatter(skew_list, avg_returns, marker="")  # no markers: the text labels mark the points
for i, txt in enumerate(instrument_codes):
    ax.annotate(txt, (skew_list[i], avg_returns[i]))
```

Ignoring the two vol markets, it looks like there might be a weak relationship there. But there is huge uncertainty in return estimates. Let's bootstrap the distribution of mean estimates, and plot them with the most negative skew on the left and the most positive skew on the right:

```python
def resampled_mean_estimator(data, monte_carlo_count=500):
    """
    Get a distribution of mean estimates

    :param data: some time series
    :param monte_carlo_count: number of goes we monte carlo for
    :return: list
    """
    mean_estimate_distribution = []
    for _notUsed in range(monte_carlo_count):
        # resample with replacement, using positional indices
        resample_index = [random.randrange(len(data)) for _alsoNotUsed in range(len(data))]
        resampled_data = data.iloc[resample_index]
        sample_mean_estimate = resampled_data.mean()
        mean_estimate_distribution.append(sample_mean_estimate)

    return mean_estimate_distribution


df_mean_distribution = dict()
for code in instrument_codes:
    print(code)
    x = resampled_mean_estimator(percentage_returns[code], 1000)
    y = pd.Series(x)
    df_mean_distribution[code] = y

df_mean_distribution = pd.DataFrame(df_mean_distribution)
# same ordering as the skew boxplot: most negative skew on the left
df_mean_distribution = df_mean_distribution[df_skew_distribution.columns]
df_mean_distribution.boxplot()
pyplot.xticks(rotation=90)
```

Again, apart from the vol markets, it's hard to see much there. Let's lump together all the instruments with above average skew (high skew), and those with below average skew (low skew):

```python
skew_by_code = df_skew_distribution.mean()
avg_skew = np.mean(skew_by_code.values)
low_skew_codes = list(skew_by_code[skew_by_code < avg_skew].index)
high_skew_codes = list(skew_by_code[skew_by_code >= avg_skew].index)


def resampled_mean_estimator_multiple_codes(percentage_returns, code_list, monte_carlo_count=500):
    """
    :param percentage_returns: dict of returns
    :param code_list: list of str, a subset of percentage_returns.keys
    :param monte_carlo_count: how many times
    :return: list of mean estimates
    """
    mean_estimate_distribution = []
    for _notUsed in range(monte_carlo_count):
        # randomly choose a code
        code = code_list[random.randrange(len(code_list))]
        data = percentage_returns[code]
        resample_index = [random.randrange(len(data)) for _alsoNotUsed in range(len(data))]
        resampled_data = data.iloc[resample_index]
        sample_mean_estimate = resampled_data.mean()
        mean_estimate_distribution.append(sample_mean_estimate)

    return mean_estimate_distribution


df_mean_distribution_multiple = dict()
df_mean_distribution_multiple['High skew'] = resampled_mean_estimator_multiple_codes(percentage_returns, high_skew_codes, 1000)
df_mean_distribution_multiple['Low skew'] = resampled_mean_estimator_multiple_codes(percentage_returns, low_skew_codes, 1000)
df_mean_distribution_multiple = pd.DataFrame(df_mean_distribution_multiple)
df_mean_distribution_multiple.boxplot()
```

Incidentally, I've truncated the plots here because there is a huge tail of negative returns for high skew: basically the vol markets. The means and medians are instructive. Multiplied by 250 to annualise, the mean return is -6.6% for high skew and +1.8% for low skew. Without that long tail having such an impact, the medians are much closer: +0.9% for high skew and +2.2% for low skew.

If I take out the vol markets I get means of 0.6% and 1.7%, and medians of 1.2% and 2.3%. The medians are barely affected, but the ridiculously low mean return for the high skew group is taken out.

So there is something there: of the order of a 1.0% advantage in extra annual returns for owning markets with lower than average skew. If you're an investor with a high tolerance for risk who can't use leverage, well, you can stop reading now.

Does this effect still hold when we adjust returns for risk (using standard deviations only)?

Excellent question. Let's find out.

```python
# sharpe ratio vs skew
sharpe_ratios = [16.0 * percentage_returns[code].mean() / percentage_returns[code].std() for code in instrument_codes]
skew_list = [percentage_returns[code].skew() for code in instrument_codes]

fig, ax = pyplot.subplots()
ax.scatter(skew_list, sharpe_ratios, marker="")
for i, txt in enumerate(instrument_codes):
    ax.annotate(txt, (skew_list[i], sharpe_ratios[i]))
```

Hard to see any relationship here, although the two vol markets still stand out as outliers.
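The constant 16.0 in the Sharpe Ratio code annualises a daily ratio: under i.i.d. returns the Sharpe Ratio scales with the square root of time, and with roughly 256 business days a year sqrt(256) = 16. Using 250 days, as in the mean return annualisation earlier, would give sqrt(250), about 15.8, so the choice makes little difference. A minimal helper making that explicit (the helper name and the simulated data are illustrative assumptions):

```python
import numpy as np
import pandas as pd


def annualised_sharpe(daily_returns: pd.Series, days_per_year: int = 256) -> float:
    """Daily mean / daily std, scaled up by sqrt(days_per_year)."""
    return float(np.sqrt(days_per_year) * daily_returns.mean() / daily_returns.std())


# Assumption: hypothetical daily returns, ~10 years of business days
rng = np.random.default_rng(3)
daily = pd.Series(rng.normal(0.0004, 0.01, 2560))

print(annualised_sharpe(daily))                     # identical to 16 * mean / std
print(annualised_sharpe(daily, days_per_year=250))  # slightly smaller, by sqrt(250 / 256)
```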

Let's skip straight to the high skew/low skew plot, this time for Sharpe Ratios:

```python
def resampled_SR_estimator_multiple_codes(percentage_returns, code_list, monte_carlo_count=500, avoiding_vol=False):
    """
    :param percentage_returns: dict of returns
    :param code_list: list of str, a subset of percentage_returns.keys
    :param monte_carlo_count: how many times
    :param avoiding_vol: if True, never sample the vol markets (VIX, V2X)
    :return: list of SR estimates
    """
    SR_estimate_distribution = []
    for _notUsed in range(monte_carlo_count):
        # randomly choose a code
        if avoiding_vol:
            code = "VIX"
            while code in ["VIX", "V2X"]:
                code = code_list[random.randrange(len(code_list))]
        else:
            code = code_list[random.randrange(len(code_list))]

        data = percentage_returns[code]
        resample_index = [random.randrange(len(data)) for _alsoNotUsed in range(len(data))]
        resampled_data = data.iloc[resample_index]

        # annualised Sharpe Ratio: 16 is roughly sqrt(256) business days
        SR_estimate = 16.0 * resampled_data.mean() / resampled_data.std()
        SR_estimate_distribution.append(SR_estimate)

    return SR_estimate_distribution


df_SR_distribution_multiple = dict()
df_SR_distribution_multiple['High skew'] = resampled_SR_estimator_multiple_codes(percentage_returns, high_skew_codes, 1000)
df_SR_distribution_multiple['Low skew'] = resampled_SR_estimator_multiple_codes(percentage_returns, low_skew_codes, 1000)
df_SR_distribution_multiple = pd.DataFrame(df_SR_distribution_multiple)
df_SR_distribution_multiple.boxplot()
```

Hard to see what difference, if any, there is. The summary statistics are even more telling:

Mean: High skew 0.22, Low skew 0.26

Median: High skew 0.22, Low skew 0.20

Once we adjust for risk, or at least risk measured by the second moment of the distribution, then uglier skew (the third moment) doesn't seem to be rewarded by an improved return.

Things get even more interesting if we remove the vol markets again:

Mean: High skew 0.37, Low skew 0.24

Median: High skew 0.29, Low skew 0.17

A complete reversal! Probably not that significant, but a surprising turn of events none the less.

Does an asset that currently has negative skew outperform one that has positive skew? (time series and forecasting)

Average skew and average returns aren't that important or interesting; but it would be cool if we could use the current level of skew to predict risk adjusted returns in the following period.

An open question is, what is the current level of skew? Should we use skew defined over the last week? Last month? Last year? I'm going to check all of these, since I'm a big fan of time diversification for trading signals.

I'm going to get the distribution of risk adjusted returns (no need for bootstrapping) for the following N days, where skew over the previous N days has been higher or lower than average. Then I do a t-test to see if the realised Sharpe Ratio is statistically significantly better in a low skew versus high skew environment.


```python
from scipy import stats

all_SR_list = []
all_tstats = []
all_frequencies = ["7D", "14D", "1M", "3M", "6M", "12M"]

for freqtouse in all_frequencies:
    all_results = []
    for instrument in instrument_codes:
        # we're going to do rolling returns
        perc_returns = percentage_returns[instrument]
        start_date = perc_returns.index[0]
        end_date = perc_returns.index[-1]
        periodstarts = list(pd.date_range(start_date, end_date, freq=freqtouse)) + [end_date]

        for periodidx in range(len(periodstarts) - 2):
            # avoid snooping: skew is measured up to the day before the subsequent period starts
            p_start = periodstarts[periodidx] + pd.DateOffset(-1)
            p_end = periodstarts[periodidx + 1] + pd.DateOffset(-1)
            s_start = periodstarts[periodidx + 1]
            s_end = periodstarts[periodidx + 2]

            period_skew = perc_returns[p_start:p_end].skew()
            subsequent_return = perc_returns[s_start:s_end].mean()
            subsequent_vol = perc_returns[s_start:s_end].std()
            subsequent_SR = 16 * (subsequent_return / subsequent_vol)

            if np.isnan(subsequent_SR) or np.isnan(period_skew):
                continue
            else:
                all_results.append([period_skew, subsequent_SR])

    all_results = pd.DataFrame(all_results, columns=['x', 'y'])
    avg_skew = all_results.x.median()

    subsequent_sr_distribution = dict()
    subsequent_sr_distribution['High_skew'] = all_results[all_results.x >= avg_skew].y
    subsequent_sr_distribution['Low_skew'] = all_results[all_results.x < avg_skew].y
    subsequent_sr_distribution = pd.DataFrame(subsequent_sr_distribution)

    med_SR = subsequent_sr_distribution.median()
    tstat = stats.ttest_ind(subsequent_sr_distribution.High_skew,
                            subsequent_sr_distribution.Low_skew,
                            nan_policy="omit").statistic

    all_SR_list.append(med_SR)
    all_tstats.append(tstat)

all_tstats = pd.Series(all_tstats, index=all_frequencies)
all_tstats.plot()
```

Here are the T-statistics. Large negative numbers mean a bigger difference in performance. It looks like we get significant results measuring skew over at least the last 1 month or so.
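For reference, scipy's stats.ttest_ind with its default equal_var=True is the classic two-sample t-test with pooled variance, and nan_policy="omit" drops the NaNs in each sample first. Because the high skew group is passed first, the statistic is (high minus low) divided by its standard error, which is why negative values favour low skew. A numpy-only sketch of the same calculation, on simulated Sharpe Ratios (the group means and sizes are assumptions):

```python
import numpy as np


def pooled_t_statistic(high, low):
    """Two-sample t-statistic with pooled variance, NaNs dropped from each sample."""
    high = np.asarray(high, dtype=float)
    low = np.asarray(low, dtype=float)
    high = high[~np.isnan(high)]
    low = low[~np.isnan(low)]
    n1, n2 = len(high), len(low)
    pooled_var = ((n1 - 1) * high.var(ddof=1) + (n2 - 1) * low.var(ddof=1)) / (n1 + n2 - 2)
    standard_error = np.sqrt(pooled_var * (1.0 / n1 + 1.0 / n2))
    return (high.mean() - low.mean()) / standard_error


# Assumption: simulated subsequent Sharpe Ratios, with low skew periods doing better
rng = np.random.default_rng(11)
high_skew_sr = rng.normal(0.0, 1.0, 400)
low_skew_sr = rng.normal(0.3, 1.0, 400)
print(pooled_t_statistic(high_skew_sr, low_skew_sr))
```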

How much is this worth to us? Here are the conditional median returns:

```python
all_SR_list = pd.DataFrame(all_SR_list, index=all_frequencies)
all_SR_list.plot()
```

Nice: an extra 0.1 to 0.4 Sharpe Ratio units. One staggering thing about this graph is that, when measuring skew over the last 3 to 12 months, assets with higher than average skew make basically no money.

Should we use 'lower than average skew' or 'negative skew' as our cutoff / demeaning point?

Up to now we've been using the median skew as our cutoff (which in a continuous trading system would be our demeaning point, i.e. we'd have positive forecasts for skew below the median, and negative forecasts for skew above it). This cutoff hasn't quite been zero, since on average more assets have negative skew. But is there something special about using a cutoff of zero?

Easy to check:

```python
# avg_skew = all_results.x.median()
# instead:
avg_skew = 0
```

With a less symmetric split we'd normally expect weaker statistical significance (since the 'high skew' group is a bit smaller now); however the results are almost the same. Personally I'm going to stick to using the median as my cutoff, since it will make my trading system more symmetric.
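The group sizes matter because, for a fixed total number of observations and equal variances, the standard error of a difference in means is sigma * sqrt(1/n_high + 1/n_low), which is smallest for an even split. A quick check of that arithmetic (the total of 1,000 observations and the unit sigma are arbitrary assumptions):

```python
import numpy as np


def standard_error_of_difference(n_total, fraction_high, sigma=1.0):
    """Std error of (mean_high - mean_low), both groups sharing the same std sigma."""
    n_high = n_total * fraction_high
    n_low = n_total - n_high
    return sigma * np.sqrt(1.0 / n_high + 1.0 / n_low)


for fraction_high in [0.5, 0.4, 0.3]:
    se = standard_error_of_difference(1000, fraction_high)
    print(f"high skew share {fraction_high:.0%}: standard error {se:.4f}")
```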

Does an asset with lower skew than normal perform better than average (normalised time series)?

The results immediately above can be summarised as:

- Assets which currently have more negative skew than average (measured as an average across all assets over all time) perform better

This confounds three possible effects:

- Assets with on average (over all time) more negative skew perform better on average (the first thing we checked - and on a risk adjusted basis the effect is pretty weak and mostly confined to the vol markets)

- Assets which have currently more negative skew than their own average perform better

- Assets which currently have more negative skew than the current average perform better than other assets

Let's check two and three.

First let's check the second effect, which can be rephrased as: is skew, demeaned by the average for an asset, predictive of future performance for that asset?

I'm going to use the average skew for the last 10 years to demean each asset.

Code the same as above, except:

```python
perc_returns = percentage_returns[instrument]
all_skew = perc_returns.rolling("3650D").skew()

...

period_skew = perc_returns[p_start:p_end].skew()
avg_skew = all_skew[:p_end].iloc[-1]
period_skew = period_skew - avg_skew
subsequent_return = perc_returns[s_start:s_end].mean()
```

The 'skew bonus' has reduced somewhat to around 0.2 SR points for the last 12 months of returns.
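The key detail in the change above is looking up the long-run skew as it stood at p_end, so the demeaning uses no future data. A self-contained sketch of that step on simulated fat-tailed returns (the dates, the Student-t returns and the helper name demeaned_period_skew are illustrative assumptions). Note that with a time-based window pandas defaults to min_periods=1, so early in the sample the "10 year" window covers much less history.

```python
import numpy as np
import pandas as pd

# Assumption: simulated fat-tailed daily returns on business days
rng = np.random.default_rng(5)
dates = pd.bdate_range("2000-01-03", periods=5000)
perc_returns = pd.Series(rng.standard_t(df=4, size=len(dates)) * 0.01, index=dates)

# Long-run reference: skew over a trailing ~10 year window of calendar time
all_skew = perc_returns.rolling("3650D").skew()


def demeaned_period_skew(p_start, p_end):
    """Hypothetical helper: skew over [p_start, p_end] minus the long-run skew known at p_end."""
    period_skew = perc_returns[p_start:p_end].skew()
    avg_skew = all_skew[:p_end].iloc[-1]  # last value at or before p_end: no lookahead
    return period_skew - avg_skew


print(demeaned_period_skew(pd.Timestamp("2015-01-01"), pd.Timestamp("2015-06-30")))
```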

Now let's check the third effect, which can be rephrased as: is skew, demeaned by the average of current skews across all assets, predictive of future performance for that asset?

Code changes:

```python
all_SR_list = []
all_tstats = []
all_frequencies = ["7D", "14D", "30D", "90D", "180D", "365D"]

...

for freqtouse in all_frequencies:
    all_results = []

    # relative value skews need averaging
    skew_df = {}
    for instrument in instrument_codes:
        # rolling skew over period
        instrument_skew = percentage_returns[instrument].rolling(freqtouse).skew()
        skew_df[instrument] = instrument_skew

    skew_df_all = pd.DataFrame(skew_df)
    skew_df_median = skew_df_all.median(axis=1)

    for instrument in instrument_codes:
        ...
        period_skew = perc_returns[p_start:p_end].skew()
        avg_skew = skew_df_median[:p_end].iloc[-1]
        period_skew = period_skew - avg_skew
        subsequent_return = perc_returns[s_start:s_end].mean()
        ...
```
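Outside the period loop, the same cross-sectional demeaning can be written in vectorised pandas: subtract each date's median skew across instruments from every instrument's rolling skew. A sketch on simulated data for three hypothetical instruments (the data, names and 90 day window are assumptions; this uses trailing rolling skew on every day rather than the period-aligned skew in the loop).

```python
import numpy as np
import pandas as pd

# Assumption: simulated daily returns for three hypothetical instruments
rng = np.random.default_rng(9)
dates = pd.bdate_range("2010-01-04", periods=1000)
returns = pd.DataFrame(rng.normal(0.0, 0.01, size=(len(dates), 3)),
                       index=dates, columns=["A", "B", "C"])

# Rolling skew per instrument over a trailing 90 calendar day window
skew_df_all = returns.rolling("90D").skew()

# Cross-sectional median of current skews, subtracted row by row
skew_df_median = skew_df_all.median(axis=1)
relative_skew = skew_df_all.sub(skew_df_median, axis=0)

print(relative_skew.tail())
```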

Plots, as before:

To summarise then:

- Using recent skew is very predictive of future returns, if 'recent' means using at least 1 month of returns, and ideally more. The effect is strongest if we use the last 6 months or so of returns.

- Some, but not all, of this effect persists if we normalise skew by the long run average for an asset. So, for example, even for assets which generally have positive skew, you're better off investing in them when their skew is lower than normal

- Some, but not all, of this effect persists if we normalise skew by the current average level of skew. So, for example, even in times when skew generally is negative (2008 anyone?) it's better to invest in the assets with the most negative skew.

We could formally decompose the above effects with, for example, a regression, but I'm more of a fan of using simple trading signals which are linearly weighted, with weights conditional on correlations between signals.

Do these effects hold within asset classes? (relative value)

Rather than normalising skew by the current average across all assets, maybe it would be better to consider the average for that asset class. So we'd be comparing S&P 500 current skew with Eurostoxx, VIX with V2X, and so on.

```python
all_SR_list = []
all_tstats = []
all_frequencies = ["7D", "14D", "30D", "90D", "180D", "365D"]
asset_classes = list(system.data.get_instrument_asset_classes().unique())

for freqtouse in all_frequencies:
    all_results = []

    # relative value skews need averaging
    skew_df_median_by_asset_class = {}
    for asset in asset_classes:
        skew_df = {}
        for instrument in system.data.all_instruments_in_asset_class(asset):
            # rolling skew over period
            instrument_skew = percentage_returns[instrument].rolling(freqtouse).skew()
            skew_df[instrument] = instrument_skew

        skew_df_all = pd.DataFrame(skew_df)
        skew_df_median = skew_df_all.median(axis=1)
        # will happen if only one asset in the asset class
        skew_df_median[skew_df_median == 0] = np.nan
        skew_df_median_by_asset_class[asset] = skew_df_median

    for instrument in instrument_codes:
        # we're going to do rolling returns
        asset_class = system.data.asset_class_for_instrument(instrument)
        perc_returns = percentage_returns[instrument]
        ...
        period_skew = perc_returns[p_start:p_end].skew()
        avg_skew = skew_df_median_by_asset_class[asset_class][:p_end].iloc[-1]
        period_skew = period_skew - avg_skew
        subsequent_return = perc_returns[s_start:s_end].mean()
```

That's interesting: the effect is looking a lot weaker except for the longer horizons. The worse t-stats could be explained by the fact that we have less data (long periods when only one asset is in an asset class and we can't calculate this measure), but the relatively small gap between Sharpe Ratios isn't affected by this.

So almost all of the skew effect is happening at the asset class level. Within asset classes, for futures at least, if you normalise skew by asset class level skew you get a statistically insignificant 0.1 SR units or so of benefit, and then only for fairly slow time frequencies.
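The asset class version swaps the cross-sectional median over all instruments for a median within each asset class. A vectorised sketch with a hypothetical mapping of five instrument codes to asset classes and simulated returns; here a single-instrument class is simply left as NaN, which is an assumption echoing the point above that the measure can't be calculated when only one asset is in an asset class.

```python
import numpy as np
import pandas as pd

# Assumption: hypothetical instruments and asset classes; CORN is alone in its class
asset_class = {"SP500": "Equity", "EUROSTX": "Equity", "VIX": "Vol", "V2X": "Vol", "CORN": "Ags"}
codes = list(asset_class)

# Assumption: simulated daily returns
rng = np.random.default_rng(21)
dates = pd.bdate_range("2012-01-02", periods=750)
returns = pd.DataFrame(rng.normal(0.0, 0.01, size=(len(dates), len(codes))),
                       index=dates, columns=codes)
skew_df_all = returns.rolling("180D").skew()

relative_skew = pd.DataFrame(np.nan, index=skew_df_all.index, columns=codes)
for asset in sorted(set(asset_class.values())):
    members = [code for code in codes if asset_class[code] == asset]
    if len(members) < 2:
        continue  # nothing to be relative to: leave NaN
    class_median = skew_df_all[members].median(axis=1)
    relative_skew[members] = skew_df_all[members].sub(class_median, axis=0)

print(relative_skew.dropna(how="all").tail())
```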

Summary

This has been a long post. And it's been quite a heavy, graph and python laden, post. Let's have a quick recap:

- Most assets have negative skew

- There is quite a lot of sampling uncertainty around skew, which is worse for assets with outliers (high kurtosis) and extreme absolute skew

- Assets which on average have lower (more negative) skew will outperform in the long run.

- This effect is much smaller when we look at risk adjusted returns (Sharpe Ratios), and is driven mainly by the vol markets (VIX, V2X)

- Assets with lower skew right now will outperform those with higher skew right now. This is true for skew measuring and forecasting periods of at least 1 month, and is strongest around the 6 month period. In the latter case an average improvement of 0.4 SR units can be expected.

- This effect is the same regardless of whether skew is compared to the median or compared to zero.

- This effect mostly persists even if we demean skew by the rolling average of skew for a given asset over the last 10 years: time series relative value

- This effect mostly persists if we demean skew by the average of current skews across all assets: cross sectional relative value

- But this effect mostly vanishes if the relative value measure is taken versus the average for the relevant asset class.

This is all very interesting, but it's mostly comparisons, and it still isn't a trading strategy. So in the next post I will consider the implementation of these ideas as a suite of trading strategies:

- Skew measured over the last N days, relative to a long term average across all assets

- Skew measured over the last N days, relative to a long term average for this asset

- Skew measured over the last N days, relative to the current average for all assets

- Skew measured over the last N days, relative to the current average for the relevant asset class

Where N will be in the ballpark of 10 to 250 business days.
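All four variants share the same shape: subtract a reference skew, and flip the sign so that lower than reference skew gives a positive forecast (the convention described earlier for a continuous system). A minimal sketch of that raw forecast, with hypothetical skew values, a single hypothetical reference for all assets, and no forecast scaling or capping, which a full trading rule would presumably add:

```python
import pandas as pd


def raw_skew_forecast(current_skew: pd.Series, reference_skew: pd.Series) -> pd.Series:
    """Hypothetical raw forecast: positive when skew is below its reference, negative above."""
    return -(current_skew - reference_skew)


# Assumption: made-up skews over the last N days, and a single long-run reference
current_skew = pd.Series({"SP500": -0.8, "VIX": 0.6, "JPY": 0.1})
reference_skew = pd.Series(-0.3, index=current_skew.index)

print(raw_skew_forecast(current_skew, reference_skew))
```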

For now it's important to bear in mind that I must not discard any of the above ideas because of likely poor performance:

- Lower values of N, e.g. 2 weeks (though some might be removed because their trading costs are too high)

- Asset class relative value

I am sure I speak for many of your followers, Rob, when I say this is another stimulating post (and very welcome after your book writing and building sabbatical :)). Looking forward to the next one!

Hi Rob,

One of the conclusions of this post is "Assets with lower skew right now will outperform those with higher skew right now." I was trying to think what that would look like in terms of the actual prices/returns of that asset, and what the general meaning/explanation is.

If I understand it correctly, something like that will happen during unexpected negative events for the asset, e.g. when the S&P suddenly drops by more than the "recent usual" of the other assets, sort of. But then how would a rule which buys an asset with a recent negative skew (more negative than other assets) be different from a simple rule which buys assets that "recently dropped in price more than other assets"? I.e. in the simplest terms, "buy S&P500 if it recently dropped a lot"? I guess measuring recent skew instead of recent returns is different because skew shows not only recent negative returns but recent unusually large negative returns; is that correct?

Negative skew means you have fewer losses but they are larger in magnitude. So it might be that there has been a run of good performance, with a few really bad days. In contrast if you had a 6 month period of losses, with an occasional really good day, that would be positive skew.

I see, so a possible "human-level" interpretation of why such a rule might work (if it's appropriate to search for one?) could be "humans tend to overreact to unusually large sudden losses which in reality tend to recover, therefore it could be profitable to buy in such cases"?

No, not really; the rule doesn't work so well when the skew has been recently negative, so it doesn't seem to be that (you could test this specifically and it may well work, but that isn't what this rule is doing). It's more "this is an asset which has shocking down days, so I need to be paid more to own it"

Hmm, makes sense.

Thanks!

Thank you, Rob. Would you be willing to do the same kind of analysis for kurtosis?

Yes - great idea.

Hi Rob,

The paper you linked on skewness in commodities led me to another paper on hedging pressure in commodities: https://papers.ssrn.com/sol3/papers.cfm?abstract_id=1340873

Both papers show strong long-short returns using hedging pressure for commodity futures (SR of ~0.5). I was wondering whether you had considered using hedging pressure data for commodities?

I have looked at COT data in the past and not found anything interesting.
