Optional Christmas themed introduction

Twas the night before Christmas, and all through the house.... OK I can't be bothered. It was quiet, ok? Not a creature was stirring... literally nothing was moving basically. And then a fat guy in a red suit squeezed through the chimney, which is basically breaking and entering, and found a small child waiting for him (I know it sounds dodgy, but let's assume that Santa has been DBS checked*, you would hope so given that he spends the rest of December in close proximity to kids in shopping centres)

* Non-British people reading this blog, I could explain this joke to you, but if you care that much you'd probably care enough to Google it.

"Ho ho" said the fat dude "Have you been a good boy / girl?"

"Indeed I have" said the child, somewhat precociously if you ask me.

"And what do you want for Christmas? A new bike? A doll? I haven't got any Barbies left, but I do have a Robert Oppenheimer action figure; look if you pull this string in his stomach he says 'Now I am become Death destroyer of worlds', and I'll even throw in a Richard Feynman lego mini-figure complete with his own bongo drums if you want."

"Not for me, thank you. But it has been quite a long time since Rob Carver posted something on his blog. I was hoping you could persuade him to write a new post."

"Er... I've got a copy of his latest book if that helps" said Santa, rummaging around in his sack "Quite a few copies actually. Clearly the publisher was slightly optimistic with the first print run."

"Already got it for my birthday when it came out in April" said the child, rolling their eyes.

"Right OK. Well I will see what I can do. Any particular topic you want him to write about in this blog post?"

"Maybe something about portfolio optimisation and uncertainty? Perhaps some more of that bootstrapping stuff he was big on a while ago. And the Kelly criterion, that would be nice too."

"You don't ask for much, do you" sighed Santa ironically as he wrote down the list of demands.

"There need to be really pretty plots as well." added the child.

"Pretty... plots. Got it. Right I'll be off then. Er.... I don't suppose your parents told you to leave out some nice whisky and a mince pie?"

"No they didn't. But you can have this carrot for Rudolph and a protein shake for yourself. Frankly you're overweight and you shouldn't be drunk if you're piloting a flying sled."

He spoke not a word, but went straight to his work, And filled all the stockings, then turned with a jerk. And laying his finger aside of his nose, And giving a nod, up the chimney he rose! He sprang to his sleigh, to his team gave a whistle, And away they all flew like the down of a thistle. But I heard him exclaim, 'ere he drove out of sight,

"Not another flipping protein shake..."

Image: https://pixlr.com/image-generator/ prompt: "Father Christmas as a quant trader"

Brief note on whether it is worth reading this

Somewhat messy python code is available here (with some data here or use your own), and it has no dependency on my open source trading system pysystemtrade so everyone can enjoy it.

Bootstrapping

I am a big fan of bootstrapping. Some definitional stuff before I explain why. Let's consider a couple of different ways to estimate something given some data. Firstly we can use a closed form. If for example we want the average monthly arithmetic return for a portfolio, we can use the very simple formula of adding up the returns and dividing by the number of periods. We get a single number. Although the arithmetic mean doesn't need any assumptions, closed form formulae often require some assumptions to be correct - like a Gaussian distribution. And the use of a single point estimate ignores the fact that any statistical estimate is uncertain.

Secondly, we can bootstrap. To do this we sample the data repeatedly to create multiple new sets of data. Assuming we are interested in replicating the original data series, each new set of data would be the same length as the original, and we'd be sampling with replacement (otherwise we'd just get the original data back in a different order). So for example, with ten years of daily data (about 2500 observations), we'd choose some random day and get the returns data from that. Then we'd keep doing that, not being bothered about choosing the same day more than once (sampling with replacement), until we had done this 2500 times.

Then from this new set of data we estimate our mean, or do whatever it is we need to do. We then repeat this process, many times. Now instead of a single point estimate of the mean, we have a distribution of possible means, each drawn from a slightly different data series. This requires no assumptions to be made, and automatically tells us what the uncertainty of the parameter estimate is. We can also get a feel for how sensitive our estimate is to different variations on the same history. As we will see, this will also lead us to produce estimates that are more robust to the future being not exactly like the past.
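The resampling loop described above can be sketched in a few lines of Python. This is a minimal illustration rather than the code linked from this post: the function name `bootstrap_means` is made up for the example, and the 2500 daily returns are randomly generated placeholders, not real market data.

```python
import random
import statistics


def bootstrap_means(returns, n_bootstraps=1000, seed=0):
    """Return one estimated mean per resampled data series."""
    rng = random.Random(seed)
    n = len(returns)
    means = []
    for _ in range(n_bootstraps):
        # Sample with replacement; new series is the same length as the original
        sample = rng.choices(returns, k=n)
        means.append(statistics.fmean(sample))
    return means


# Hypothetical data: 2500 made-up daily returns standing in for ten years of history
data_rng = random.Random(42)
daily_returns = [data_rng.gauss(0.0003, 0.01) for _ in range(2500)]

point_estimate = statistics.fmean(daily_returns)
means = bootstrap_means(daily_returns)

# quantiles(n=20) gives cut points at 5%, 10%, ..., 95%
cuts = statistics.quantiles(means, n=20)
low, median, high = cuts[0], cuts[9], cuts[18]

print(f"single point estimate:   {point_estimate:.5f}")
print(f"bootstrapped 5%/50%/95%: {low:.5f} / {median:.5f} / {high:.5f}")
```

The gap between the 5% and 95% figures is the uncertainty that the single point estimate hides.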

Note: daily sampling destroys any autocorrelation properties in the data, so it wouldn't be appropriate for example for creating new price series when testing momentum strategies. To do this, we'd have to sample larger chunks of time period to retain the autocorrelation properties. For example we might restrict ourselves to sampling entire years of data. For the purposes of this post we don't mind about autocorrelation, so we can sample daily data.
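For completeness, here is one way the 'larger chunks' idea could look. It is only a sketch under simple assumptions: blocks are consecutive and non-overlapping, a block size of 250 days is used as a rough stand-in for a year, and `block_bootstrap` is a hypothetical helper name. Nothing later in this post relies on it.

```python
import random


def block_bootstrap(returns, block_size, seed=0):
    """Resample a series in contiguous blocks, with replacement."""
    rng = random.Random(seed)
    n = len(returns)
    # Cut the history into consecutive, non-overlapping blocks
    blocks = [returns[i:i + block_size] for i in range(0, n, block_size)]
    sample = []
    while len(sample) < n:
        # Each draw copies a whole block, so the ordering inside it survives
        sample.extend(rng.choice(blocks))
    return sample[:n]


# Hypothetical series: day numbers stand in for returns so the blocks are easy to see
history = list(range(2500))
new_history = block_bootstrap(history, block_size=250)

print(new_history[:3], new_history[250:253])
```

Within each block consecutive days stay next to each other, which is what preserves autocorrelation at horizons shorter than the block.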

Bootstrapping is particularly potent in the field of financial data because we only have one set of data: history. We can't run experiments to get more data. Bootstrapping allows us to create 'alternative histories' that have the same basic character as our actual history, but aren't quite the same. Apart from generating completely random data (which itself will still require some assumptions - see the following note), there isn't really much else we can do.

Bootstrapping helps us with the quant finance dilemma: we want the future to be like the past so that we can use models calibrated on the past in the future, but the future will never be exactly like the past.

Note that bootstrapping isn't quite the same as Monte Carlo. With Monte Carlo we estimate some parameters for the data, making an assumption about its distribution. Then we randomly sample from that distribution. I'm not a fan of this. We have all the problems of making assumptions about the distribution, and of uncertainty about the parameter estimates we use for that distribution.

Portfolio optimisation

- Allocating weights to each asset, where the weights sum to one

- Deciding on the total leverage for the portfolio

- Zero correlation (about what it has been in practice since 1982)

- 2.5% risk free rate (which as in standard finance I assume I can borrow at)

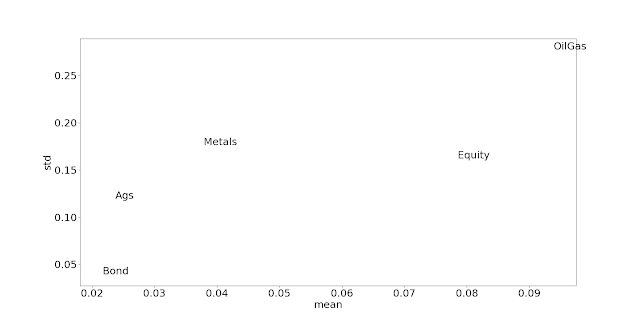

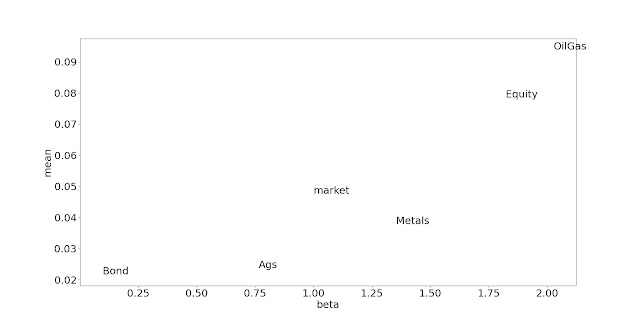

- 3.5% bond returns @ 5% vol

- 5.75% equity returns @ 17% vol
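To get a feel for these assumptions, the following sketch works out the arithmetic mean, standard deviation and Sharpe Ratio of an unlevered bond/equity mix using nothing but the four numbers listed in this section. The function name `portfolio_stats` and the 1% search grid are choices made for the example, and the Gaussian-style closed form ignores the higher moments that the bootstrap captures.

```python
import math

# Forward looking assumptions listed in this section
RISK_FREE = 0.025
BOND_MEAN, BOND_VOL = 0.035, 0.05
EQUITY_MEAN, EQUITY_VOL = 0.0575, 0.17
CORRELATION = 0.0


def portfolio_stats(equity_weight):
    """Annual mean, standard deviation and Sharpe Ratio for an unlevered mix."""
    bond_weight = 1.0 - equity_weight
    mean = equity_weight * EQUITY_MEAN + bond_weight * BOND_MEAN
    variance = (
        (equity_weight * EQUITY_VOL) ** 2
        + (bond_weight * BOND_VOL) ** 2
        + 2 * CORRELATION * equity_weight * EQUITY_VOL * bond_weight * BOND_VOL
    )
    vol = math.sqrt(variance)
    sharpe = (mean - RISK_FREE) / vol
    return mean, vol, sharpe


for weight in (0.0, 0.2, 0.6, 1.0):
    mean, vol, sharpe = portfolio_stats(weight)
    print(f"{weight:4.0%} equities: mean {mean:.2%}, vol {vol:.2%}, SR {sharpe:.3f}")

# Brute-force search in 1% steps for the allocation with the highest Sharpe Ratio
best_weight = max(range(101), key=lambda pct: portfolio_stats(pct / 100)[2]) / 100
print(f"highest closed form Sharpe Ratio at {best_weight:.0%} equities")
```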

Optimal leverage and Kelly

- Return parameters are Gaussian i.i.d. (which financial data famously is not!)

- The return parameters are fixed

- That we have no sampling uncertainty of the return parameters

- We are fine running at full Kelly, which is a notoriously aggressive amount of leverage
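Under exactly the assumptions in this list, the textbook continuous-time Kelly result is that optimal leverage equals excess return divided by variance, and the geometric return at leverage f is approximately r + f(mu - r) - 0.5 f^2 sigma^2. The sketch below applies that standard formula to each asset on its own, purely as an illustration; the function names are hypothetical and this is not necessarily the calculation behind the plot that follows.

```python
def kelly_leverage(mean, vol, risk_free):
    """Textbook full Kelly leverage: excess return over variance."""
    return (mean - risk_free) / vol ** 2


def approx_geometric_return(leverage, mean, vol, risk_free):
    """Gaussian approximation: arithmetic return less half the variance."""
    return risk_free + leverage * (mean - risk_free) - 0.5 * (leverage * vol) ** 2


RISK_FREE = 0.025
assets = {"bonds": (0.035, 0.05), "equities": (0.0575, 0.17)}

for name, (mean, vol) in assets.items():
    full_kelly = kelly_leverage(mean, vol, RISK_FREE)
    print(f"{name}: full Kelly leverage {full_kelly:.2f}")
    # Geometric return falls away on both sides of the optimum
    for multiple in (0.5, 1.0, 1.5, 2.0):
        leverage = multiple * full_kelly
        growth = approx_geometric_return(leverage, mean, vol, RISK_FREE)
        print(f"  {multiple:.1f} x Kelly (leverage {leverage:.2f}): {growth:.2%}")
```

At twice full Kelly the approximate geometric return is back down to the risk free rate, which is why full Kelly is regarded as so aggressive: a modest overestimate of the optimal leverage is punished heavily.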

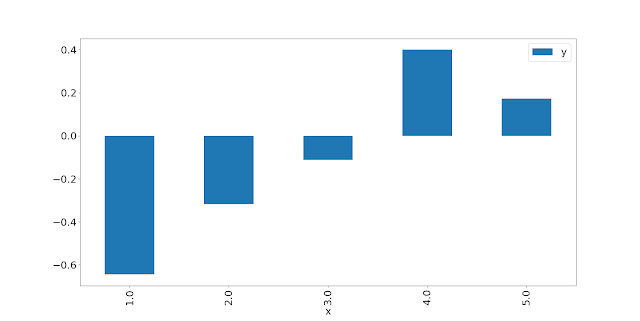

Bootstrapping optimal leverage

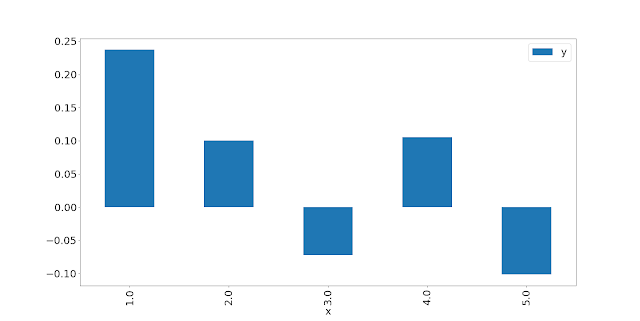

Optimal allocation - mean variance

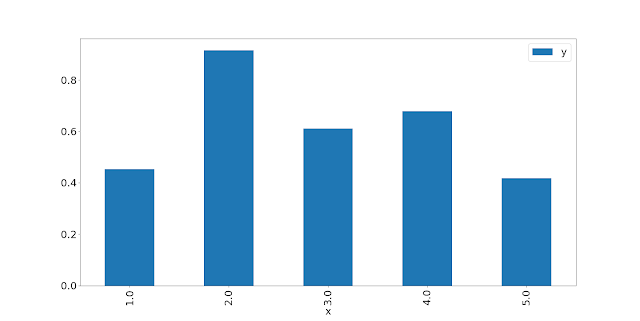

Again, each of these coloured lines represents a different point on the distribution of Sharpe Ratios. The y-axis is the Sharpe Ratio, and the x-axis is the allocation to equities; zero in equities on the far left, and 100% on the far right.

Same procedures as before: first work out your tolerance for uncertainty and hence which line you should be on. Secondly, find the allocation point which maximises Sharpe Ratio. Thirdly, examine the consequences of having a lower or higher allocation - basically how robust is your solution.

For example, for the median tolerance (green line) the best allocation comes in somewhere around 18%. That's a little less than the closed form solution; again this is because we haven't got normally distributed assets here. And there is a reasonably symmetric shape to the gradient around this point, although that isn't true for lower risk tolerances.

You may be surprised to see that the maximum allocation is fairly invariant to uncertainty tolerance; if anything there seems to be a slightly lower allocation to equities the more optimistic one becomes (although we'd have to run a much more granular backtest plot to confirm this). Of course this wouldn't be the case if we were measuring arithmetic or even geometric return. But on the assumption of a separable portfolio weighting problem, the most appropriate statistic is the Sharpe Ratio.

This is good news for Old Skool CAPM enthusiasts! It really doesn't matter what your tolerance for uncertainty is: you should put about 18% of your cash weight (about 43% of your risk weight) in equities, at least on the assumption that future returns have the forward looking expectations for means, standard deviations, and correlations I've specified above, and the historic higher moments and co-moments that we've seen for the last 40 years.
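The procedure in this section (bootstrap a distribution of Sharpe Ratios for each allocation, pick a percentile line, then find its maximum) could be sketched roughly as follows. Heavy caveats apply: the return series here are randomly generated Gaussian placeholders built from the forward looking assumptions, so they have none of the historic higher moments the real analysis uses, and all function names, the 256 day year and the coarse allocation grid are assumptions made for the example.

```python
import math
import random

DAYS_PER_YEAR = 256


def annualised_sharpe(excess_returns):
    """Annualised Sharpe Ratio from daily excess returns."""
    n = len(excess_returns)
    mean = sum(excess_returns) / n
    variance = sum((r - mean) ** 2 for r in excess_returns) / (n - 1)
    return (mean * DAYS_PER_YEAR) / math.sqrt(variance * DAYS_PER_YEAR)


def percentile(sorted_values, pct):
    """Simple rank-based lookup on an already sorted list."""
    index = min(len(sorted_values) - 1, int(pct * len(sorted_values)))
    return sorted_values[index]


def bootstrap_sharpe_by_allocation(equity_xs, bond_xs, allocations,
                                   n_bootstraps=100, seed=0):
    """Sorted bootstrapped Sharpe Ratios for each equity allocation."""
    rng = random.Random(seed)
    n = len(equity_xs)
    results = {w: [] for w in allocations}
    for _ in range(n_bootstraps):
        # Draw the same days for both assets so their co-movement is preserved
        days = rng.choices(range(n), k=n)
        for w in allocations:
            portfolio = [w * equity_xs[d] + (1 - w) * bond_xs[d] for d in days]
            results[w].append(annualised_sharpe(portfolio))
    return {w: sorted(values) for w, values in results.items()}


# Hypothetical daily excess returns (made up, Gaussian, independent)
data_rng = random.Random(1)
daily_scale = math.sqrt(DAYS_PER_YEAR)
equity_xs = [data_rng.gauss(0.0325 / DAYS_PER_YEAR, 0.17 / daily_scale)
             for _ in range(1000)]
bond_xs = [data_rng.gauss(0.01 / DAYS_PER_YEAR, 0.05 / daily_scale)
           for _ in range(1000)]

allocations = [0.0, 0.2, 0.4, 0.6, 0.8, 1.0]
dist = bootstrap_sharpe_by_allocation(equity_xs, bond_xs, allocations)

for pct in (0.1, 0.5, 0.9):
    line = {w: percentile(dist[w], pct) for w in allocations}
    best = max(line, key=line.get)
    print(f"{pct:.0%} line: best allocation {best:.0%} equities, SR {line[best]:.2f}")
```

Each value of `pct` corresponds to one coloured line on the plot; a finer allocation grid and more bootstrap runs would be needed before reading much into where each line peaks.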

Joint allocation

Let's abandon the assumption that we can separate out the problem, and instead jointly optimise the allocation and leverage. Once again the appropriate statistic will be the geometric return. We can't plot these on a single line graph, since we're optimising over two parameters (allocation to equities, and overall leverage), but what we can do is draw heatmaps; one for each point on the return distribution.

Here is the median:

The x-axis is the leverage; lowest on the left, highest on the right. The y-axis is the allocation to equities; 0% on the top, 100% on the bottom. And the heat colour on the z-axis shows the geometric return. Dark blue is very good. Dark red is very bad. The red circle marks the optimum, the highest point in the dark blue region. It's 30% in equities with 4.5 times leverage: 5.8% geometric return.
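A grid like this can be produced by bootstrapping the geometric return for every combination of allocation and leverage, then taking the median in each cell. The sketch below shows the idea on a deliberately tiny grid. It assumes borrowing at the 2.5% risk free rate, a 256 day year, and made-up Gaussian daily excess returns in place of real history, so its numbers will not match the heatmap; the function names are hypothetical.

```python
import math
import random
import statistics

DAYS_PER_YEAR = 256
RISK_FREE_DAILY = 0.025 / DAYS_PER_YEAR


def annual_geometric_return(daily_returns):
    """Annualised geometric return; -100% if any single day wipes us out."""
    if min(daily_returns) <= -1.0:
        return -1.0
    mean_log = sum(math.log1p(r) for r in daily_returns) / len(daily_returns)
    return math.expm1(mean_log * DAYS_PER_YEAR)


def bootstrap_grid(equity_xs, bond_xs, allocations, leverages,
                   n_bootstraps=50, seed=0):
    """Median bootstrapped geometric return per (allocation, leverage) cell."""
    rng = random.Random(seed)
    n = len(equity_xs)
    cells = {(w, lev): [] for w in allocations for lev in leverages}
    for _ in range(n_bootstraps):
        days = rng.choices(range(n), k=n)
        for w in allocations:
            excess = [w * equity_xs[d] + (1 - w) * bond_xs[d] for d in days]
            for lev in leverages:
                # Levered return = risk free rate + leverage x excess return
                levered = [RISK_FREE_DAILY + lev * x for x in excess]
                cells[(w, lev)].append(annual_geometric_return(levered))
    return {cell: statistics.median(values) for cell, values in cells.items()}


# Hypothetical daily excess returns (made up, Gaussian, independent)
data_rng = random.Random(1)
daily_scale = math.sqrt(DAYS_PER_YEAR)
equity_xs = [data_rng.gauss(0.0325 / DAYS_PER_YEAR, 0.17 / daily_scale)
             for _ in range(750)]
bond_xs = [data_rng.gauss(0.01 / DAYS_PER_YEAR, 0.05 / daily_scale)
           for _ in range(750)]

allocations = [0.0, 0.25, 0.5, 0.75, 1.0]
leverages = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
grid = bootstrap_grid(equity_xs, bond_xs, allocations, leverages)

# Rows are equity allocations (0% at the top), columns are leverage levels
for w in allocations:
    row = "  ".join(f"{grid[(w, lev)]:7.2%}" for lev in leverages)
    print(f"{w:4.0%} | {row}")

best_cell = max(grid, key=grid.get)
print("best cell (allocation, leverage):", best_cell)
```

Swapping `statistics.median` for a different percentile would give the grid for another point on the distribution.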

But the next question we should be asking is about robustness. An awful lot of this plot is dark blue, so let's start by removing everything below 3% so we can see the optimal region more clearly:


You can now see that there is still quite a big area with a geometric return over 5%. It's also clear from the fact there is variation of colour within adjacent points that the bootstrapped samples are still producing enough randomness to make it unclear exactly where the optimum is; and this also means if we were to do some statistical testing we'd be unable to distinguish between the points that are whiteish or dark blue.

In any case when we are unsure of the exact set of parameters to use, we should use a blend of them. There is a nice visual way of doing this. First of all, select the region you think the optimal parameters come from. In this case it would be the banana shaped region, with the bottom left tip of the banana somewhere around 2.5x leverage, 50% allocation to equities; and the top right tip around 6.5x leverage, 15% allocation. And then you want to choose a point which is safely within this shape, but further from steep 'drops' to much lower geometric returns which means in this case you'd be drawn to the top edge of the banana. This is analogous to avoiding the steep drop when you apply too much leverage in the 'optimal leverage' problem.

I would argue that something around the 20% point in equities, leverage 3.0 is probably pretty good. This is pretty close to a 50% risk weight in equities, and the resulting expected standard deviation of 15.75% is a little under equities. In practice if you're going to use leverage you really should adjust your position size according to current risk, or you'd get badly burned if (when) bond vol or equity vol rises.
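The risk weight and standard deviation figures quoted above follow directly from the volatility assumptions (17% for equities, 5% for bonds, zero correlation). Here is a quick check, taking risk weight to mean each asset's cash weight times its volatility, normalised to sum to one; that definition is an assumption, chosen because it reproduces the 43% figure quoted earlier for an 18% cash weight.

```python
import math

EQUITY_VOL, BOND_VOL = 0.17, 0.05


def risk_profile(equity_weight, leverage):
    """Equity risk weight and levered portfolio vol, assuming zero correlation."""
    equity_risk = equity_weight * EQUITY_VOL
    bond_risk = (1.0 - equity_weight) * BOND_VOL
    equity_risk_weight = equity_risk / (equity_risk + bond_risk)
    unlevered_vol = math.sqrt(equity_risk ** 2 + bond_risk ** 2)
    return equity_risk_weight, leverage * unlevered_vol


risk_weight, levered_vol = risk_profile(equity_weight=0.20, leverage=3.0)
print(f"equity risk weight {risk_weight:.1%}, portfolio vol {levered_vol:.2%}")
```

This prints a risk weight of 45.9% and a standard deviation of 15.75%, a little under the 17% assumed for equities on their own.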

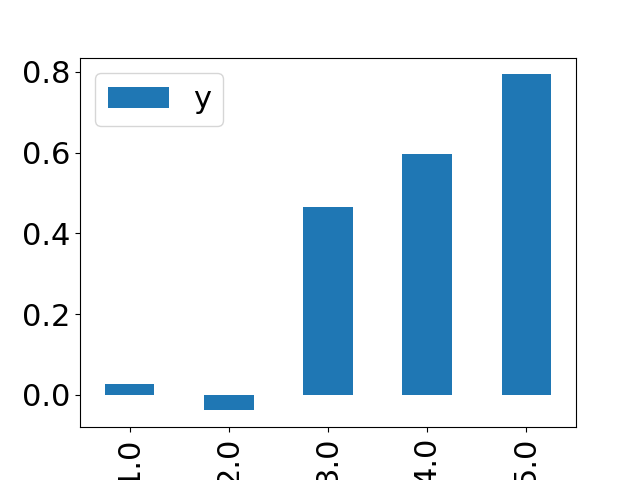

Let's look at another point on the distribution, just to get some intuition. Here is the 25th percentile point, again with lower returns taken out to make the picture clearer:

Conclusion

Of course the point here isn't to advocate a specific blend of bonds and equities; the results here depend to some extent on the forward looking assumptions that I've made. But I do hope it has given you some insight into how bootstrapping can give us much more robust outcomes plus some great intuition about how uncertainty tolerance can be used as a replacement for the more abstract risk tolerance.

Now go back to bed before your parents wake up!
