If you don't understand what the heck a forecast scalar is, then you might want to read my book (chapter 7). If you haven't bought it, then you might as well know that the scalar is used to modify a trading rule's forecast so that it has the correct average absolute value, normally 10.

Here are some of my thoughts on the estimation of forecast scalars, with a quick demo of how it's done in the code. I'll be using this "pre-baked system" as our starting point:

```python
from systems.provided.futures_chapter15.estimatedsystem import futures_system

system = futures_system()
```

The code I use for plots etc in this post is in this file.

Even if you're not using my code, it's probably worth reading this post, as it gives you some idea of the real "craft" of creating trading systems and some of the issues you have to consider.

Targeting average absolute value

The basic idea is that we're going to take the natural, raw forecast from a trading rule variation and look at its absolute value. We're then going to take an average of that value. Finally, we work out the scalar that would give us the desired average (usually 10).

Notice that for non-symmetric forecasts this will give a different answer to measuring the standard deviation, since the standard deviation takes the average forecast into account (it measures dispersion around the mean). Suppose you had a long-biased forecast that varied between 0 and +20, averaging +10. The average absolute value will be about 10, but the standard deviation will probably be closer to 5.

Neither approach is "right" but you should be aware of the difference (or better still avoid using biased forecasts).
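To make the difference concrete, here is a quick simulation. It assumes, purely for illustration, that the long-biased forecast is uniformly distributed between 0 and +20; any distribution with a non-zero mean would show the same kind of gap between the two measures.

```python
import numpy as np

# Illustrative assumption: a long-biased raw forecast, uniform on [0, 20]
rng = np.random.default_rng(42)
forecast = rng.uniform(0.0, 20.0, size=100_000)

# What forecast scaling targets: the average absolute value
avg_abs = np.mean(np.abs(forecast))
# The standard deviation measures dispersion around the mean (about +10 here)
std_dev = np.std(forecast)

print(f"average absolute value: {avg_abs:.2f}")  # about 10
print(f"standard deviation:     {std_dev:.2f}")  # about 20 / sqrt(12), roughly 5.8
```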

Use a median, not a mean

From above: "We're then going to take an average of that value..."

Now when I say average I could mean the mean. Or the median. (Or the mode, but that's just silly.) I prefer the median, because it's more robust to occasional outlier forecast values (these normally occur with a forecast normalised by standard deviation, when we get a really low figure for the normalisation).
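A toy comparison of the two averages, using made-up absolute forecasts with a handful of blow-ups standing in for a near-zero normalisation (the numbers are illustrative assumptions, not real data):

```python
import numpy as np

rng = np.random.default_rng(0)
# Hypothetical well-behaved absolute forecasts
clean = np.abs(rng.normal(0.0, 12.5, size=1_000))
# The same data with five extreme outliers, e.g. from dividing by a tiny vol estimate
dirty = clean.copy()
dirty[:5] = 5_000.0

print(f"mean:   {clean.mean():6.2f} -> {dirty.mean():6.2f}")          # jumps
print(f"median: {np.median(clean):6.2f} -> {np.median(dirty):6.2f}")  # barely moves
```

Bear in mind that for a symmetric distribution the median of absolute values is smaller than their mean (roughly 0.84 times for a Gaussian), so swapping one for the other changes the level of the estimate, not just its robustness.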

The default function I've coded up uses a rolling median (syscore.algos.forecast_scalar_pooled). Feel free to write your own and use that instead:

```python
system.config.forecast_scalar_estimate['func'] = "syscore.algos.yesMumIwroteMyOwnFunction"
```

Pool data across instruments

In chapter 7 of my book I state that good forecasts should be consistent across multiple instruments, by using normalisation techniques so that a forecast of +20 means the same thing (strong buy) for both S&P500 and Eurodollar.

One implication of this is that the forecast scalar should also be identical for all instruments. And one implication of that is that we can pool data across multiple markets to measure the right scalar. This is what the code defaults to doing.

Of course to do that we should be confident that the forecast scalar ought to be the same for all markets. This should be true for a fast trend following rule like this:

```python
## don't pool
system.config.forecast_scalar_estimate['pool_instruments'] = False

results = []
for instrument_code in system.get_instrument_list():
    # most recent value of the estimated scalar for this instrument
    scalar = system.forecastScaleCap.get_forecast_scalar(instrument_code, "ewmac2_8")
    results.append(round(float(scalar.iloc[-1]), 2))

print(results)
# [13.13, 13.13, 13.29, 12.76, 12.23, 13.31]
```

Close enough to pool, I would say. For something like carry you might get a less consistent result even when the rule is properly scaled: it's a slower signal, so instruments with a short history will give noisier estimates (plus some instruments just persistently have more carry than others; that's why the rule works).

```python
results = []
for instrument_code in system.get_instrument_list():
    scalar = system.forecastScaleCap.get_forecast_scalar(instrument_code, "carry")
    results.append(round(float(scalar.iloc[-1]), 2))

print(results)
# [10.3, 58.52, 11.26, 23.91, 21.79, 18.81]
```

The odd ones out are V2X (with a very low scalar) and Eurostoxx (very high); both have only a year and a half of data, which isn't really enough to be sure of the scalar value.

One more important thing: the default function takes a cross-sectional median of absolute values first, and then takes a time-series average of that. The reason I do it that way round, rather than time series first, is that otherwise, when new instruments move into the average, they'll make the scalar estimate jump horribly.
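Here is a minimal pandas sketch of that ordering: cross-sectional median first, then a time-series average over an expanding window. It is written from the description above rather than copied from syscore.algos, so the function name, the defaults and the synthetic data are all my own assumptions.

```python
import numpy as np
import pandas as pd

def pooled_forecast_scalar(raw_forecasts: pd.DataFrame,
                           target_abs_forecast: float = 10.0,
                           min_periods: int = 500) -> pd.Series:
    """Hypothetical pooled scalar: one column of raw forecasts per instrument."""
    # Cross-sectional (CS) median of absolute forecasts on each date;
    # instruments with no data yet (NaN) are simply skipped
    cs_median = raw_forecasts.ffill().abs().median(axis=1)
    # Time-series (TS) average of the CS median over an expanding window
    avg_abs_value = cs_median.expanding(min_periods=min_periods).mean()
    return target_abs_forecast / avg_abs_value

# Synthetic example: three instruments, the third starting later
rng = np.random.default_rng(1)
dates = pd.bdate_range("2000-01-03", periods=2000)
raw = pd.DataFrame(rng.normal(0.0, 2.5, size=(2000, 3)),
                   index=dates, columns=["A", "B", "C"])
raw.iloc[:800, 2] = np.nan

scalar = pooled_forecast_scalar(raw)
print(scalar.dropna().iloc[[0, -1]])
```

Because the time-series average is taken last, the late-starting instrument only changes the daily cross-sectional median from the date it arrives; it can't drag the whole historical average with it.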

Finally, if you're some kind of weirdo (who has stupidly designed an instrument-specific trading rule), then this is how you'd estimate everything individually:

```python
## don't pool
system.config.forecast_scalar_estimate['pool_instruments'] = False

## need a different function
system.config.forecast_scalar_estimate['func'] = "syscore.algos.forecast_scalar"
```

Use an expanding window

As well as being consistent across instruments, good forecasts should be consistent over time. Sure, it's likely that forecasts can remain low for several months, or even a couple of years if they're slower trading rules, but a forecast scalar shouldn't average 10 in the 1980s, 20 in the 1990s, and 5 in the 2000s.

For this reason I don't advocate using a moving window to average out my estimate of average forecast values; better to use all the data we have with an expanding window.

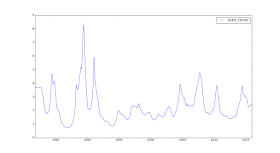

For example, here's my estimate of the scalar for a slow trend following rule, using a moving window of one year. Notice the natural peaks and troughs as we get periods with strong trends (like 2008, 2011 and 2015), and periods without them.

There's certainly no evidence that we should be using a varying scalar for different decades (though things are a little crazier in the early part of the data, perhaps because we have fewer markets contributing). The shorter estimate just adds noise, and will be a source of additional trading in our system.
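A quick synthetic check of that point, assuming a slow, trendy raw forecast (here just heavily smoothed Gaussian noise, an illustrative stand-in for a slow trend rule): the scalar from a one-year moving window wanders around with the signal's cycle, while the expanding-window estimate settles down.

```python
import numpy as np
import pandas as pd

rng = np.random.default_rng(7)
dates = pd.bdate_range("1990-01-01", periods=25 * 256)
noise = pd.Series(rng.normal(0.0, 100.0, len(dates)), index=dates)
# Illustrative slow raw forecast: smoothed noise, so it has long "cycles"
abs_forecast = noise.ewm(span=200, min_periods=200).mean().abs()

target = 10.0
moving = target / abs_forecast.rolling(256, min_periods=256).mean()
expanding = target / abs_forecast.expanding(min_periods=512).mean()

# Total variation: how much each scalar estimate moves around over the backtest
common = moving.dropna().index.intersection(expanding.dropna().index)
tv_moving = moving[common].diff().abs().sum()
tv_expanding = expanding[common].diff().abs().sum()
print(f"moving window:    {tv_moving:.1f}")
print(f"expanding window: {tv_expanding:.1f}")
```

Every extra unit of movement in the moving-window scalar passes straight through to the scaled forecast, and hence to positions, which is the additional trading mentioned above.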

If you insist on using a moving window, here's how:

```python
## By default we use an expanding window by making this parameter *large*, eg 1000 years of daily data
## Here's how I'd use a four year window (4 years * 250 business days)
system.config.forecast_scalar_estimate['window'] = 1000
```

Goldilocks amount of minimum data - not too much, not too little

The average absolute value of a forecast will vary over the "cycle" of the forecast. This means that estimating it over a short period may well give you the wrong answer.

For example, suppose you're using a very slow trend-following signal looking for 6 month trends, and you use just a month of data to find the initial estimate of your scalar. You might be in a period of a really strong trend, and get an unrealistically high value for the average absolute forecast, and thus a scalar that is biased downwards.

Check out this plot of the raw forecast for a very slow trend system on Eurodollars:

Notice how for the first year or so there is a very weak signal. If I'm now crazy enough to use a 50 day minimum, and fit without pooling, we get the following estimate for the forecast scalar:

Not nice. The weak signal has translated into a massive overestimate of the scalar.

On the other hand, using a very long minimum window means we'll either have to burn a lot of data, or effectively be fitting in sample for much of the time (depending on whether we backfill; see next section).

The default is two years, which feels about right to me, but you can easily change it, eg to one year:

```python
## use a year (250 trading days)
system.config.forecast_scalar_estimate['min_periods'] = 250
```

Cheat, a bit

So if we're using 2 years of minimum data, what do we do if we have less than 2 years? It isn't so bad if we're pooling, since we can use another instrument's data before we get our own, but what if this is the first instrument we're trading? Do we really want to burn our first two years of precious data?

I think it's okay to cheat here, and backfill the first valid value of a forecast scalar. We're not optimising for maximum performance here, so this doesn't feel like a forward looking backtest.

Just be aware that if you're using a really long minimum window then you're effectively fitting in sample during the period that is backfilled.

Naturally if you disagree, you can always change the parameter:

```python
system.config.forecast_scalar_estimate['backfill'] = False
```
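To see what a minimum window and backfilling do to the estimate, here is a small sketch on a single expanding-window scalar. The parameter names mirror the config keys above, but the function itself is a hypothetical illustration rather than pysystemtrade's code, and the data are random.

```python
import numpy as np
import pandas as pd

def expanding_scalar(abs_forecast: pd.Series, min_periods: int = 500,
                     target: float = 10.0, backfill: bool = True) -> pd.Series:
    """Hypothetical expanding-window forecast scalar with an optional backfill."""
    scalar = target / abs_forecast.expanding(min_periods=min_periods).mean()
    if backfill:
        # 'Cheat, a bit': copy the first valid estimate back over the start of the data
        scalar = scalar.bfill()
    return scalar

rng = np.random.default_rng(3)
abs_fc = pd.Series(np.abs(rng.normal(0.0, 8.0, 1500)),
                   index=pd.bdate_range("2010-01-01", periods=1500))

strict = expanding_scalar(abs_fc, backfill=False)
cheat = expanding_scalar(abs_fc, backfill=True)
print(strict.isna().sum(), cheat.isna().sum())  # 499 0
```

Every backfilled value equals the first genuine estimate, which is why the backfilled period is effectively fitted in sample.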

Conclusion

Perhaps the most interesting thing about this post is how careful and thoughtful we have to be about something as mundane as estimating a forecast scalar.

And if you think this is bad, some of the issues I've discussed here were the subject of debates lasting for years when I worked for AHL!

But being a successful systematic trader is mostly about getting a series of mundane things right. It's not usually about discovering some secret trading rule that nobody else has thought of. If you can do that, great, but you'll also need to ensure all the boring stuff is right as well.

Hi Rob,

For variations within a trading rule, i.e. differing lookbacks for the EWMAC, are you calculating a diversification multiplier between variations? If you are, is it using a bootstrapping method or are you just looking at the entire history?

I'm piecing together how it's being done from your code, but so far my attempts have produced a very slow version of the code.

I don't do it this way, no. I throw all my trading rules into a single big pot, and on that work out FDM and weights.

There is an alternative, which is to group rules together as you suggest, work out weights and an FDM for the group, and then repeat the same exercise across groups. However it isn't something I intend to code up.

Dear Rob,

I've been playing with the system and estimated forecasts for the ES EWMAC(18,36) rule from 2010 to 2016 using both the MA and EWMA volatility approaches.

The scaled EWMA forecast looks fine, but the scaled MA forecast is biased upwards, oscillating around 10 with a range of -20 to 50. I believe this is due to low volatility (an uptrend in equity prices and index futures), especially in the period from 2012 to 2014. Should I just floor the volatility at some level when calculating the forecast using the MA approach? Also, should I annualize the stddev when working out the MA forecast?

From your experience, which approach is better - MA or EWMA when calculating forecasts?

I'd never use MA. When you get a large return the MA vol will pop up; when it drops out of the window the MA vol pops down. So it's super jumpy, and this affects its general statistical properties. Fixing the vol at a floor is treating the symptom, not the problem, and will have side effects (the position won't respond to an initial spike in vol when below the floor).

Note: The system already includes a vol floor (syscore.algos.robust_vol_calc) which defaults to flooring the vol at the 5% quantile of vol in the last couple of years.
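For readers who want to see the idea, here is a rough sketch of an EWMA vol estimate floored at a low quantile of its own recent history. The 35-day span, the 500-day floor window and the other defaults are my assumptions for illustration; check syscore.algos.robust_vol_calc itself for the real parameters and details.

```python
import numpy as np
import pandas as pd

def floored_ewma_vol(returns: pd.Series, span: int = 35, floor_quantile: float = 0.05,
                     floor_days: int = 500, floor_min_periods: int = 100) -> pd.Series:
    """Sketch: EWMA vol, floored at a low quantile of its own recent values."""
    vol = returns.ewm(span=span, min_periods=10).std()
    floor = vol.rolling(floor_days, min_periods=floor_min_periods).quantile(floor_quantile)
    # Row-wise max skips NaN, so before the floor is available we just use the raw vol
    return pd.concat([vol, floor], axis=1).max(axis=1)

rng = np.random.default_rng(5)
dates = pd.bdate_range("2012-01-02", periods=1040)
# 1,000 days of ~1% daily moves, then an unusually quiet spell of 40 flat days
rets = pd.Series(np.r_[rng.normal(0.0, 0.01, 1000), np.zeros(40)], index=dates)

raw_vol = rets.ewm(span=35, min_periods=10).std()
robust = floored_ewma_vol(rets)
print(f"raw: {raw_vol.iloc[-1]:.4f}  floored: {robust.iloc[-1]:.4f}")
```

Because the EWMA reacts smoothly rather than popping up and down as returns enter and leave a fixed window, the floor only needs to bite in unusually quiet spells like the one simulated here.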

Dear Rob, could I ask for a bit of clarification? (It could just be confusion of my own making, but I would appreciate your help.)

In the book, under "Summary for Combining forecasts", you mention that the order is to first combine the raw forecasts from all trading rule variations and then apply the forecast scalar, whereas here the forecast scalar is applied at the trading rule variation level.

My questions are:

1. Which approach do you really recommend?

2. If the scalar is to be applied to the combined forecast, how do I assess the average absolute forecast across variations?

Thank you in advance. Puneet

I think you've misread the book (or it's badly written). The forecast scalar is for each individual trading rule variation.

Rob, thank you for the clarification. The book is fantastic. The confusions are of my own making.

One more for you on forecast scalars: when you talk about looking at the average absolute value of forecasts across instruments, are these forecasts raw or volatility adjusted? If the forecasts are not vol adjusted, won't the absolute value of the instrument play a part in determining the forecast scalar? That would not be right in my opinion, more so if we want a single scalar across instruments. Thanks in advance for your help.

Yes forecasts should always be adjusted so that they are 'scale-less' and consistent across instruments before scaling. This will normally involve volatility adjusting.

I probably missed it, but do you explicitly test that forecast magnitude correlates with forecast accuracy? For example, do higher values of EWMAs result in greater accuracy? I'm assuming there's an observed, albeit weak, correlation. I haven't yet immersed myself in your book, so I may be barking up the wrong tree.

I have tested that specific relationship, and yes it happens. If you think about it logically most forecasting rules "should" work that way.

Rob, thank you for being so generous with your expertise. I really enjoyed your book and recommended it to several friends who also purchased it.

In your post, you show that the instrument forecast scalars are fairly consistent for the EWMAC trading rule but not for the carry rule. Do you still then pool and use one forecast scalar for all instruments when using the carry rule?

Yes I use a single scalar for all instruments with carry as well.

Dear Rob,

So for pooling, I do the following:

1. I generate forecasts for each instrument separately.

2. I take the cross-sectional median of absolute forecast values across all the instruments I'm pooling. What if the dates I have data for are inconsistent across instruments?

3. I take the time average of the cross-sectional median found above.

4. I divide 10 by this average to get the forecast scalar.

Am I correct on that?

Also, not related to this topic, but rather to trading rules:

What do you think of the idea behind using a negative skew rule such as mean reversion to some average - e.g. take a linear deviation from some moving average - the further the price from it, the stronger the forecast but with opposite sign. Wouldn't this harm EWMAC trend rule?

Thanks,

Peter

1. yes

2. yes. It just means in earlier periods you will have fewer data points in your median.

3, 4, yes.

"e.g. take a linear deviation from some moving average - the further the price from it, the stronger the forecast but with opposite sign. Wouldn't this harm EWMAC trend rule?"

It's fine. Something like this would probably work at different time periods to trend following which seems to break down for holding periods of just a few days or for over a year. It would also be uncorrelated which is obviously good. But running it at the same frequency as EWMAC would be daft, as you'd be running the inverse of a trading rule at the same time as the rule itself.
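Going back to step 2 of Peter's list, here is a toy illustration with made-up absolute forecasts for three hypothetical instruments that start on different dates. pandas skips the missing values, so the early medians simply use fewer instruments; for brevity the time average here is over the whole (tiny) sample rather than a rolling or expanding window.

```python
import numpy as np
import pandas as pd

dates = pd.bdate_range("2020-01-06", periods=5)
abs_forecasts = pd.DataFrame({
    "A": [8.0, 12.0, 10.0, 9.0, 11.0],
    "B": [np.nan, np.nan, 6.0, 14.0, 7.0],   # starts on day 3
    "C": [np.nan, 20.0, 4.0, 10.0, 13.0],    # starts on day 2
}, index=dates)

cs_median = abs_forecasts.median(axis=1)   # steps 1-2: NaNs skipped
n_used = abs_forecasts.count(axis=1)       # instruments in each median
time_avg = cs_median.mean()                # step 3
scalar = 10.0 / time_avg                   # step 4

print(pd.DataFrame({"median": cs_median, "n": n_used}))
print(f"scalar = {scalar:.3f}")
```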

Dear Rob,

I'm still struggling with forecast scalars.

1. For example, for one of my EWMAC rule variations I get forecast scalars ranging from 7 to 20 for different instruments, and one weird outlier with a monotonically declining scalar from 37 to 20. Do I still pool data to get one forecast scalar for all instruments or not?

2. Also, the forecast scalar changes with every new data point; which value do I use to scale forecasts?

For example, I get the following forecasts and scalars for 5 data points:

| Forecast | Scalar |
|---|---|
| 5 | 2 |
| 4 | 2.5 |
| 4 | 2.5 |
| 6 | 1.7 |
| 7 | 1.25 |

For a scaled forecast, do I just multiply the values row by row, e.g. 5*2, 4*2.5 ... 7*1.25, or use the latest value of the forecast scalar for all my forecast data points, e.g. 5*1.25, 4*1.25 ... 7*1.25?

I would love to show a picture, but I have no idea how to attach it to a comment. Is it possible?

1. If you are sure you have created a forecast that ought to be consistent across instruments (and ewmac should be) then yes pool. Such wildly different values suggest you have *very* short data history. Personally in this situation I'd use the forecast scalars from my book rather than estimating them.

2. In theory you should use the value of the scalar that was available at that time, otherwise your backtest is forward looking.
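Using Peter's five data points, the difference between the two options looks like this. The point-in-time version multiplies each forecast by the scalar estimated up to that date; the other applies the final estimate everywhere, which leaks future information into the backtest.

```python
import pandas as pd

raw_forecast = pd.Series([5.0, 4.0, 4.0, 6.0, 7.0])
scalar = pd.Series([2.0, 2.5, 2.5, 1.7, 1.25])   # estimate available on each date

point_in_time = raw_forecast * scalar            # 10, 10, 10, 10.2, 8.75
lookahead = raw_forecast * scalar.iloc[-1]       # 6.25, 5, 5, 7.5, 8.75

print(pd.DataFrame({"point_in_time": point_in_time, "lookahead": lookahead}))
```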

I don't think you can attach pictures here. You could try messaging me on twitter, facebook or linkedin.

Hi Rob (and P_Ser)

If you want to share pictures, it can be done via screencast, for example. I've used it to ask my question below :-)

For my understanding: is the way of working in the following link consistent with what you mean in this article? https://www.screencast.com/t/V9pOP3gwz28

First step: take the median of all instruments for each date (= the cross-sectional median, I suppose).

Second step: take a normal average (or mean) of all these medians.

In the screenshot you can see this worked out for some dummy data.

I also have the idea of calculating the forecast scalars only yearly and then smoothing them out as described in this link: http://qoppac.blogspot.be/2016/01/correlations-weights-multipliers.html

I don't think there is any added value in calculating the forecast scalar on each date, as in Peter's example. Do you agree with this?

Kris

Hopefully it's clear from https://github.com/robcarver17/pysystemtrade/blob/master/syscore/algos.py function forecast_scalar what I do.

```python
# Take CS average first
# we do this before we get the final TS average otherwise get jumps in
# scalar
if xcross.shape[1] == 1:
    x = xcross.abs().iloc[:, 0]
else:
    x = xcross.ffill().abs().median(axis=1)

# now the TS
# (modern pandas: Series.rolling(...).mean() replaces the removed pd.rolling_mean)
avg_abs_value = x.rolling(window=window, min_periods=min_periods).mean()

scaling_factor = target_abs_forecast / avg_abs_value
```

Hi,

I've already found the code on GitHub, but I must confess that reading other people's code in a language that I don't know is very difficult for me... but that's my problem. So everything I write myself is based on the articles you wrote.

As far as I can read the code, it is the same as what I mean in the Excel screenshot.

Thanks, Kris

Let's assume that you choose a forecast scalar such that the forecast is distributed as a normal distribution centred at 0 with expected absolute value 10. Since the expected absolute value of a Gaussian is equal to the stddev * sqrt(2/pi), this then seems to imply that the standard deviation of your forecast will be (10/sqrt(2/pi))=12.5.
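Spelling out the arithmetic in that paragraph, for a zero-mean Gaussian forecast $X \sim N(0, \sigma^2)$ scaled so that its average absolute value is 10:

$$
\mathbb{E}|X| = \sigma\sqrt{\frac{2}{\pi}} = 10
\quad\Longrightarrow\quad
\sigma = \frac{10}{\sqrt{2/\pi}} = 10\sqrt{\frac{\pi}{2}} \approx 12.53
$$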

Looking at Chapter 10 of your book, when you calculate what size position to take based on your forecast, it appears that you choose a size assuming that the stddev of the forecast is 10. You use this assumption to ensure that the stddev of your daily USD P&L equals your daily risk target. But since the stddev is actually 12.5 rather than 10, doesn't this mean that you are taking on 25% too much risk?

However, I think the effect is less than this in practice because we're truncating the ends of the forecast Gaussian (at +-20) which will tend to bring the realised stddev down again. Applying the formula from Wikipedia for the stddev of a truncated Gaussian with underlying stddev 12.5, mean 0, and limits +-20, I get that the stddev of the resulting distribution is 9.69, which is almost exactly 10 again.

So, I think this explains why we end up targeting the right risk, despite fudging the difference between the expected absolute value and the stddev.

This does imply that you shouldn't change the limits of the forecast -- increasing the limits from +-20 to +-30 will cause you to take 18% too much risk!

Python code for calculation: https://gist.github.com/anonymous/703537febb3299508a5e0f6702746c83

Yes I used E(abs(forecast)) in the book as it's easier to explain than the expected standard deviation. The other difference, of course, is that if the mean isn't zero (which for slow trend following and carry is very likely) then the standard deviation will be biased downwards compared to abs() although that would be easy to fix by using MAD rather than STDEV.

But yes you're right the effect of applying a cap is to bring things down to the right risk again. You can add this to the list of reasons why using a cap is a good thing.

Incidentally the caps on FDM and IDM also tend to suppress risk; and I've also got a risk management overlay in my own system which does a bit more of that and which I'll blog about in due course.

Good spot, this is a very subtle effect! And of course in practice forecast distributions are rarely gaussian and risk isn't perfectly predictable so the whole thing is an approximation anyway and +/- 25% on realised risk is about as close as you can get.

Thanks for the reply Robert - interesting points. I look forward to your post on risk management.

For the record I realised that my reasoning above is slightly wrong because we don't discard forecasts outside +-20 but cap them, so we can't apply the formula for the truncated Gaussian, we need to use a slightly modified version (see g at https://gist.github.com/anonymous/66b06af035795af82e17dbb106d24be6). With this fix, the stddev of the forecast turns out to be 11.3 i.e. it causes us to take on 13% too much risk.
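A small check of the numbers in this exchange, using only the standard library. It assumes a zero-mean Gaussian forecast, as the comments do; the function names are my own, and the formulas are the standard ones for a normal distribution truncated to, or clipped at, plus or minus the cap.

```python
import math

def norm_pdf(x: float) -> float:
    return math.exp(-0.5 * x * x) / math.sqrt(2.0 * math.pi)

def norm_cdf(x: float) -> float:
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))

def truncated_sd(sigma: float, cap: float) -> float:
    """SD of N(0, sigma^2) after discarding values outside [-cap, cap]."""
    a = cap / sigma
    mass_inside = 2.0 * norm_cdf(a) - 1.0
    return sigma * math.sqrt(1.0 - 2.0 * a * norm_pdf(a) / mass_inside)

def capped_sd(sigma: float, cap: float) -> float:
    """SD of N(0, sigma^2) after clipping values to [-cap, cap]."""
    a = cap / sigma
    # E[X^2] from the uncapped middle, plus cap^2 times the probability in the tails
    inner = sigma ** 2 * (2.0 * norm_cdf(a) - 1.0 - 2.0 * a * norm_pdf(a))
    tails = cap ** 2 * 2.0 * (1.0 - norm_cdf(a))
    return math.sqrt(inner + tails)   # the mean is zero by symmetry

sigma = 10.0 / math.sqrt(2.0 / math.pi)   # about 12.53, so that E|X| = 10
for cap in (20.0, 30.0):
    print(f"cap {cap:.0f}: truncated {truncated_sd(sigma, cap):.2f}, "
          f"capped {capped_sd(sigma, cap):.2f}")
```

With limits of +/-20 this reproduces the corrected 11.3 for the capped case (and the truncated 9.69 to within rounding); with the truncated formula and limits of +/-30 it gives the roughly 18% excess mentioned earlier.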

Hi Rob

Congratulations on the great first book, which I thoroughly enjoyed reading. I am trying to follow the logic presented by Stephen above. Is the vol of the forecast a reflection of system risk? My understanding is that the forecast vol is a measure of the dispersion of the risk over time, whereas the average values in either half of the Gaussian are the measure of the average risk. The fact that the average absolute forecast is linked to the implied standard deviation of a Gaussian forecast does not, to my mind, change that. Finally, with caps at +/-20 on a Gaussian distribution, it seems you are compressing 10% (or more if tails are fatter) of the extremities, as the standard deviation is 12.5 (and +/-20 equates to +/-1.6 SD). Or have I misunderstood something?

Average absolute value (also called mean absolute deviation) and standard deviation are just two different measures of the dispersion of a distribution, the difference being of course that the MAD is not corrected for the mean, whilst the standard deviation is (plus, of course, the fact that one is an arithmetic mean of absolute values, the other a quadratic mean, i.e. the square root of the mean of squared deviations).

Their relationship will depend on the underlying distribution: whether it is mean zero, or Gaussian, or something else.

[For instance, with any mean-zero Gaussian distribution MAD~0.8*SD. If the mean is positive, and equal to half the standard deviation, then MAD~0.9*SD. You can have a lot of fun generating these relationships in Excel...]
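Those ratios are easy to check without Excel. Here, as in the reply, "MAD" means the mean of absolute values (not corrected for the mean), and the formula is the standard one for the expected absolute value of a Gaussian with mean mu and standard deviation sigma.

```python
import math

def mean_abs_over_sd(mu_over_sigma: float) -> float:
    """E|X| / sigma for X ~ N(mu, sigma^2), as a function of k = mu / sigma."""
    k = mu_over_sigma
    pdf = math.exp(-0.5 * k * k) / math.sqrt(2.0 * math.pi)
    cdf = 0.5 * (1.0 + math.erf(k / math.sqrt(2.0)))
    # sigma * sqrt(2/pi) * exp(-k^2/2) + mu * (2 * Phi(k) - 1), divided by sigma
    return 2.0 * pdf + k * (2.0 * cdf - 1.0)

print(f"mean zero:       {mean_abs_over_sd(0.0):.3f}")   # 0.798
print(f"mean = 0.5 * SD: {mean_abs_over_sd(0.5):.3f}")   # 0.896
```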

So doubling the standard deviation of a mean-zero Gaussian will also double its average absolute value.

Because we scale to our target vol, both measures work equally well to measure the dispersion of the risk, as well as the average absolute risk. Indeed at AHL we used both MAD and SD for forecast scaling at different times.

I appreciate that intuitively they seem to mean different things; but they are inextricably linked. The reason I chose MAD rather than SD in my book was deliberately to play on the more intuitive nature of MAD as a measure of average risk - I'm sorry if it has confused things.

Anyway, I hope this clears that up a little.

As to your final point, yes with a +/- 20 cap on a Gaussian forecast we are indeed capping 10% of the values.

Hi Rob, you haven't confused things; I think I have explained myself poorly. I sort of get the link between MAD and stdev (albeit only after reading the comments above, your post on thresholding AND a little bit of googling). It's a nice way to quickly target average risk directly. What I am struggling with is Stephen's observation: "doesn't this mean that you are taking on 25% too much risk?" When looked at from the perspective of average risk, I don't see that you are. I have spent some time thinking about this, so if I have got it wrong, I apologise in advance for not getting it (aka 'being thick'):

The system is either long or short. If we split the normal distribution of the forecast into two halves, then once properly scaled, the conditional mean forecast given a short is -10, and the conditional mean when long is +10 (the unconditional mean is obviously 0). Hence the average risk in a subsystem comes from a position equating to 10 forecast 'points', which is how you have designed your system. This implies a standard deviation of ~12.5, but to my mind, the second moment doesn't affect average 'risk', which is a linear function of the averages in the conditional halves of the Gaussian. And this average is still 10, and therefore translates to the desired average position size in your system over time. In a nutshell, I am struggling to equate the 'dispersion' of forecasts with 'average risk' in the system, and this is where my thinking deviates from Stephen's observation.

Hi Patrick. You've clearly thought about this very deeply, and actually I'm coming to the conclusion that you are right (and indeed Stephen is wrong!) which also means that the risk targeting in my book is exactly right. We shouldn't really think about 'risk' when measuring forecast dispersion. You are correct to say that if the average absolute value is 10, and the system is scaled accordingly, then the risk of the system will be whatever you think it is. You'd only be at risk of mis-estimating the risk if you used MAD to measure the dispersion of *instrument returns*. Thanks again for this debate, it's great to still be discovering more about the subtleties of this game.

Thanks for clarifying, Rob, and you're welcome. It's a tiny contribution compared to the ocean of wisdom you have imparted.

Would it be feasible to use a scaling function for this, such as:

(40 * cdf(0.5 * (Value - Median) / InterQuartileRange)) - 20

(cdf = cumulative distribution function, Value is your volatility-normalised prediction, and the Median and InterQuartileRange are calculated from an accumulating Value series.)
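A minimal pandas sketch of that scaling function, assuming (as the commenter's tool apparently does) a standard normal cdf, plus an expanding median and interquartile range with a 250-observation warm-up that I've picked for illustration. The function name and the input data are hypothetical.

```python
import math
import numpy as np
import pandas as pd

def cdf_scaled_forecast(value: pd.Series, min_periods: int = 250) -> pd.Series:
    """40 * cdf(0.5 * (value - median) / IQR) - 20, using expanding estimates."""
    expanding = value.expanding(min_periods=min_periods)
    median = expanding.median()
    iqr = expanding.quantile(0.75) - expanding.quantile(0.25)
    z = 0.5 * (value - median) / iqr
    # Standard normal cdf via erf; NaNs (during the warm-up) pass through
    cdf = z.map(lambda v: 0.5 * (1.0 + math.erf(v / math.sqrt(2.0))), na_action="ignore")
    return 40.0 * cdf - 20.0

rng = np.random.default_rng(11)
normalised_prediction = pd.Series(rng.normal(0.3, 1.0, 1000))
scaled = cdf_scaled_forecast(normalised_prediction)
print(scaled.describe())
```

The output is bounded in (-20, +20) and centred on the running median, so the +0.3 bias in this made-up input is removed; that is the de-meaning effect Rob warns about in his reply.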

The above is a standard function in one of the tools I use and I've found it pretty useful for scaling problems relating to inputs/outputs for financial time series.

And superb book(s), many thanks.

Yes that is a nice alternative. The results will depend on the distributional properties of the underlying forecast; if they're normal around zero it won't really matter what you choose.

So one word of warning about your method is that it will de-mean forecasts by their long run average, which you may not want to do. Of course it's easy to adapt it so this doesn't happen.

Many thanks for the reply. Re your point on "the distributional properties of the underlying forecast": the default function I'm using assumes a normal distribution, but I suppose (once enough predictions have accumulated) one could base the cdf on the actual distribution, rather than assuming a normal one?

Many thanks also for the warning re the long run average. Will try to resist the temptation to optimise the average length...

Assuming one has scaled individual forecasts (say of the various EWMAC speeds) to +/-20 and wishes to use bootstrapping + Markowitz (rather than handcrafting) to derive the forecast weights, how are you defining the returns generated by the individual forecasts that are needed as Markowitz inputs? The next day's percentage return? (Assuming daily bars being used.)

You just run a trading system for each forecast individually (with vol scaling as usual to decide what positions should be) for some nominal risk target and see what the returns are.
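A minimal sketch of that idea in percentage-return terms. It assumes daily prices, a 35-day EWMA vol estimate, 256 business days a year and a nominal 20% annual risk target; all of these, and the function name, are illustrative choices rather than anything specified in the reply.

```python
import numpy as np
import pandas as pd

def rule_returns(price: pd.Series, scaled_forecast: pd.Series,
                 annual_risk_target: float = 0.20, vol_span: int = 35) -> pd.Series:
    """Hypothetical daily returns of a vol-scaled system driven by one forecast."""
    daily_ret = price / price.shift(1) - 1.0
    daily_vol = daily_ret.ewm(span=vol_span, min_periods=10).std()
    daily_target = annual_risk_target / np.sqrt(256)
    # Fraction of capital to hold: a forecast of 10 means "full" target risk
    position = (scaled_forecast / 10.0) * daily_target / daily_vol
    # Use yesterday's position against today's return, so there is no lookahead
    return position.shift(1) * daily_ret

rng = np.random.default_rng(2)
dates = pd.bdate_range("2005-01-03", periods=3000)
price = pd.Series(100.0 * np.cumprod(1.0 + rng.normal(0.0, 0.01, 3000)), index=dates)
forecast = pd.Series(10.0, index=dates)   # constant forecast, just to check the risk

pnl = rule_returns(price, forecast)
print(f"realised annual vol: {pnl.std() * np.sqrt(256):.1%}")
```

Running this once per trading rule variation gives one return series each, which is what the bootstrapping and optimisation would then consume.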

Sorry, I'm being dim. "Each forecast individually" = each daily forecast or each set of forecasts? So would the trading system return be the annualised Sharpe ratio for a desired risk target?

Hi Rob,

I have found my historic estimates of pooled forecast scalars (pooled across 50 instruments) for carry to be more or less a straight line upwards with time (over 40+ years). To make sure this isn't due to a bug in my code, I was wondering if this is something you might have seen in your own research?

No, haven't seen that. Sounds like a bug

I was using MAD to get forecast scalars for both carry and EWMACs, and I am getting results in line with expectations on the EWMACs, so the calculation seems to have been applied correctly. For carry I get a line showing a forecast scalar of around 12 in the 1970s, rising to about 24 last year. This whole exercise got me looking deeper into the distribution of carry (not carry returns), and it seems, as you hinted above, that each instrument has a unique distribution with different degrees of skewness (and sign) depending on the instrument, and this skew may be persistent in certain instruments. Since my understanding is that targeting risk using MAD requires a zero-skew Gaussian, I tried, as an alternative, calculating the carry scalar directly from the standard deviation along the time axis for each instrument, to see if this made more sense. I then applied pooling, on the basis that the sum of skewed distributions should approach a symmetric Gaussian. Doing it this way around still gave me an upward path from the 1970s, but stabilising at a value close to 30 over the last ten years (a number mentioned in your book, of course). It was noisy, so I applied a smooth. The upward path I cannot completely get rid of. I suspect it is a feature of the increasing number and type of instruments entering the pool (presumably with lower carry vol over time). Does my latter approach seem reasonable to you?

Sorry, correction: I didn't mean MAD above. I was referring to the mean of the absolute values. Very different, of course!

Carry clearly doesn't have a zero average value, which means that using MAD will give different answers to using Mean(absolute value) which is what I normally use. It's hard to normalise something which has a systematic bias like this (and that's even without considering non gaussian returns). Smoothing makes sense and pooling makes sense. If you plot the average scalar for each instrument independently you can verify whether your hypothesis makes sense.

It seems I am calling bias, "skew" and average(abs), "MAD". Lol, I need to brush up on my stats. Thank you for the pointers in the meantime.

OK, so pooling seems to be problematic for carry, because of the relatively wide dispersion in scalar values across instruments. I guess you did warn us. A minor technical question on how to apply these scalars in an expanding-window backtest: do you recommend scaling forecasts daily over the entire history by the daily updated values? Would you also therefore update the forecast scalar daily in a live system?

During a backtest yes I'd update these values (maybe not daily, but certainly annually)

In live trading I'd probably use fixed parameters (the last value from the backtest)
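A small sketch of "update annually in the backtest, freeze for live", assuming you already have a daily point-in-time scalar series. Holding the first estimate available in each calendar year avoids lookahead, since that estimate only uses data up to its own date. The function is hypothetical and the numbers are made up.

```python
import pandas as pd

def hold_scalar_annually(daily_scalar: pd.Series) -> pd.Series:
    """Hypothetical: keep each year's first available estimate for the whole year."""
    return daily_scalar.groupby(daily_scalar.index.year).transform("first")

dates = pd.bdate_range("2020-12-30", "2021-01-05")
daily_scalar = pd.Series([1.0, 2.0, 3.0, 4.0, 5.0], index=dates)

backtest_scalar = hold_scalar_annually(daily_scalar)   # 1, 1, 3, 3, 3
live_scalar = daily_scalar.iloc[-1]                    # last value from the backtest
```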

Belated thank you: your answer led me to refactor my code, and I've only just got it working again. Results are a little more sensible now. Thanks again!

I'm using bootstrapping + Markowitz to select forecast weights and am intrigued as to how you apply the costs to obtain after cost performance (as per footnote 87 of chapter nine of Systematic Trading). Assuming continuous forecasts, where (for example) a particular EWMAC parameter set's scaled values would only incur transaction costs on a zero crossover (switch long/short), do you just amortize total costs per parameter set continuously on a per day basis?

Apologies - forgot to add thanks to previous message!

I'm thinking that the alternative of calculating and applying costs on a per bootstrap sample basis might distort the results. For example, if using a one year look back for returns (as an input to Markowitz) the number of zero crossovers (and hence costs) in that time could vary significantly depending on sampling. Also, if trying to allow for serial correlation (as you mention in the book in your bootstrap comments) you might take one random sample and then the next N succeeding samples (before taking another random sample and repeating the process) which could cause even greater distortion.

At present, I'm calculating the total costs for the period over which I'm estimating forecast weights (based on the number of forecast zero crossovers) and then averaging that as a daily cost 'drag'. So the net return each day is the gross return minus that cost (with gross return obviously depending on whether the forecast from the previous day was above or below zero).

I'm not convinced this is necessarily the ideal approach. I'd much value your opinion/insight. Many thanks.

You should pay costs whenever your position changes, not just when the crossover passes through zero (or are you running a binary system?)

Leaving that aside for the moment, I'm not convinced it will make much difference whether your costs are distributed evenly across bootstraps or unevenly; if you have enough bootstraps it will end up shifting the distribution of returns by exactly the same amount.
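Here is a quick synthetic check of this point. It assumes numpy and simple day-by-day bootstrapping with replacement, which ignores serial correlation. The same bootstrap samples are evaluated twice: once with each day's actual (lumpy) cost, and once with an even daily drag equal to the average cost. The data are randomly generated for illustration, not real returns, and the function name is hypothetical.

```python
import numpy as np


def compare_cost_treatments(gross, costs, n_boot=2000, sample_len=250, seed=0):
    """Average bootstrapped mean net return under two cost treatments.

    lumpy: each sampled day pays the cost actually incurred on that day
    drag:  each sampled day pays the same average daily cost
    Both treatments use the same bootstrap samples.
    """
    gross = np.asarray(gross, dtype=float)
    costs = np.asarray(costs, dtype=float)
    rng = np.random.default_rng(seed)
    daily_drag = costs.mean()
    lumpy_means, drag_means = [], []
    for _ in range(n_boot):
        # Sample days with replacement (no block sampling)
        idx = rng.integers(0, len(gross), size=sample_len)
        lumpy_means.append(np.mean(gross[idx] - costs[idx]))
        drag_means.append(np.mean(gross[idx]) - daily_drag)
    return float(np.mean(lumpy_means)), float(np.mean(drag_means))


# Synthetic example: a cost is only paid every 50th day
rng = np.random.default_rng(42)
gross = rng.normal(0.0005, 0.01, size=1000)
costs = np.zeros(1000)
costs[::50] = 0.002
lumpy, drag = compare_cost_treatments(gross, costs)
print(f"lumpy costs: {lumpy:.6f}, even drag: {drag:.6f}")
```

The two averages come out almost identical. The individual bootstrap means under lumpy costs are slightly more dispersed, because the cost actually drawn varies from sample to sample. The shift in the average is the same in both cases.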

As it happens I use the daily cost drag approach myself when I'm doing costs in Sharpe Ratio units, so it's not a bad approach.
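Below is a minimal sketch of a daily cost drag that charges for every change in position, not only for sign changes. It assumes numpy and a daily position series; a scaled forecast can stand in for the position, as discussed further down the thread. The parameter `cost_per_unit_traded` is hypothetical and is expressed in the same units as the returns.

```python
import numpy as np


def daily_cost_drag(position, cost_per_unit_traded):
    """Total cost of all position changes, spread evenly over every day.

    position: daily positions (a scaled forecast can stand in as a proxy)
    cost_per_unit_traded: hypothetical cost, in return units, of changing
        the position by one unit
    """
    position = np.asarray(position, dtype=float)
    # Every change in position is a trade, not just a change of sign
    units_traded = np.abs(np.diff(position)).sum()
    total_cost = units_traded * cost_per_unit_traded
    return total_cost / len(position)


def net_returns(gross_returns, position, cost_per_unit_traded):
    """Gross daily returns minus the same cost drag on each day."""
    drag = daily_cost_drag(position, cost_per_unit_traded)
    return np.asarray(gross_returns, dtype=float) - drag


positions = [0.0, 7.0, 7.5, 7.5, -5.0]
print(daily_cost_drag(positions, 0.001))
```

Positions of 0, 7, 7.5, 7.5 and -5 trade 20 units in total. At a cost of 0.001 per unit that is 0.02, or a drag of 0.004 on each of the five days.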

Many thanks - much appreciated.

Sorry - just re-reading your answer and specifically "You should pay costs whenever your position changes". I'm trying to calculate forecast weights, so if I have scaled my forecasts to a range of +/-20 (i.e. not binary but continuous) then surely I'm only changing from a long to short position as I cross zero? Say on day 1 the forecast is 7, so a long position is opened, and on day 2 it's 7.5, so it's still long.

Or do you mean that even if bootstrapping for forecast weights I have to factor in the costs of increasing the long position size because the forecast has increased from 7 to 7.5? Surely that would cause a cost explosion if repeated every day?

If your forecast changes by enough then your position will change... and that will mean you will trade... and you have to pay costs.

Absolutely - I understand. The reason for my confusion was that on a platform like Oanda, with no defined contract or microlot size, your block value is minuscule. Your instrument value volatility is therefore similarly small (and your volatility scalar correspondingly large), so only a very small change in forecast is needed to cause a change in position size. Hence... Many thanks (and apologies).

Except that you should use 'position inertia' (as I describe it in the book), so you won't do very small trades.
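Here is a minimal sketch of position inertia. It assumes a 10% buffer measured relative to the optimal position; the exact rule in the book may differ in detail, and the function name is hypothetical. The held position only moves to the optimal one when the gap between them exceeds the buffer.

```python
def apply_position_inertia(optimal_positions, buffer=0.10):
    """Hold the current position unless the optimal position is more than
    `buffer` (as a fraction of the optimal position) away from it.

    Hypothetical helper; assumes we start flat (position 0).
    """
    held = []
    current = 0.0
    for optimal in optimal_positions:
        if optimal == 0:
            # Relative gap is undefined for a flat target: trade if we hold anything
            should_trade = current != 0
        else:
            should_trade = abs(current - optimal) / abs(optimal) > buffer
        if should_trade:
            current = optimal
        held.append(current)
    return held


print(apply_position_inertia([7, 7.5, 8, 10, -5]))  # prints [7, 7, 8, 10, -5]
```

The move from 7 to 7.5 is less than 10% and is not traded. This is what stops small day-to-day forecast changes from turning into a cost explosion.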

Good point. Thx

To calculate a particular rule variation's trade turnover using bootstrapping - to calculate net returns and thus variation weighting - it seems there's a bit of a chicken and egg situation. You need your instrument weights to calculate your target position and thus your position inertia, but to get those you first need the correlation between subsystem returns, which you don't yet know?

Assuming I've understood that correctly (quite possibly not...), I've resorted to using a very rough approximation for the position inertia (and thus trade turnover for individual variations) by taking the individual scaled +/-20 forecasts as a proxy for position size. Therefore, a change in forecast of at least 2 is required to trigger a position adjustment and thus incur 1/2 (adjustment not reversal, so 1/2 round trip) standardised costs. Does that seem a reasonable alternative? Many thanks.

No, there is no problem. You calculate the position for a given rule variation assuming it has all the capital allocated (and the amount of capital can be arbitrary). Then you calculate the turnover.

Sorry for the delay in responding.

I calculate turnover for a trading rule purely by looking at the turnover of the individual forecast. This won't include any turnover created by other sources: vol scaling, position rounding, etc.
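Here is a minimal sketch of measuring turnover from the forecast alone. It assumes numpy and 256 business days a year. It defines one round trip as trading twice the average absolute forecast, into an average-sized position and back out. That definition is an illustrative assumption and not necessarily the exact one used in pysystemtrade; the function name is hypothetical.

```python
import numpy as np


def forecast_turnover(forecast, days_per_year=256):
    """Approximate annual round trips implied by a forecast series.

    Treats the forecast itself as the position (ignoring vol scaling,
    rounding, etc.). One round trip is assumed to mean trading twice the
    average absolute forecast: into an average-sized position and out again.
    """
    forecast = np.asarray(forecast, dtype=float)
    avg_abs_forecast = np.mean(np.abs(forecast))
    avg_daily_trading = np.mean(np.abs(np.diff(forecast)))
    return avg_daily_trading * days_per_year / (2.0 * avg_abs_forecast)


flip_flop = [10.0, -10.0] * 50   # reverses every day
steady = [10.0] * 100            # never changes
print(forecast_turnover(flip_flop), forecast_turnover(steady))
```

A forecast flipping between +10 and -10 every day gives 256 round trips a year, and a constant forecast gives zero. Real forecasts sit somewhere in between, and slower rule variations score lower.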

Many thanks

Hmm, late to the party, but a basic question. I don't understand why, when calculating E(abs(raw-forecast)), we're treating that value as an average buy? So that is our 10. What's the intuition behind that?

This is discussed at length in 'Systematic Trading' and 'Leveraged Trading', but the choice of +10 is completely arbitrary; I just think it is nice to have forecasts on that sort of scale.
